Introduction

Recently, a range of Ethereum Layer 2 projects, such as Optimism, zkSync, Polygon, Arbitrum, and StarkNet, have introduced their own Layer 2 Stack solutions. These solutions aim to provide an open-source, modular codebase that allows developers to customize their own Layer 2.

As is well known, the Ethereum network is notorious for its low performance and high gas fees. Although Layer 2 solutions such as OP and zkSync Era have addressed these issues, Dapps, whether deployed directly on the EVM or on a Layer 2, still face a fundamental "compatibility" problem. This applies not only at the code level, where a Dapp's underlying code must be compatible with the EVM, but also at the level of Dapp sovereignty.

The first aspect concerns the code level. Because the EVM must accommodate a diverse range of application types, it is optimized for the average use case, striking a balance across all types of users. However, this makes it less friendly to the Dapps deployed on top of it. For example, GameFi applications may prioritize speed and performance, while SocialFi users may prioritize privacy and security. Because the EVM is one-size-fits-all, Dapps must sacrifice certain aspects, resulting in code-level compatibility issues.

The second aspect concerns sovereignty. Because all Dapps share the same infrastructure, two layers of governance emerge: application governance and underlying governance. Application governance is inevitably subordinate to underlying governance, so some Dapps' specific requirements can only be met through upgrades to the underlying EVM. In this sense, Dapps lack sovereignty. For example, new features in Uniswap V4 require EVM support for transient storage, which depends on EIP-1153 being included in the Cancun upgrade.

To address these problems of low performance and lack of sovereignty on Ethereum L1, Cosmos (2019) and Polkadot (2020) emerged. Both aim to help developers build customized chains, giving blockchain Dapps sovereignty and high-performance cross-chain interoperability and creating a fully interconnected network.

Today, four years later, various Layer 2 projects have introduced their own superchain network proposals: first the OP Stack, followed by the ZK Stack, Polygon 2.0, and Arbitrum Orbit, and finally StarkNet, which also introduced the Stack concept to keep up with the times.

Cosmos and Polkadot, the pioneers of fully interconnected networks, have paved the way.

What kind of sparks will fly now that the Layer 2 Stacks are entering the same arena? To provide a comprehensive and in-depth perspective, we will explore this topic in a series of three articles.

This article, the first in the series, surveys the technical solutions of each project. The second article will examine the economic models and ecosystems of each solution and summarize what to consider when choosing between Layer 1 and Layer 2 Stacks. The last article will discuss how Layer 2s can build their own superchains and wrap up the series.

I. Cosmos

Cosmos is a decentralized network of parallel blockchains. It provides a general-purpose development framework, the Cosmos SDK, that lets developers easily build their own blockchains. Multiple independent, application-specific blockchains link and communicate with each other, forming an interoperable and scalable network.

1. Structural Framework

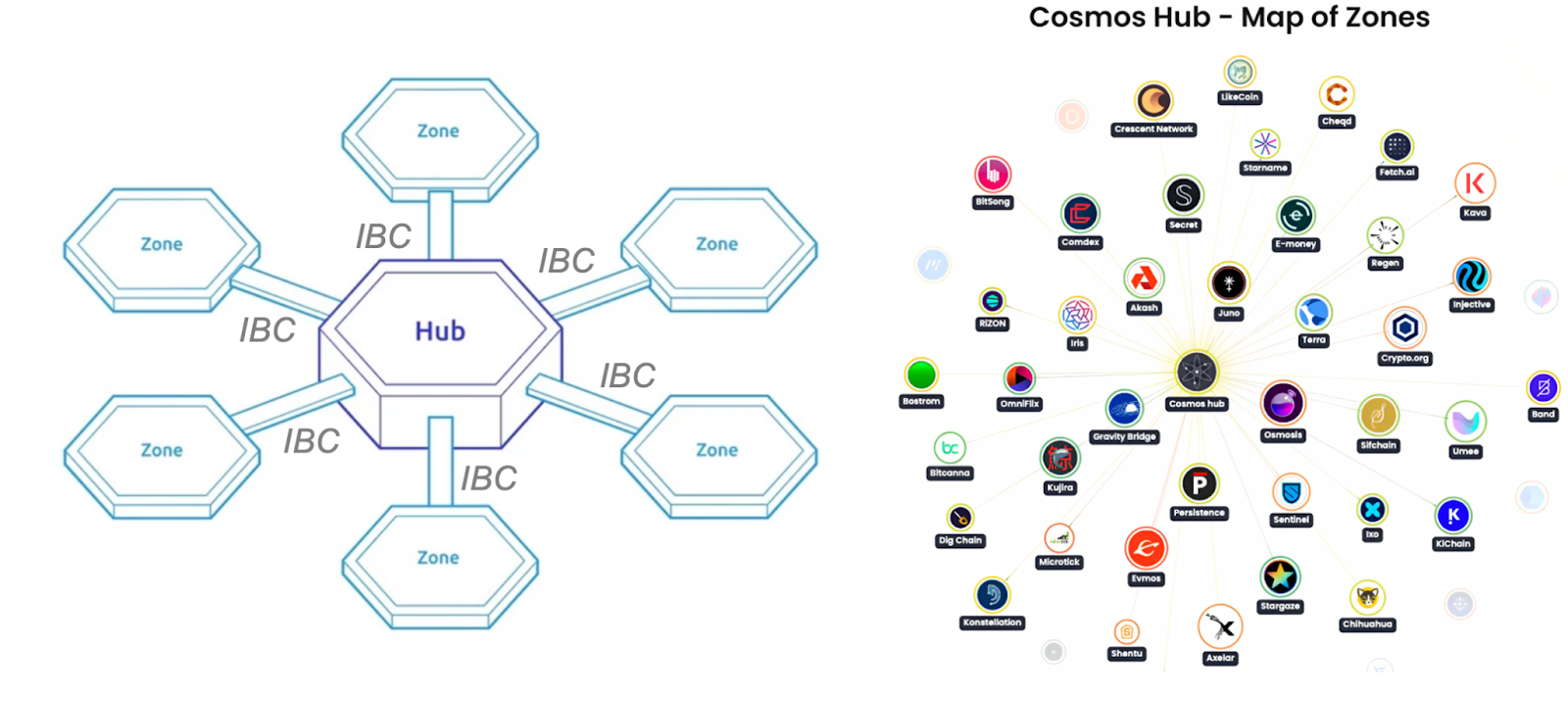

When the ecosystem contains a large number of application chains that each communicate and transfer tokens with one another over the IBC protocol, the overall network becomes as tangled and hard to follow as a spider web.

To solve this problem, Cosmos proposes a layered architecture with two types of blockchains: Hubs (central hub chains) and Zones (regional chains).

Zones are regular application chains, while Hubs are blockchains designed specifically to connect Zones and carry communication between them. When a Zone establishes an IBC connection with a Hub, the Hub can communicate with (i.e., send to and receive from) all the Zones connected to it, so each Zone needs only one connection rather than one per counterpart, greatly reducing communication complexity.
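The saving from the hub-and-zone design is easy to quantify. The sketch below assumes, purely for illustration, that a full mesh needs one IBC connection per pair of zones while hub-and-zone needs one connection per zone; the function names are hypothetical.

```python
# Count the connections needed to link n zones.
# Assumption (for illustration only): in a full mesh every pair of zones
# keeps its own IBC connection; in hub-and-zone each zone connects only
# to a single hub.

def full_mesh_connections(n_zones: int) -> int:
    """One connection per unordered pair of zones: n(n-1)/2."""
    return n_zones * (n_zones - 1) // 2


def hub_connections(n_zones: int) -> int:
    """One connection per zone, all terminating at the hub."""
    return n_zones


if __name__ == "__main__":
    for n in (5, 50, 500):
        print(f"{n} zones: full mesh = {full_mesh_connections(n)}, hub = {hub_connections(n)}")
```

For 50 zones this gives 1225 mesh connections versus 50 hub connections: the mesh grows quadratically with the number of zones, the hub model only linearly.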

Note that Cosmos and the Cosmos Hub are two different things. The Cosmos Hub is just one of the chains in the Cosmos ecosystem, serving mainly as the issuer of $ATOM and as a communication center. You may think of the Hub as the center of the ecosystem, but any chain can become a Hub. Cosmos is fundamentally committed to the autonomy of each chain and its absolute sovereignty; if a Hub became a center of power, that sovereignty would no longer be real. Keep this in mind when thinking about Hubs.

2. Key Technologies

2.1 IBC

IBC (Inter-Blockchain Communication) allows tokens and data to be transferred between heterogeneous chains. In the Cosmos ecosystem, chains share the same SDK framework and use the Tendermint consensus engine. Even so, they are heterogeneous, because each chain may have different features, use cases, and implementation details.

So how can communication between chains with heterogeneous properties be achieved?

The key is that only the consensus layer needs to provide instant finality. Instant finality means that as long as more than 2/3 of the validators (by voting power) are honest, that is, fewer than 1/3 are faulty, the chain will not fork, so once a transaction is included in a block, it is final. However much heterogeneous chains differ in use cases and implementation, as long as their consensus layers provide instant finality, they can follow a unified set of rules for interoperating.
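The finality threshold can be made concrete. This Python sketch assumes integer voting power and ignores rounds, proposers, and timeouts; the function names are hypothetical. It captures why the threshold is more than 2/3: any two sets that each hold more than 2/3 of the voting power overlap in more than 1/3, so two conflicting blocks could both be committed only if more than 1/3 of the power signed both, which honest validators never do.

```python
# Tendermint-style voting thresholds with integer voting power.
# Simplified: ignores rounds, proposers, and timeouts.

def has_commit_quorum(signed_power: int, total_power: int) -> bool:
    """True if the signers hold strictly more than 2/3 of all voting power."""
    # Compare 3*signed > 2*total to avoid floating-point rounding.
    return 3 * signed_power > 2 * total_power


def max_tolerated_faulty(total_power: int) -> int:
    """Largest faulty power f that still satisfies f < total/3."""
    return (total_power - 1) // 3


if __name__ == "__main__":
    print(has_commit_quorum(67, 100))   # True
    print(has_commit_quorum(66, 100))   # False
    print(max_tolerated_faulty(100))    # 33
```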

The following is a basic process for cross-chain communication, assuming we want to transfer 10 $ATOM from Chain A to Chain B:

Tracing: Each chain runs a light client of the other chain, so each chain can verify the other.

Bonding: First, 10 $ATOM are locked on Chain A, where the user can no longer spend them, and a proof of the lock is produced.

Relay: A relayer between Chain A and Chain B delivers the lock proof.

Validation: Chain B verifies the proof against Chain A's blocks. If it is valid, 10 $ATOM are created on Chain B.

At this point, the $ATOM on Chain B is not real $ATOM but a voucher representing the locked tokens. The locked $ATOM on Chain A cannot be used, while the voucher on Chain B can be used normally. When the user sends the voucher back to Chain A, it is burned on Chain B and the locked $ATOM on Chain A is released.
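The lock-and-mint flow can be sketched in a few lines. This is a toy Python model loosely following ICS-20 fungible token transfer semantics (escrow on the source chain, a voucher minted on the destination, burned on return). The class and function names and the `chainA/ATOM` voucher label are hypothetical, and light-client verification, channels, relayers, acknowledgements, and timeouts are all omitted.

```python
from collections import defaultdict


class Chain:
    """Minimal ledger: per-account balances plus an escrow pool per denom."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.balances: dict = defaultdict(lambda: defaultdict(int))
        self.escrow: dict = defaultdict(int)

    def debit(self, account: str, denom: str, amount: int) -> None:
        if self.balances[account][denom] < amount:
            raise ValueError(f"{account} lacks {amount} {denom} on {self.name}")
        self.balances[account][denom] -= amount

    def credit(self, account: str, denom: str, amount: int) -> None:
        self.balances[account][denom] += amount


def send_to(src: Chain, dst: Chain, sender: str, receiver: str,
            denom: str, amount: int) -> str:
    """Lock native tokens on src and mint an equal voucher on dst."""
    src.debit(sender, denom, amount)
    src.escrow[denom] += amount          # locked: no one can spend these
    voucher = f"{src.name}/{denom}"      # hypothetical voucher naming
    # In real IBC, dst mints only after verifying a relayed proof
    # against its light client of src; that step is elided here.
    dst.credit(receiver, voucher, amount)
    return voucher


def send_back(src: Chain, dst: Chain, sender: str, receiver: str,
              voucher: str, amount: int) -> None:
    """Burn the voucher on dst and release the escrowed tokens on src."""
    dst.debit(sender, voucher, amount)   # burn: voucher leaves circulation
    denom = voucher.split("/", 1)[1]
    if src.escrow[denom] < amount:
        raise ValueError("escrow underflow")
    src.escrow[denom] -= amount
    src.credit(receiver, denom, amount)


if __name__ == "__main__":
    a, b = Chain("chainA"), Chain("chainB")
    a.credit("alice", "ATOM", 10)
    v = send_to(a, b, "alice", "bob", "ATOM", 10)
    print(v, b.balances["bob"][v], a.escrow["ATOM"])   # chainA/ATOM 10 10
    send_back(a, b, "bob", "alice", v, 10)
    print(a.balances["alice"]["ATOM"], a.escrow["ATOM"])  # 10 0
```

The invariant worth noticing is that the voucher supply on Chain B always equals the amount held in escrow on Chain A.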

However, the biggest challenge of cross-chain communication is not how to represent the data from one chain on another chain, but how to handle situations such as chain forks and chain reorganizations.

Each chain in Cosmos is independent and autonomous, with its own dedicated validators, so malicious behavior can occur in any partition. For example, before Chain A sends a message to Chain B, it needs to check Chain B's validators in advance to decide whether to trust that chain.

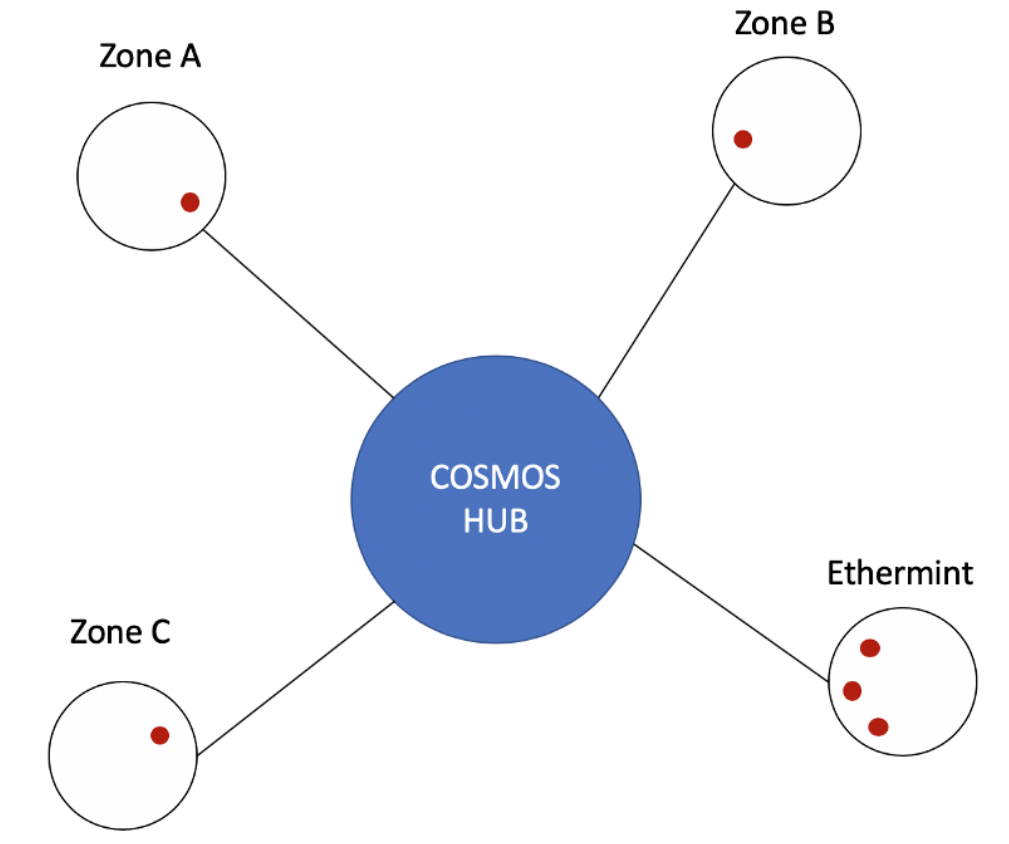

For example, suppose the red dots in the picture represent the Ethermint token (ETM), and users in partitions A, B, and C all want to use Evmos (built on Ethermint) to run Dapps in their own partitions. Through cross-chain asset transfers, they have all received ETM.

If the Ethermint partition launches a double-spend attack at this point, partitions A, B, and C will certainly be affected, but only to a limited extent, namely through the ETM they hold. The rest of the network, which has nothing to do with ETM, is unaffected. Cosmos guarantees this: even if such malicious messages are transmitted, they cannot compromise the entire network.

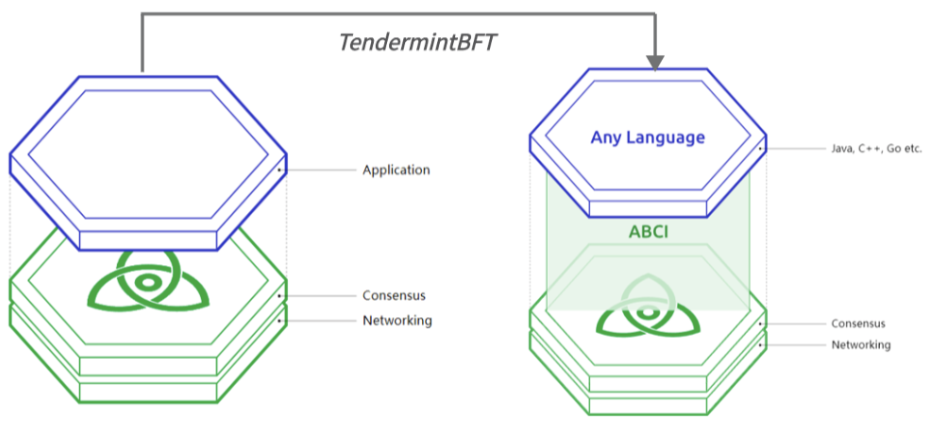

2.2 Tendermint BFT

Cosmos adopts Tendermint BFT as its underlying consensus algorithm and consensus engine. Tendermint packages a blockchain's underlying infrastructure (networking) and consensus layer into a general-purpose engine, and its ABCI (Application Blockchain Interface) lets applications written in any programming language plug into that engine. Developers can therefore choose whichever language they prefer.
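The split between consensus engine and application can be sketched as an interface. The method names below mirror classic ABCI calls (CheckTx, DeliverTx, Commit; newer CometBFT versions fold per-block execution into FinalizeBlock), but this is an in-process Python toy, not the real protocol, which runs over a socket or gRPC. `CounterApp` and `ToyEngine` are hypothetical, and consensus itself is elided: the engine simply puts the whole mempool into the next block.

```python
import hashlib
from typing import List, Protocol


class Application(Protocol):
    def check_tx(self, tx: bytes) -> bool: ...
    def deliver_tx(self, tx: bytes) -> None: ...
    def commit(self) -> bytes: ...


class CounterApp:
    """Example app: every valid tx is b"inc"; the state is a counter."""

    def __init__(self) -> None:
        self.count = 0

    def check_tx(self, tx: bytes) -> bool:
        # Mempool validation: only accept well-formed transactions.
        return tx == b"inc"

    def deliver_tx(self, tx: bytes) -> None:
        # Executed for each tx in a block the engine has agreed on.
        self.count += 1

    def commit(self) -> bytes:
        # Return an "app hash" summarising the new state.
        return hashlib.sha256(str(self.count).encode()).digest()


class ToyEngine:
    """Stands in for Tendermint: it orders txs and drives the app."""

    def __init__(self, app: Application) -> None:
        self.app = app
        self.mempool: List[bytes] = []

    def submit(self, tx: bytes) -> bool:
        if self.app.check_tx(tx):
            self.mempool.append(tx)
            return True
        return False

    def produce_block(self) -> bytes:
        # Consensus is elided: the engine takes the whole mempool as a block.
        block, self.mempool = self.mempool, []
        for tx in block:
            self.app.deliver_tx(tx)
        return self.app.commit()
```

Because `ToyEngine` depends only on those three methods, the application behind them could, in the real setting, be written in any language, which is the point of ABCI.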

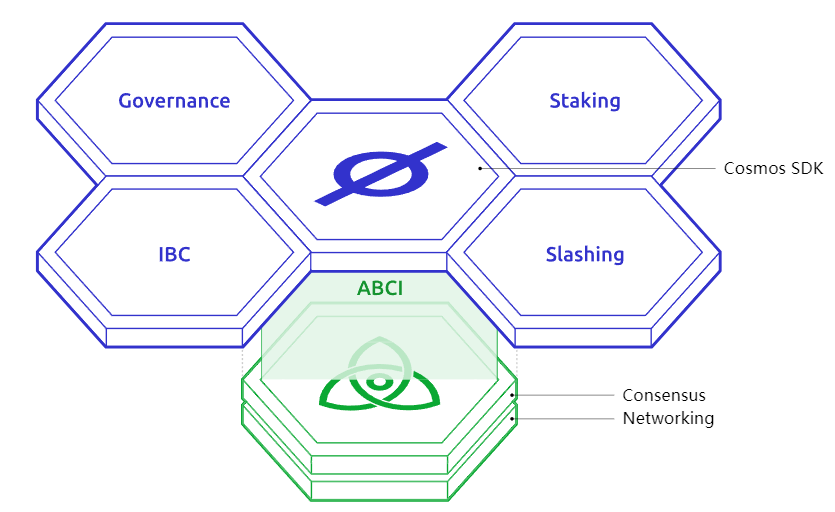

2.3 Cosmos SDK

The Cosmos SDK is a modular framework launched by Cosmos that simplifies building applications on top of the consensus layer. Developers can create specific applications or chains by reusing existing modules rather than rewriting each one, greatly reducing development effort. Developers can now also port applications deployed on the EVM to Cosmos.

Source: https://v1.cosmos.network/intro

In addition, blockchains built with Tendermint and the Cosmos SDK are creating new ecosystems and technologies that lead the industry, such as the privacy chain Nym and the data availability solution Celestia. Thanks to the flexibility and ease of use Cosmos provides, developers can focus on innovation instead of repetitive work.

2.4 Interchain Security & Account

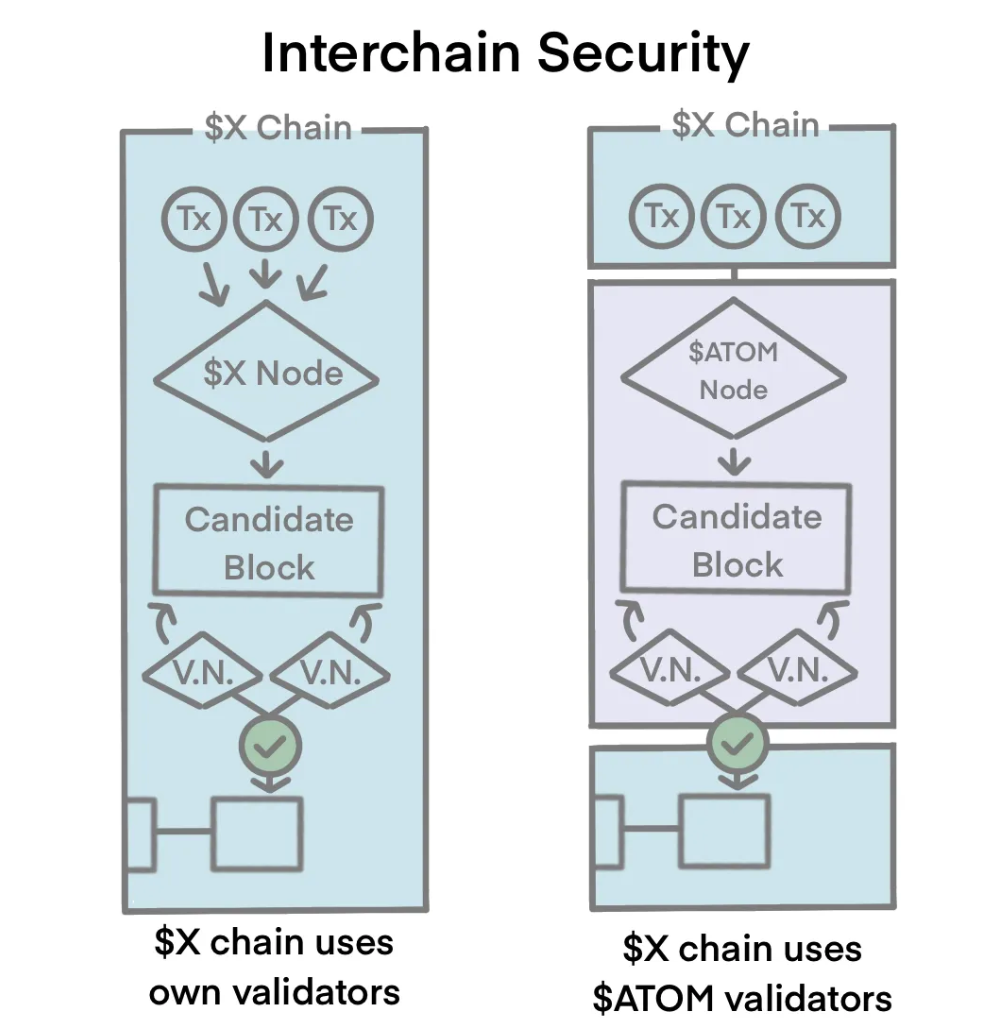

1) Interchain Security

Unlike the Ethereum ecosystem, which is layered into L1 and L2, application chains in the Cosmos ecosystem are peers, with no hierarchy among them. For the same reason, however, security across Cosmos chains is not as robust as in Ethereum. In Ethereum, the finality of L2 transactions is ultimately confirmed by Ethereum, so they inherit the security of the base layer. But how can a standalone blockchain that has to build its own security stay secure?

Cosmos introduces Interchain Security, which achieves shared security by sharing a large, existing validator set. For example, a standalone chain can share the Cosmos Hub's validators, which then produce new blocks for the standalone chain. Because these validators serve both the Cosmos Hub and the standalone chain, they can earn fees and rewards from both.

Source: https://medium.com/tokenomics-dao/token-use-cases-part-1-atom-a-true-staking-token-5fd21d41161e

As shown in the figure, without shared security, the transactions on X Chain are produced and verified by X's own nodes. If X shares nodes with the Cosmos Hub ($ATOM), the transactions on X Chain are verified and executed by the Hub's validators, which produce new blocks for X.

Ideally, a mature chain with many nodes, such as the Cosmos Hub, is the preferred provider of shared security: to attack such a chain, an attacker would need to acquire and stake a large amount of $ATOM, which makes an attack much harder.
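Why borrowing a large validator set helps can be sketched numerically. The model below rests on a simplifying assumption: an attacker needs strictly more than 1/3 of the staked voting power, enough to stall block production, and a consumer chain is secured by its provider's entire stake. The stake figures in the usage block are hypothetical round numbers, not real chain data.

```python
import math
from fractions import Fraction


def min_attack_stake(total_stake: int, threshold: Fraction = Fraction(1, 3)) -> int:
    """Smallest whole stake strictly greater than threshold * total_stake."""
    return math.floor(threshold * total_stake) + 1


def effective_stake(own_stake: int, provider_stake: int, consumer: bool) -> int:
    """A consumer chain is secured by its provider's validator set."""
    return provider_stake if consumer else own_stake


if __name__ == "__main__":
    # Hypothetical stake values in a common unit, for illustration only.
    new_chain, hub = 3_000_000, 150_000_000
    print(min_attack_stake(effective_stake(new_chain, hub, consumer=False)))  # 1000001
    print(min_attack_stake(effective_stake(new_chain, hub, consumer=True)))   # 50000001
```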

Not only that, the Interchain Security mechanism also greatly reduces the barriers to creating new chains. Generally speaking, if a new chain does not have particularly outstanding resources, it may take a lot of time to attract validators and cultivate an ecosystem. However, in Cosmos, because validators can be shared with the Hub chain, this greatly reduces the pressure on new chains and accelerates the development process.

2) Interchain Account

In the Cosmos ecosystem, each application chain governs itself, so applications on different chains cannot directly access each other. Cosmos therefore provides Interchain Accounts, which let users access any IBC-enabled Cosmos chain directly from the Cosmos Hub, so that a user on Chain A can use the applications on Chain B, achieving full cross-chain interaction.

II. Polkadot

Like Cosmos, Polkadot aims to build an infrastructure that allows for the free deployment of new chains and interoperability between chains.

1. Framework

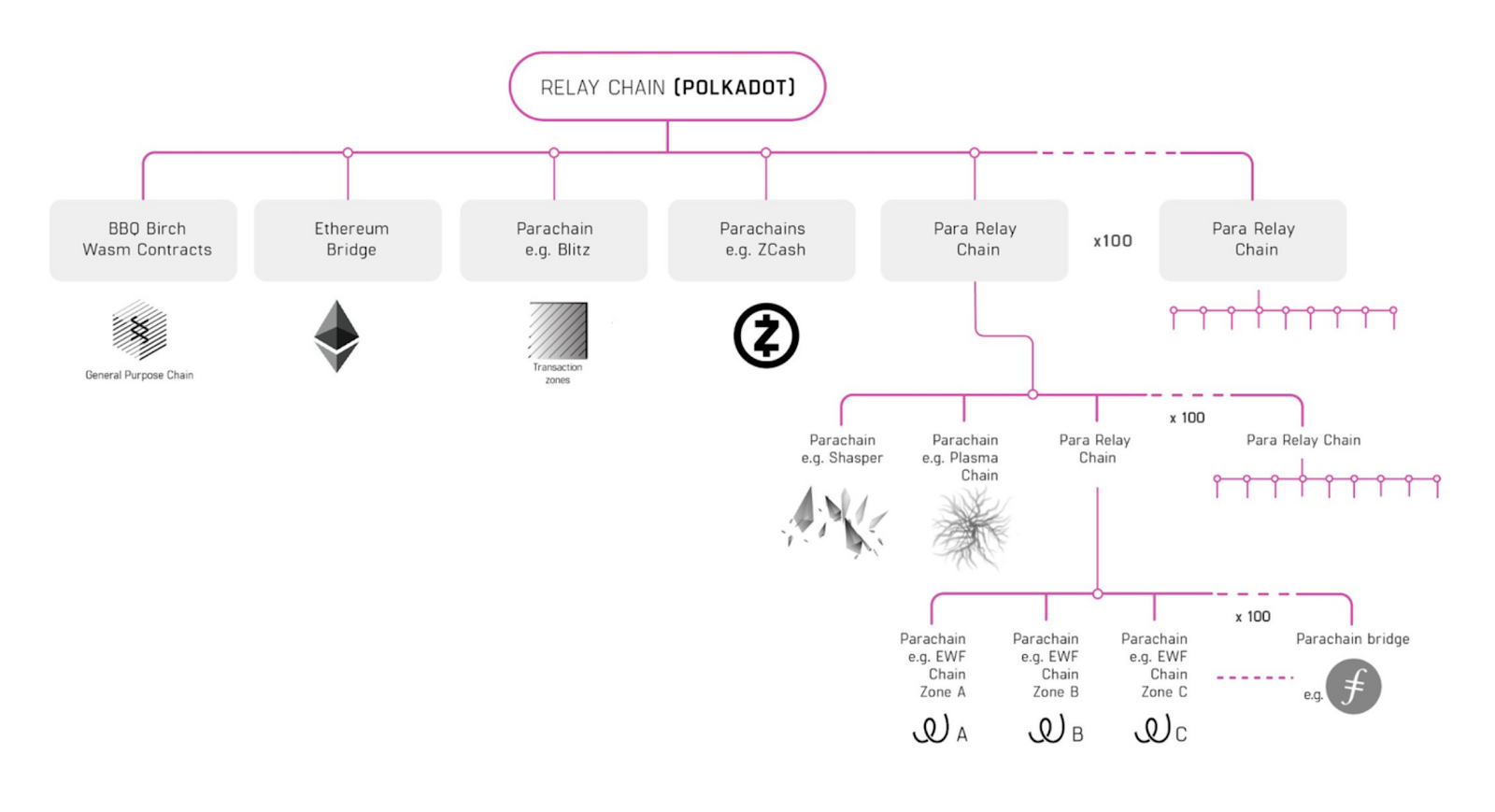

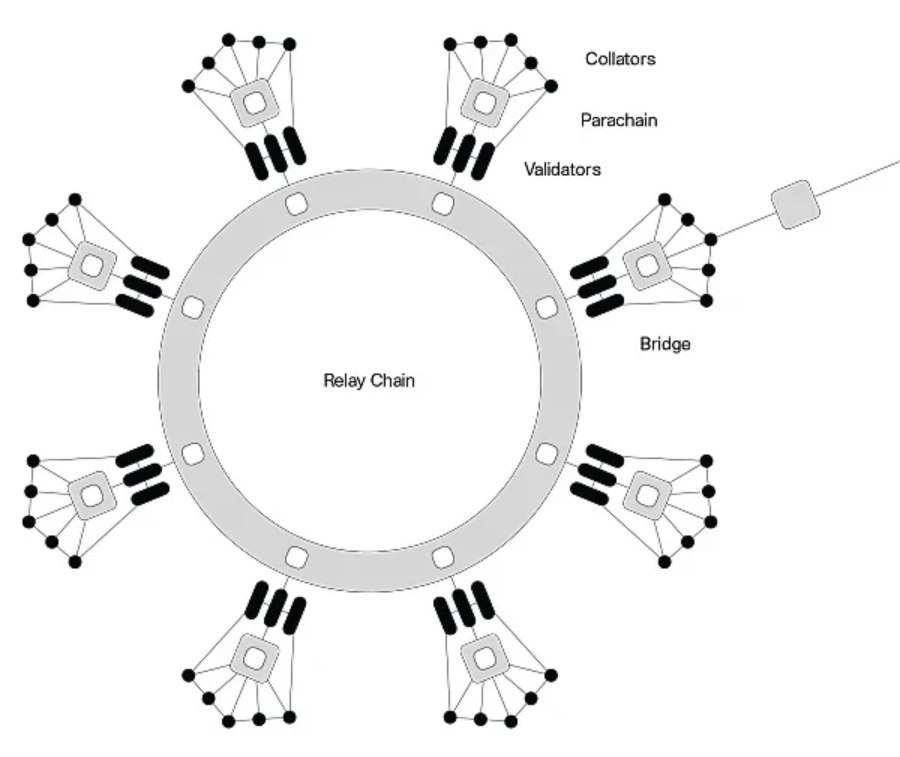

1.1 Relay Chain:

The relay chain, also known as the main chain, can be thought of as the sun of a solar system: it is the core of the entire network, and all parachains revolve around it. As shown in the picture, a relay chain links many chains with different functions, such as transaction chains, file storage chains, and IoT chains.

Source: https://medium.com/polkadot-network/polkadot-the-foundation-of-a-new-internet-e8800ec81c7

Polkadot's design also allows layered expansion, where one relay chain connects to another relay chain, enabling theoretically unlimited scalability. (Note: at the end of June this year, Polkadot founder Gavin Wood proposed Polkadot 2.0, which may change how we understand Polkadot.)

1.2 Parachains:

The relay chain has several parachain slots, and the parachains are connected to the relay chain through these slots, as shown in the picture:

Source: https://www.okx.com/cn/learn/slot-auction-cn

However, to obtain a slot, a parachain must lock up (stake) $DOT. Once it has a slot, the parachain can interact with the Polkadot mainnet through it and share its security. Note that the number of slots is limited and is being increased gradually: support for 100 slots is initially expected, and slots are periodically reallocated through the governance mechanism to keep the parachain ecosystem vibrant.

Parachains that win a slot enjoy the shared security and cross-chain liquidity of the Polkadot ecosystem. In return, they are expected to contribute to the Polkadot mainnet, for example by handling most of the network's transactions.

1.3 Parathreads:

Parathreads are a processing mechanism similar to parachains. The difference is that parachains have dedicated slots and run continuously, while parathreads share slots and take turns using them.

When a parathread gains the right to use a slot, it temporarily works like a parachain, processing transactions, producing blocks, and so on. When that period ends, it must release the slot for other parathreads.

Parathreads therefore do not require assets to be staked long-term; they only pay a fee each time they obtain a slot for a period. In other words, parathreads use slots on a pay-as-you-go basis. If a parathread gains enough support and votes, it can upgrade to a parachain and obtain a dedicated slot.

Compared with parachains, parathreads have lower costs and a lower barrier to entry into Polkadot. However, they cannot be sure when they will get to use a slot, which makes them less stable. They are therefore better suited to temporary use or to testing new chains; chains that need to run stably should still upgrade to parachains.
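The difference between dedicated and shared slots can be illustrated with a toy scheduler. Everything here is a hypothetical simplification: parathreads take turns on the shared slots in simple round-robin order and pay a flat fee per block, whereas Polkadot's actual scheduling and pricing are more involved.

```python
from collections import deque
from typing import Dict, List, Tuple


def run_rounds(parachains: List[str], parathreads: List[str],
               shared_slots: int, rounds: int,
               fee_per_block: int) -> Tuple[List[List[str]], Dict[str, int]]:
    """Return who produced a block in each round, and fees paid per parathread."""
    queue = deque(parathreads)               # parathreads wait their turn
    fees: Dict[str, int] = {t: 0 for t in parathreads}
    schedule: List[List[str]] = []
    for _ in range(rounds):
        producers = list(parachains)         # dedicated slots: always produce
        for _ in range(min(shared_slots, len(queue))):
            thread = queue.popleft()         # next parathread in line
            producers.append(thread)
            fees[thread] += fee_per_block    # pay-as-you-go
            queue.append(thread)             # back of the queue
        schedule.append(producers)
    return schedule, fees
```

With two parachains, three parathreads, and one shared slot, each parathread gets a block only every third round, while the parachains produce in every round.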

1.4 Bridge:

Parachains can communicate with each other through XCMP (explained later), and they share security and consensus. But what about heterogeneous chains?

Although the Substrate framework makes the chains that join the Polkadot ecosystem homogeneous, as the ecosystem evolves, some mature, widely used public chains will inevitably want to participate. It is not feasible for them to redeploy on Substrate. So how can messages be passed between heterogeneous chains?

Take an everyday example: to transfer files from an iPhone to an Android phone, you need an adapter because the two have different ports. This is exactly the role of a bridge. A bridge is a parachain that acts as an intermediary between the relay chain and a heterogeneous (external) chain. Smart contracts are deployed on both the bridge parachain and the heterogeneous chain, allowing the relay chain to interact with external chains and achieve cross-chain functionality.

2. Key Technologies

2.1 BABE & Grandpa

BABE (Blind Assignment for Blockchain Extension) is Polkadot's block production mechanism. Simply put, it randomly assigns validators to time slots, and only a validator assigned to a given slot can produce a block in that slot.

Additional information:

A time slot is a way of dividing the timeline in a block production mechanism: time is split into slots of fixed length, and each slot is an opportunity to produce one block.

Only the nodes assigned to a time slot can produce blocks in it.

In other words, each slot is an exclusive time window: in slot 1, the validator assigned to slot 1 is responsible for producing the block. Ideally each slot has exactly one assigned validator, but because the assignment is random, a slot can occasionally have more than one (or none), which is how competing blocks can appear.

The advantage is that random assignment maximizes fairness, since everyone has a chance to be selected. And because validators know their own slots in advance, they can prepare ahead of time rather than being caught off guard.

With this random block production method, Polkadot keeps its ecosystem running in an orderly and fair way. But how do all validators agree on which blocks to adopt? Next, we introduce another Polkadot mechanism: Grandpa.

Grandpa is a mechanism that finalizes blocks. It resolves the forks that can arise during BABE block production. For example, if BABE nodes 1 and 2 produce different blocks in the same time period, the chain forks. This is where Grandpa comes in: it asks all validators, "Which chain do you think is the right one?"

Validators evaluate the competing chains and vote for the one they consider best. Once a chain has received votes from more than 2/3 of the validators, Grandpa finalizes it, and the rejected fork is discarded.

Grandpa thus acts as the "grandfather" of all validators, the ultimate decision maker that eliminates the risk of forks left by BABE. It enables the blockchain to reach a consensus that everyone accepts.

In conclusion, BABE is responsible for random block production, while Grandpa is responsible for selecting the final chain. By working together, they ensure the secure operation of the Polkadot ecosystem.
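The division of labor between BABE and Grandpa can be sketched as two small functions. This is a simplification under stated assumptions: BABE actually uses a VRF (verifiable random function) so that each validator's slot assignment stays secret until used, and here a public SHA-256 hash stands in for it. The Grandpa part only counts votes for competing fork tips and finalizes one once it has support from more than 2/3 of validators, whereas the real protocol counts a vote for a block as a vote for all of its ancestors. Function names are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Dict, List, Optional


def slot_leaders(validators: List[str], slot: int, epoch_seed: bytes,
                 threshold: float) -> List[str]:
    """Validators whose per-slot lottery draw falls below `threshold`.

    Stand-in for BABE's VRF: a plain hash is publicly computable, whereas a
    real VRF output is private until the winner reveals it.
    """
    leaders = []
    for v in validators:
        digest = hashlib.sha256(epoch_seed + slot.to_bytes(8, "big") + v.encode()).digest()
        draw = int.from_bytes(digest[:6], "big") / 2**48   # uniform in [0, 1)
        if draw < threshold:
            leaders.append(v)
    return leaders   # may be empty, or contain several validators (a fork risk)


def grandpa_finalize(votes: Dict[str, str], n_validators: int) -> Optional[str]:
    """Return the fork tip backed by more than 2/3 of validators, if any."""
    for block, count in Counter(votes.values()).items():
        if 3 * count > 2 * n_validators:
            return block
    return None
```

When `slot_leaders` returns two names for the same slot, both may produce a block; `grandpa_finalize` then settles which fork survives, or returns `None` while no fork has a supermajority yet.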

2.2 Substrate

Substrate is a blockchain development framework written in Rust. Its FRAME library provides extensible building blocks (pallets) for a wide range of use cases. Any blockchain built with Substrate is natively compatible with Polkadot, so it can share security and run in parallel with other parachains, while developers remain free to build their own consensus mechanisms, governance models, and more to suit their specific needs.

Substrate also makes self-upgrades easy: the runtime (the chain's execution logic, compiled to Wasm and stored on chain) is a separate module that can be replaced while the chain is running. A parachain that shares consensus with the relay chain can therefore update its execution logic directly, without a hard fork, as long as it stays in sync with the relay chain's network and consensus.
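A forkless runtime upgrade can be modeled by keeping the state-transition function in chain state rather than in the node binary. In Substrate the runtime is a Wasm blob stored on chain and replaced through the System pallet's `set_code` call; in this hypothetical Python toy, the runtime is just a callable, and `Node.set_code` swaps it while keeping all existing state.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
Runtime = Callable[[State, str], None]


def runtime_v1(state: State, tx: str) -> None:
    """Original logic: only understands transfers."""
    if tx == "transfer":
        state["transfers"] = state.get("transfers", 0) + 1


def runtime_v2(state: State, tx: str) -> None:
    """Upgraded logic: keeps v1 behaviour and adds staking."""
    runtime_v1(state, tx)
    if tx == "stake":
        state["stakes"] = state.get("stakes", 0) + 1


class Node:
    def __init__(self, runtime: Runtime) -> None:
        self.state: State = {}
        self.runtime = runtime          # part of chain state, not of the node binary

    def apply_block(self, txs: List[str]) -> None:
        for tx in txs:
            self.runtime(self.state, tx)

    def set_code(self, new_runtime: Runtime) -> None:
        # Analogous to a governance-approved runtime upgrade: swap the logic,
        # keep the existing state, no hard fork or node restart needed.
        self.runtime = new_runtime
```

Before the upgrade a `"stake"` transaction is simply ignored; after `set_code(runtime_v2)` the same node processes it, and the earlier `"transfers"` count is untouched.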

2.3 XCM

If we were to explain XCM in a sentence, it would be: a cross-chain communication format that allows different blockchains to interact.

For example, Polkadot has many parachains. If parachain A wants to communicate with parachain B, it packages the information in XCM format. XCM is like a common language that every chain uses, enabling seamless interaction.

XCM format (Cross-Consensus Message Format) is the standard message format used for cross-chain communication in the Polkadot ecosystem, and it has derived three different ways of message delivery:

XCMP (Cross-Chain Message Passing): Under development. Messages can be sent directly between parachains or forwarded through the relay chain. Direct transmission is faster, while relay chain forwarding is more scalable but adds latency.

HRMP/XCMP-lite (Horizontal Relay-routed Message Passing): In use. It is a simplified stand-in for XCMP in which all messages are stored on the relay chain. It currently handles most cross-chain message passing.

VMP (Vertical Message Passing): In use. It passes messages vertically between the relay chain and parachains, upward from a parachain to the relay chain or downward from the relay chain to a parachain. Messages are stored on the relay chain and passed on after the relay chain interprets them.

For example, an XCM message contains various pieces of information, such as the amount of assets to transfer and the receiving account. When a message is sent, the HRMP channel or the relay chain transmits it in XCM format. The receiving parachain then checks that the format is correct, parses the message, and executes its instructions, for instance transferring assets to the specified account. This completes the cross-chain interaction between the two chains.
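The receive-side steps (check the format, parse, execute) can be sketched as a toy executor. The instruction names `WithdrawAsset`, `BuyExecution`, and `DepositAsset` are real XCM instructions, but everything else here is a simplified, hypothetical Python model: real XCM uses versioned, SCALE-encoded messages with rich location and asset types and a full XCM executor, and the `paraA` account stands in for a sending parachain's sovereign account.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class WithdrawAsset:
    asset: str
    amount: int


@dataclass
class BuyExecution:
    asset: str
    fee: int


@dataclass
class DepositAsset:
    asset: str
    beneficiary: str


Instruction = Union[WithdrawAsset, BuyExecution, DepositAsset]
KNOWN = (WithdrawAsset, BuyExecution, DepositAsset)


class ToyXcmExecutor:
    """Receiving-chain side: validate the message, then run it atomically."""

    def __init__(self, balances: Dict[Tuple[str, str], int]) -> None:
        self.balances = balances        # (account, asset) -> amount
        self.fees_collected = 0

    def execute(self, origin: str, message: List[Instruction]) -> None:
        # 1. Format check: reject anything this chain does not understand.
        for ins in message:
            if not isinstance(ins, KNOWN):
                raise ValueError(f"unsupported instruction: {ins!r}")
        # 2. Execute against a copy so a failure leaves no partial effects.
        balances = dict(self.balances)
        holding: Dict[str, int] = {}    # assets "in flight" during execution
        fees = 0
        for ins in message:
            if isinstance(ins, WithdrawAsset):
                key = (origin, ins.asset)
                if balances.get(key, 0) < ins.amount:
                    raise ValueError("insufficient funds")
                balances[key] -= ins.amount
                holding[ins.asset] = holding.get(ins.asset, 0) + ins.amount
            elif isinstance(ins, BuyExecution):
                if holding.get(ins.asset, 0) < ins.fee:
                    raise ValueError("cannot pay for execution")
                holding[ins.asset] -= ins.fee
                fees += ins.fee
            else:  # DepositAsset
                key = (ins.beneficiary, ins.asset)
                balances[key] = balances.get(key, 0) + holding.pop(ins.asset, 0)
        # 3. Commit only after every instruction succeeded.
        self.balances = balances
        self.fees_collected += fees
```

A transfer of 10 DOT with a 1 DOT execution fee is then the message `[WithdrawAsset("DOT", 10), BuyExecution("DOT", 1), DepositAsset("DOT", "bob")]`, which leaves 9 DOT with `bob`.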

Communication bridges like XCM are crucial for multi-chain ecosystems like Polkadot.

After understanding Cosmos and Polkadot, you should have some knowledge about their visions and frameworks. Next, we will explain in detail what the Stack solutions launched by ETH L2s are.

III. OP Stack

1. Structural Framework

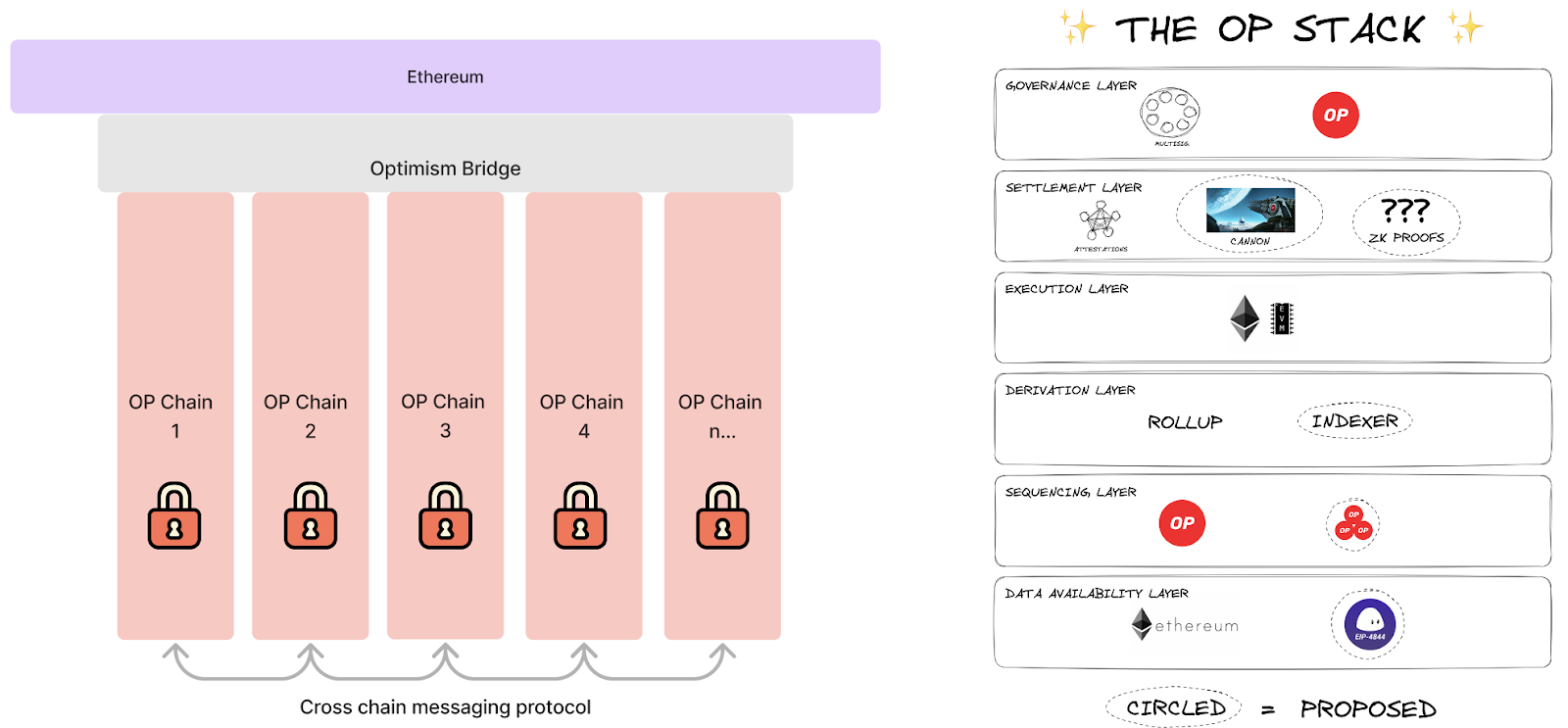

According to the official documentation, the OP Stack is a set of components maintained by the Optimism Collective. It first took shape as the software behind OP Mainnet and will eventually take the form of the Optimism Superchain and its governance. L2s built with the OP Stack can share security, a communication layer, and a common development stack, and developers are free to customize their chains for any specific blockchain use case.

The diagram shows that all chains in the OP Stack Superchain communicate through the OP Bridge and rely on Ethereum as the underlying secure consensus layer on which these L2 chains are built. Internally, each chain is divided into the following layers:

1) Data Availability Layer: An OP Stack chain retrieves its input data from its data availability module. Because every chain derives its data from this layer, it has a major impact on security: if a piece of data cannot be retrieved from it, the chain may not be able to sync.

In the diagram, the OP Stack uses Ethereum, including EIP-4844, as its data availability layer, so its data is ultimately published on Ethereum.

2) Ordering Layer: The sequencer determines how user transactions are collected and published to the data availability layer. By default, the OP Stack uses a single dedicated sequencer, but rules in the derivation layer restrict how long the sequencer can withhold transactions. In the future, the OP Stack plans to modularize the sequencer so that chains can easily change their sequencing mechanism.

The diagram shows both a single-sequencer and a multiple-sequencer option. With a single sequencer, one designated entity acts as the sequencer at any given time (a more centralized, higher-risk setup), while with multiple sequencers the sequencer is selected from a predefined set of possible participants. Each OP Stack chain that opts for multiple sequencers can define that set and the selection mechanism for itself.

3) Derivation Layer: This layer defines how raw data from the data availability layer is processed into inputs that are passed to the execution layer through the Ethereum Engine API. In the diagram, the OP Stack's derivation layer consists of the Rollup and Indexer modules.

4) Execution Layer: This layer defines the state structure within the OP Stack system. When the engine API receives input from the derivation layer, it triggers state transitions. From the diagram, it can be seen that under OP Stack, the execution layer is EVM. However, with slight modifications, it can also support other types of VMs. For example, the Pontem Network plans to develop a Move VM L2 using OP Stack.

5) Settlement Layer: As the name suggests, it handles withdrawals of assets from the chain. Such withdrawals require proving the target chain's state to a third-party chain, which then processes the assets based on that state. The key is to let the third-party chain understand the state of the target chain.

Once a transaction is published on the corresponding data availability layer and ultimately confirmed, the transaction is also finally confirmed on the OP Stack chain. Without breaking the underlying data availability layer, it is not possible to modify or delete the transaction. The transaction may not have been accepted by the settlement layer yet, as the settlement layer needs to verify the transaction result, but the transaction itself is already final on the data availability layer.

This design also accommodates heterogeneous chains. Because settlement mechanisms vary across chains, the settlement layer in the OP Stack is read-only, allowing other chains to make decisions based on the state of the OP Stack chain.

For this layer, the OP Stack uses the fraud proofs from OP Rollup: a proposer submits a claimed state, anyone can challenge it, and if it is not proven wrong within a set period, it is automatically considered correct.

6) Governance Layer: From the diagram, the OP Stack uses multisig plus $OP tokens for governance. Multisigs are typically used to manage upgrades to the Stack's system components, and an operation is executed once the required number of participants have signed. $OP holders can vote and take part in the governance of the community DAO.

The OP Stack can thus be seen as a combination of Cosmos and Polkadot: like Cosmos, it lets developers customize dedicated chains, and like Polkadot, those chains share security and consensus.

2. Key Technologies

2.1 OP Rollup

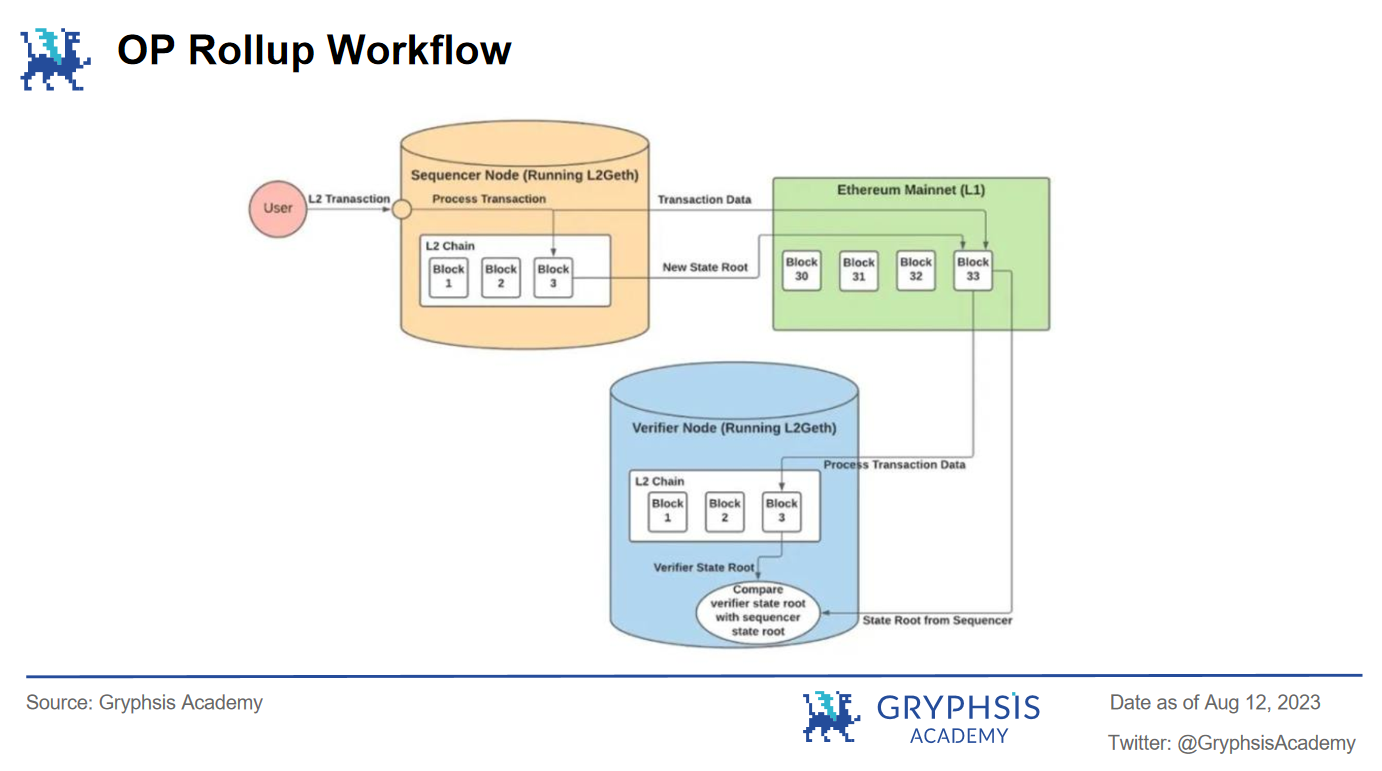

OP Rollup ensures security through challenges (fraud proofs) against the data and state it publishes to L1, and allows transactions to be executed in parallel. The specific steps are as follows:

1) Users initiate transactions on L2

2) The sequencer processes transactions in batches, then posts the transaction data and the resulting new state root to a smart contract deployed on L1. Note that this state root is computed by the sequencer itself while processing the transactions.

3) Once L1 has recorded the data and state root, the user's transaction is treated as processed on L2.

4) At this point, OP Rollup optimistically assumes that the sequencer's state root is correct. It also opens a time window during which validators can challenge whether that state root matches the one obtained by re-executing the transactions.

5) If no validator challenges it within the time window, the state root is automatically considered correct. If fraud is detected, the sequencer that submitted it is penalized accordingly.
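Steps 4 and 5 can be sketched as a toy contract with a challenge window. This is a hypothetical Python model, not OP's contracts: the "state root" is just a running sum so that anyone can re-execute a batch, only one proposal is pending at a time, time is measured in abstract units, and "slashing" is only a counter.

```python
from dataclasses import dataclass
from typing import List, Optional


def execute(prev_state: int, batch: List[int]) -> int:
    """Toy state transition: the 'state root' is just a running sum."""
    return prev_state + sum(batch)


@dataclass
class Proposal:
    prev_state: int
    batch: List[int]
    claimed_state: int      # the sequencer's claimed post-state
    submitted_at: int       # e.g. the L1 block number of submission


class ToyOptimisticRollup:
    def __init__(self, challenge_period: int) -> None:
        self.challenge_period = challenge_period
        self.finalized_state = 0
        self.pending: Optional[Proposal] = None
        self.slashed = 0

    def propose(self, batch: List[int], claimed_state: int, now: int) -> None:
        if self.pending is not None:
            raise RuntimeError("previous proposal still pending")
        self.pending = Proposal(self.finalized_state, batch, claimed_state, now)

    def challenge(self, now: int) -> bool:
        """Re-execute the batch; reject the proposal if the claim is wrong."""
        p = self.pending
        if p is None or now >= p.submitted_at + self.challenge_period:
            return False                      # nothing pending, or window closed
        if execute(p.prev_state, p.batch) == p.claimed_state:
            return False                      # claim was honest: challenge fails
        self.pending = None                   # discard the fraudulent proposal
        self.slashed += 1                     # penalize the sequencer
        return True

    def finalize(self, now: int) -> bool:
        """Accept the proposal once the window has passed unchallenged."""
        p = self.pending
        if p is None or now < p.submitted_at + self.challenge_period:
            return False
        self.finalized_state = p.claimed_state
        self.pending = None
        return True
```

The long wait that the next subsection complains about is visible here: `finalize` refuses to act until `challenge_period` has elapsed, however honest the proposal was.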

2.2 Cross-Chain Bridging

a) Intra-L2 Messaging

Because OP Rollup uses fraud proofs, transactions must wait for the challenge period to end, which takes a long time and degrades the user experience. ZKPs (zero-knowledge proofs), on the other hand, are costly and error-prone, and implementing batched ZKPs also takes time.

To solve communication between L2s and OP Chains, the OP Stack proposes modular proofs: the same chain can use two proof systems, and developers building with the Stack can freely choose the type of bridge.

Currently, the options provided by OP are:

High security, high latency fault proofs (the default, high-security bridge)

Low security, low latency fault proofs (a short challenge period for lower latency)

Low security, low latency validity proofs (trusted chain validators are used instead of ZKPs)

High security, low latency validity proofs (once ZKPs are ready)

Developers can choose the bridge that fits their chain's needs, for example a high-security bridge for high-value assets. This range of bridging options allows assets and data to move efficiently between chains.

b) Cross-chain transactions

Traditional cross-chain transactions are asynchronous, meaning a transaction may be only partially executed: one leg can succeed on one chain while the other fails.

The OP Stack proposes a shared sequencer to address this. For example, if a user wants to perform cross-chain arbitrage, Chain A and Chain B can share a sequencer that agrees on when both transactions are included, and the user pays fees only after the transactions are confirmed on both sides, with the risk shared between the two chains.
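The both-or-neither guarantee a shared sequencer could offer can be sketched as follows. Everything here is hypothetical: chain states are plain dictionaries, each leg is a function that raises `ValueError` when it would fail, and the sequencer simulates every leg on copies before committing any of them, charging the fee only on success.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, int]
Tx = Callable[[State], None]   # raises ValueError if the tx would fail


def debit(account: str, amount: int) -> Tx:
    def tx(state: State) -> None:
        if state.get(account, 0) < amount:
            raise ValueError("insufficient balance")
        state[account] -= amount
    return tx


def credit(account: str, amount: int) -> Tx:
    def tx(state: State) -> None:
        state[account] = state.get(account, 0) + amount
    return tx


class SharedSequencer:
    def __init__(self, chains: Dict[str, State]) -> None:
        self.chains = chains
        self.fees = 0

    def submit_bundle(self, legs: List[Tuple[str, Tx]], fee: int) -> bool:
        # Simulate every leg on a copy of its chain's state.
        drafts = {name: dict(state) for name, state in self.chains.items()}
        try:
            for chain, tx in legs:
                tx(drafts[chain])
        except ValueError:
            return False                          # one leg failed: include nothing, charge nothing
        for chain, _ in legs:
            self.chains[chain] = drafts[chain]    # commit all legs together
        self.fees += fee
        return True
```

An arbitrage bundle such as `[("A", debit("alice", 5)), ("B", credit("alice", 6))]` either lands on both chains or on neither; if Alice lacks funds on Chain A, Chain B is left untouched too.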

c) Superchain transactions

Because Ethereum L1's data availability capacity is limited, publishing all Superchain transaction data to L1 does not scale.

The OP Stack therefore proposes using the Plasma protocol as an alternative data availability (DA) layer to expand the amount of data accessible to OP chains. Transaction data availability is pushed down to the Plasma chain, while only data commitments are recorded on L1, greatly improving scalability.
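The commitment idea can be sketched with a hash: the full batch lives off-chain, and L1 stores only a fixed-size digest that anyone can check retrieved data against. This is a hypothetical Python model, not the OP Stack's Plasma design; SHA-256 stands in for whatever commitment scheme a real system uses. It also shows the trade-off: a commitment proves what the data was, but not that anyone will serve it.

```python
import hashlib
from typing import Dict, List


class ToyDAStore:
    """Off-chain store standing in for a Plasma-style DA chain (hypothetical)."""

    def __init__(self) -> None:
        self.blobs: Dict[bytes, bytes] = {}

    def put(self, data: bytes) -> bytes:
        commitment = hashlib.sha256(data).digest()   # 32 bytes regardless of data size
        self.blobs[commitment] = data
        return commitment

    def withhold(self, commitment: bytes) -> None:
        """Simulate an operator refusing to serve data."""
        self.blobs.pop(commitment, None)


class ToyL1Inbox:
    """Stands in for the L1 contract: it only records commitments."""

    def __init__(self) -> None:
        self.commitments: List[bytes] = []

    def post(self, commitment: bytes) -> None:
        self.commitments.append(commitment)


def fetch_batch(store: ToyDAStore, commitment: bytes) -> bytes:
    """Retrieve a batch and check it matches the commitment recorded on L1."""
    data = store.blobs.get(commitment)
    if data is None:
        raise LookupError("batch data unavailable")   # the core DA risk
    if hashlib.sha256(data).digest() != commitment:
        raise ValueError("data does not match commitment")
    return data
```

However large the batch, L1 only ever stores 32 bytes for it, which is where the scalability gain comes from.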

IV. ZK Stack

1. Structural Framework

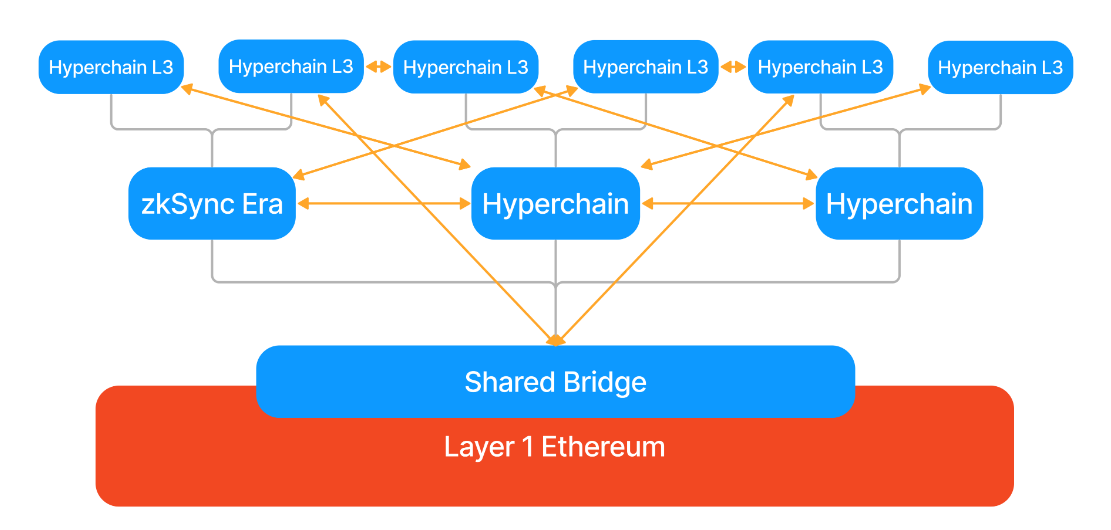

ZK Stack aims to provide an open-source, composable, modular codebase built on the same underlying technology (ZK Rollup) as zkSync Era, allowing developers to customize their own ZK-powered L2 and L3 superchains.

Since ZK Stack is free and open-source, developers have the freedom to customize superchains according to their specific needs. Whether it is choosing a second-layer network that runs parallel to zkSync Era or a third-layer network built on top of it, the possibilities for customization will be extensive.

According to Matter Labs, creators have complete autonomy to customize and shape every aspect of their chain, from choosing the data availability mode to using their project's own token for a decentralized sequencer.

These ZK Rollup superchains operate independently, but rely on Ethereum L1 for security and verification.

Source: zkSync documentation

The figure shows that each hyperchain must use zkSync L2's zkEVM engine to share security. Multiple ZKP chains run concurrently and aggregate their block proofs on the L1 settlement layer; like building blocks, this allows continuous expansion and the construction of more L3s, L4s, and so on.

2. Key Technologies

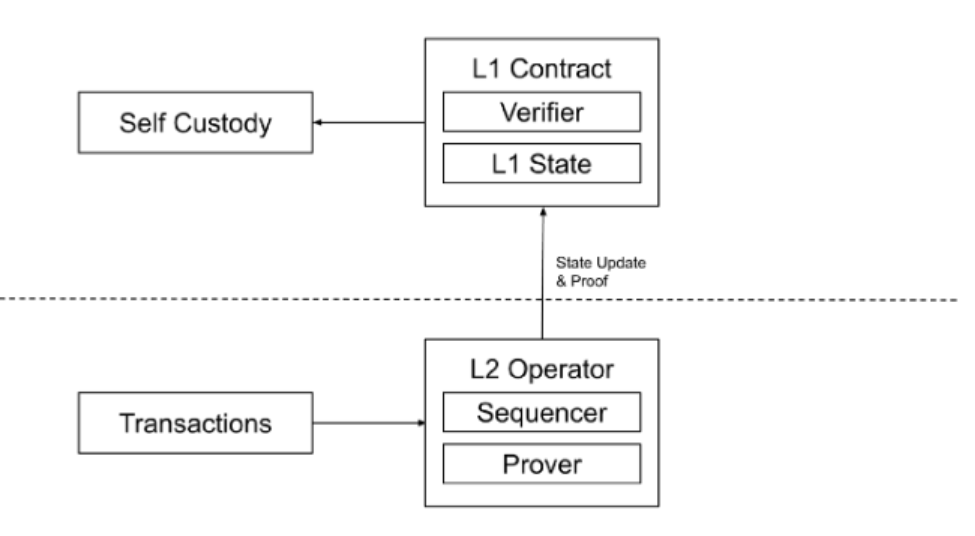

1) ZK Rollup

ZK Stack relies on ZK Rollup as the core technology, and the following are the main user processes:

Users submit transactions; the sequencer collects them into ordered batches, and validity proofs (STARK/SNARK) are generated for the resulting state updates. The updated state is then submitted to a smart contract deployed on L1 for verification. If verification passes, the asset state on L1 is updated as well. The advantage of ZK Rollup is that state transitions are validated mathematically with zero-knowledge proofs, which gives stronger security guarantees.

2) Cross-chain Bridge

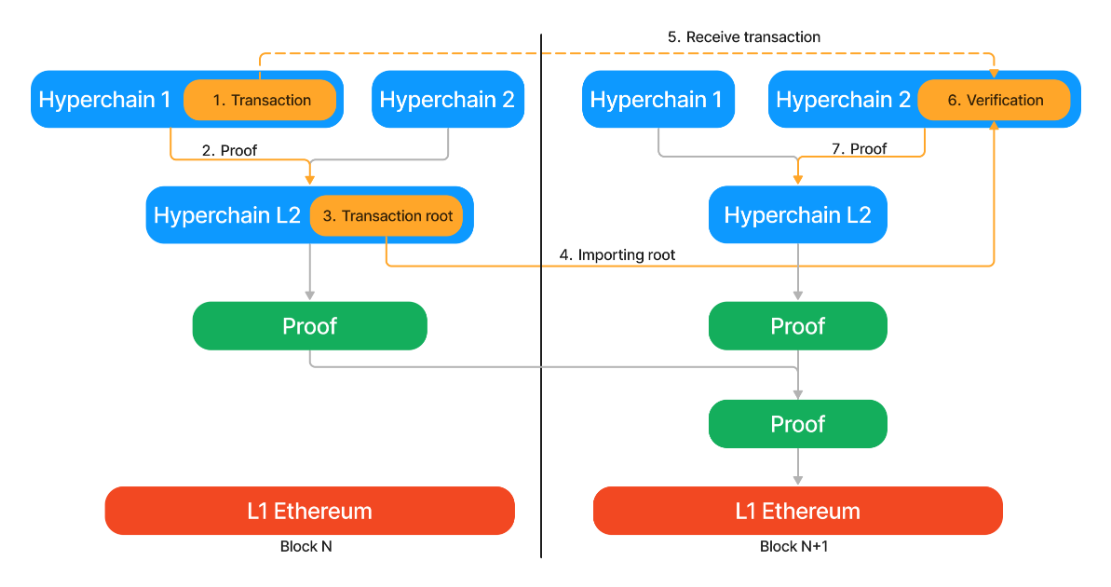

As the structure above shows, ZK Stack can in principle scale without limit, spawning L3s, L4s, and so on. How can these chains interoperate?

ZK Stack introduces cross-chain bridges by deploying shared bridge smart contracts on L1 to verify transactions occurring on the super-chains using Merkle proofs. It is essentially the same as ZK Rollup, except the transition is from L3 to L2 instead of L2 to L1.
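Merkle-proof verification, the primitive the shared bridge relies on, is easy to sketch: a contract that stores only a root can check that a given transaction is included by hashing it together with a short list of sibling hashes. This is a generic Python sketch, not zkSync's contract code; SHA-256, duplicating the last node on odd-sized levels, and the lack of leaf/node domain separation are simplifying assumptions.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(leaves: List[bytes]) -> List[List[bytes]]:
    """Return all levels, leaf hashes first; odd levels duplicate their last node."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_proof(levels: List[List[bytes]], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says the sibling is on the right."""
    proof = []
    for level in levels[:-1]:
        sib = index ^ 1
        sibling = level[sib] if sib < len(level) else level[index]  # duplicated node
        proof.append((sibling, sib > index))
        index //= 2
    return proof


def verify(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """What a bridge contract would run: rebuild the path and compare roots."""
    node = h(leaf)
    for sibling, is_right in proof:
        node = h(node + sibling) if is_right else h(sibling + node)
    return node == root
```

The proof grows with the logarithm of the number of transactions, so the verifier's work stays small even for large batches.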

ZK Stack allows smart contracts on different superchains to call each other asynchronously, so users can move assets between them quickly and trustlessly, within minutes, at no additional cost. For a message on superchain B to be processed by superchain A, superchain A must be finalized up to the earliest common ancestor of A and B. Because superchains can produce a block every second, in practice the cross-chain communication delay is only a matter of seconds, and it is also more cost-effective.

Source: https://era.zksync.io/docs/reference/concepts/hyperscaling.html#l3s
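The "nearest common ancestor" rule can be illustrated with a tree in which each chain points to the layer it settles on. This is a sketch: the chain names and topology are hypothetical, and a chain counts as its own ancestor (so an L4 settling directly on an L3 only needs that L3).

```python
def settlement_path(chain: str, parent: dict[str, str]) -> list[str]:
    """[chain, its settlement layer, ..., L1], following parent links."""
    path = [chain]
    while chain in parent:
        chain = parent[chain]
        path.append(chain)
    return path

def nearest_common_ancestor(a: str, b: str, parent: dict[str, str]) -> str:
    """Lowest layer both chains settle on: how far a message must be finalized."""
    ancestors_of_a = set(settlement_path(a, parent))
    for node in settlement_path(b, parent):
        if node in ancestors_of_a:
            return node
    raise ValueError("chains do not share a settlement layer")

# Hypothetical topology: two L3s on one L2, and an L4 on top of the first L3.
parent = {"L2-era": "L1", "L3-A": "L2-era", "L3-B": "L2-era", "L4-C": "L3-A"}
```

For example, a message between `L4-C` and `L3-B` only has to be finalized on `L2-era`, not on L1, which is why latency can be far below L1 finality.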

In addition, L3 proofs can be compressed and packaged, and L2 can aggregate them further into its own proof (recursive compression). This yields a higher compression ratio and lower costs, achieving cross-chain interoperability that is trustless, fast (within minutes), and cheap (the cost of a single transaction).
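Why recursion lowers costs can be shown with simple arithmetic. If L1 verifies every batch proof separately, verification gas scales with the number of batches. If the proofs are folded into one recursive proof first, L1 pays for a single verification. The 300,000-gas verification cost below is a hypothetical placeholder, not a measured zkSync figure. The model also ignores off-chain proving cost and data costs.

```python
def l1_verify_gas_per_tx(verify_gas: int, batches: int, txs_per_batch: int,
                         recursive: bool) -> float:
    """L1 proof-verification gas per transaction.

    Without recursion every batch proof is verified on L1; with recursion the batch
    proofs are folded into one proof first, so L1 verifies a single proof.
    """
    proofs_verified_on_l1 = 1 if recursive else batches
    return proofs_verified_on_l1 * verify_gas / (batches * txs_per_batch)

VERIFY_GAS = 300_000  # hypothetical cost of one on-chain proof verification
flat = l1_verify_gas_per_tx(VERIFY_GAS, batches=100, txs_per_batch=1_000, recursive=False)
folded = l1_verify_gas_per_tx(VERIFY_GAS, batches=100, txs_per_batch=1_000, recursive=True)
```

With 100 batches of 1,000 transactions, per-transaction verification gas drops by a factor equal to the number of folded batches (here from 300 to 3).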

V. Polygon 2.0

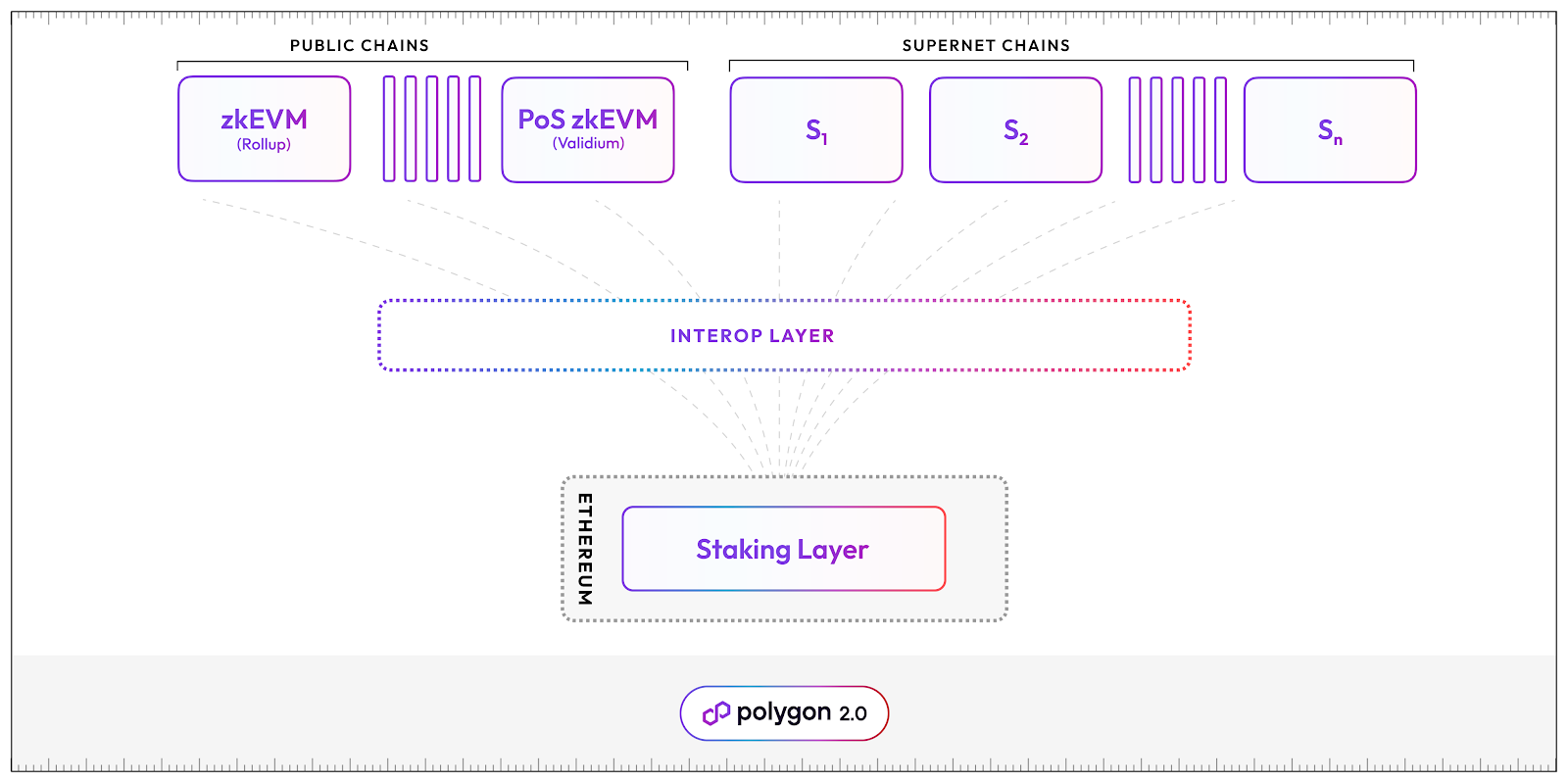

Polygon is an unusual L2 solution: technically, Polygon PoS is an L1 that serves as an Ethereum sidechain. The Polygon team recently announced the Polygon 2.0 plan, which lets developers create their own ZK-powered L2 chains, unified through a novel cross-chain coordination protocol so that the whole network feels like a single chain.

Polygon 2.0 aims to support an unlimited number of chains, enabling secure and immediate cross-chain interactions without additional security or trust assumptions, thereby achieving unlimited scalability and unified liquidity.

1. Structural framework

Source: Polygon Blog

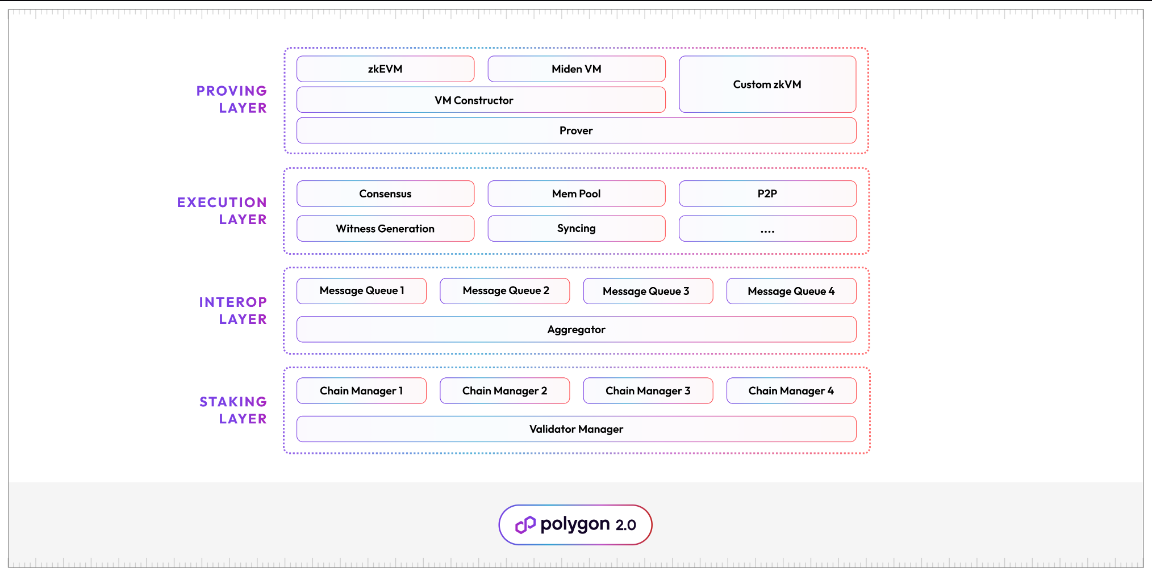

Polygon 2.0 consists of 4 protocol layers:

1) Staking Layer

The Staking Layer is a PoS-based protocol that uses staked $MATIC to enable decentralized governance, efficient validator management, and improved validator efficiency.

As shown in the diagram, the Staking Layer of Polygon 2.0 introduces Validator Manager and Chain Manager.

Validator Manager: A shared pool of validators for all Polygon 2.0 chains. It handles validator registration, staking requests, unstaking requests, and so on; think of it as the administrative department for validators.

Chain Manager: Manages the validator set of each Polygon 2.0 chain. Unlike the Validator Manager, a Chain Manager is specific to its chain and focuses on validation management: the number of validators (which determines the chain's level of decentralization), any additional validator requirements, and other conditions.

The Staking Layer defines the underlying architecture and rules for every chain, so developers can focus on building their own chains.
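The split between one shared Validator Manager and per-chain Chain Managers can be sketched as two small classes. This is a hypothetical model. The class names follow the text, but the fields and the selection rule (a minimum stake, then the highest stakes up to a per-chain cap) are assumptions, not Polygon's contracts.

```python
from dataclasses import dataclass, field

@dataclass
class ValidatorManager:
    """Shared pool for all chains: registration, staking and unstaking (hypothetical model)."""
    stakes: dict[str, int] = field(default_factory=dict)

    def register(self, validator: str, amount: int) -> None:
        self.stakes[validator] = self.stakes.get(validator, 0) + amount

    def unstake(self, validator: str, amount: int) -> None:
        if self.stakes.get(validator, 0) < amount:
            raise ValueError("cannot unstake more than is staked")
        self.stakes[validator] -= amount

@dataclass
class ChainManager:
    """Per-chain rules: how many validators the chain wants and what each must meet."""
    chain_id: str
    max_validators: int  # larger sets mean more decentralization
    min_stake: int       # an example of an additional validator requirement

    def select(self, pool: ValidatorManager) -> list[str]:
        eligible = [v for v, s in pool.stakes.items() if s >= self.min_stake]
        eligible.sort(key=lambda v: (-pool.stakes[v], v))  # highest stake first, ties by name
        return eligible[: self.max_validators]

pool = ValidatorManager()
for name, amount in [("a", 100), ("b", 50), ("c", 10)]:
    pool.register(name, amount)
game_chain = ChainManager("game-chain", max_validators=2, min_stake=20)
defi_chain = ChainManager("defi-chain", max_validators=3, min_stake=60)
```

Both chains draw from the same pool, yet each applies its own requirements. This is the division of labor the text describes.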

Source: Polygon Blog

2) Interoperability Layer

Cross-chain protocols are crucial to the interoperability of the whole network. Transferring cross-chain messages securely and seamlessly is a problem that every multi-chain solution must keep improving on.

Currently, Polygon uses two types of contracts for support: the Aggregator and the Message Queue.

Message Queue: This has been revamped and upgraded specifically for the existing Polygon zkEVM protocol. Each Polygon chain maintains a local message queue in a fixed format, and these messages are included in the ZK proofs generated by that chain. Once the ZK proofs are verified on Ethereum, any message from that queue can be safely used by the receiving chain and address.

Aggregator: The purpose of the aggregator is to provide more efficient services between Polygon chains and Ethereum. For example, multiple ZK proofs can be aggregated into one ZK proof and submitted to Ethereum for verification, reducing storage costs and improving performance.

Once the ZK proofs are accepted by the aggregator, the receiving chain can start optimistically accepting messages, as the receiving chain trusts the ZK proofs. This enables seamless message transfer, among other things.
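The Message Queue and Aggregator roles can be sketched together. In this model, each chain commits to its local queue, the aggregator records the commitments covered by accepted proofs, and a receiving chain optimistically delivers messages once the aggregator holds a matching commitment. Several pieces are hypothetical stand-ins:

- The length-prefixed message format.
- The `proof_ok` flag, which replaces real ZK verification.
- The hash that stands in for the aggregated proof.
- The addresses.

None of these are Polygon's actual contract interfaces.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class Message:
    """A cross-chain message in a fixed, length-prefixed format (illustrative, not Polygon's)."""
    source_chain: str
    dest_chain: str
    dest_address: str
    payload: bytes

    def encode(self) -> bytes:
        parts = [self.source_chain.encode(), self.dest_chain.encode(),
                 self.dest_address.encode(), self.payload]
        return b"".join(len(p).to_bytes(4, "big") + p for p in parts)

def queue_commitment(messages: list[Message]) -> str:
    """Commitment to a chain's local queue; assumed to be a public input of its ZK proof."""
    digest = hashlib.sha256()
    for m in messages:
        digest.update(hashlib.sha256(m.encode()).digest())
    return digest.hexdigest()

class Aggregator:
    """Collects per-chain proofs; one aggregated proof would then go to Ethereum."""
    def __init__(self) -> None:
        self.accepted: dict[str, str] = {}  # chain -> queue commitment covered by its proof

    def accept(self, chain: str, commitment: str, proof_ok: bool) -> bool:
        if not proof_ok:  # stand-in for verifying the chain's ZK proof
            return False
        self.accepted[chain] = commitment
        return True

    def aggregate(self) -> tuple[str, int]:
        """A digest standing in for the aggregated proof, and how many chain proofs it covers."""
        digest = hashlib.sha256()
        for chain in sorted(self.accepted):
            digest.update(chain.encode() + bytes.fromhex(self.accepted[chain]))
        return digest.hexdigest(), len(self.accepted)

def receive(aggregator: Aggregator, messages: list[Message], my_chain: str) -> list[Message]:
    """Receiving chain: optimistically accept once an accepted proof covers these messages."""
    if not messages:
        return []
    source = messages[0].source_chain
    if aggregator.accepted.get(source) != queue_commitment(messages):
        raise PermissionError("source queue not covered by an accepted proof")
    return [m for m in messages if m.dest_chain == my_chain]

msgs = [Message("zkEVM", "chainB", "0xabc", b"mint 5"),
        Message("zkEVM", "chainC", "0xdef", b"ping")]
agg = Aggregator()
agg.accept("zkEVM", queue_commitment(msgs), proof_ok=True)
delivered = receive(agg, msgs, "chainB")
```

The receiving chain does not wait for Ethereum to verify the aggregated proof. It only checks that the messages it was handed match a commitment the aggregator has already accepted.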

3) Execution Layer

The execution layer allows any Polygon chain to produce batches of ordered transactions, also known as blocks. Most blockchain networks (such as Ethereum and Bitcoin) use a similar format.

The execution layer consists of multiple components, such as:

Consensus: Allows validators to reach consensus.

Mempool: Collects transactions submitted by users and synchronizes them among validators. Users can also check their transaction status in the mempool.

P2P: Enables validators and full nodes to discover each other and exchange messages.

...

Because this layer is largely commoditized yet complex to implement, existing high-performance implementations (such as Erigon) should be reused wherever possible.
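As a small illustration of the mempool's role (collect transactions, track their status, hand an ordered batch to block production), here is a priority-queue sketch. The fee-first, then arrival-order policy is an assumption for illustration; the source does not state Polygon's ordering rule. As noted above, a production chain would reuse a client such as Erigon instead.

```python
import heapq

class Mempool:
    """Pending transactions ordered by fee (highest first), then by arrival order."""
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self.status: dict[str, str] = {}  # lets users check where their transaction is

    def submit(self, tx_id: str, fee: int) -> None:
        if tx_id in self.status:
            return  # ignore duplicates
        heapq.heappush(self._heap, (-fee, len(self.status), tx_id))
        self.status[tx_id] = "pending"

    def build_block(self, max_txs: int) -> list[str]:
        """Pop up to max_txs transactions into an ordered batch, i.e. a block."""
        block = []
        while self._heap and len(block) < max_txs:
            _, _, tx_id = heapq.heappop(self._heap)
            block.append(tx_id)
            self.status[tx_id] = "included"
        return block

pool = Mempool()
for tx_id, fee in [("a", 5), ("b", 9), ("c", 5), ("d", 1)]:
    pool.submit(tx_id, fee)
block = pool.build_block(max_txs=3)
```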

4) Proof Layer

The proof layer generates proofs for every Polygon chain using a high-performance, flexible ZK proof protocol, which typically consists of the following components:

Common Prover: A high-performance ZK prover that provides a clean interface designed to support arbitrary transaction types, i.e., state machine formats.

State Machine Constructor: A framework for defining the state machine used to construct the initial Polygon zkEVM. This framework abstracts the complexity of the proof mechanism and provides a simplified, modular interface that allows developers to customize parameters and build their own large-scale state machine.

State Machine: A simulation of the execution environment and transaction format being proven by the prover. The state machine can be implemented using the aforementioned constructor or fully customized, e.g., using Rust.
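The idea of a Common Prover that accepts "arbitrary transaction types, i.e., state machine formats" can be sketched as an abstract interface. Any execution environment that implements it can be fed to the same prover. This is a hypothetical sketch. The "prover" here only hashes the execution trace where a real prover would output a ZK proof, and the interface names are not Polygon's.

```python
import hashlib
import json
from abc import ABC, abstractmethod

class StateMachine(ABC):
    """Hypothetical interface: any execution environment the common prover can handle."""
    @abstractmethod
    def initial_state(self) -> dict: ...

    @abstractmethod
    def transition(self, state: dict, tx: dict) -> dict: ...

class CounterMachine(StateMachine):
    def initial_state(self) -> dict:
        return {"count": 0}

    def transition(self, state: dict, tx: dict) -> dict:
        return {"count": state["count"] + tx["inc"]}

class KeyValueMachine(StateMachine):
    def initial_state(self) -> dict:
        return {}

    def transition(self, state: dict, tx: dict) -> dict:
        new = dict(state)
        new[tx["key"]] = tx["value"]
        return new

def common_prover(machine: StateMachine, txs: list[dict]) -> tuple[dict, str]:
    """Stand-in prover: runs any StateMachine and commits to its execution trace.
    A real prover would output a ZK proof of this trace instead of a hash."""
    state = machine.initial_state()
    trace = [state]
    for tx in txs:
        state = machine.transition(state, tx)
        trace.append(state)
    return state, hashlib.sha256(json.dumps(trace, sort_keys=True).encode()).hexdigest()
```

The design point is that the prover never inspects transaction formats itself. Customizing a chain means writing a new `StateMachine`, not a new prover.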

2. Key Technologies

Source: Polygon Blog

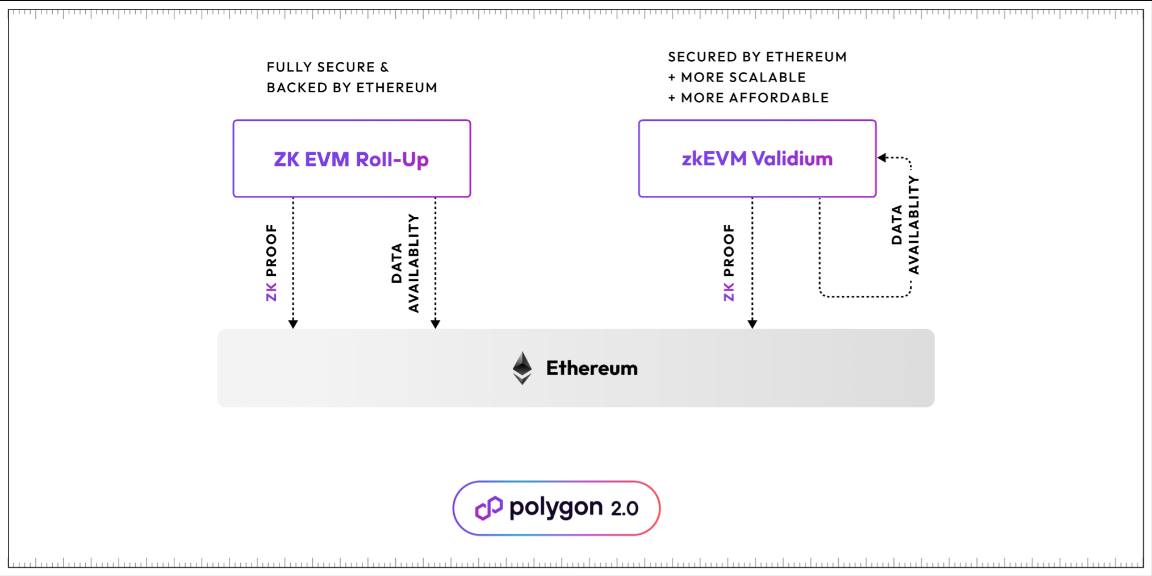

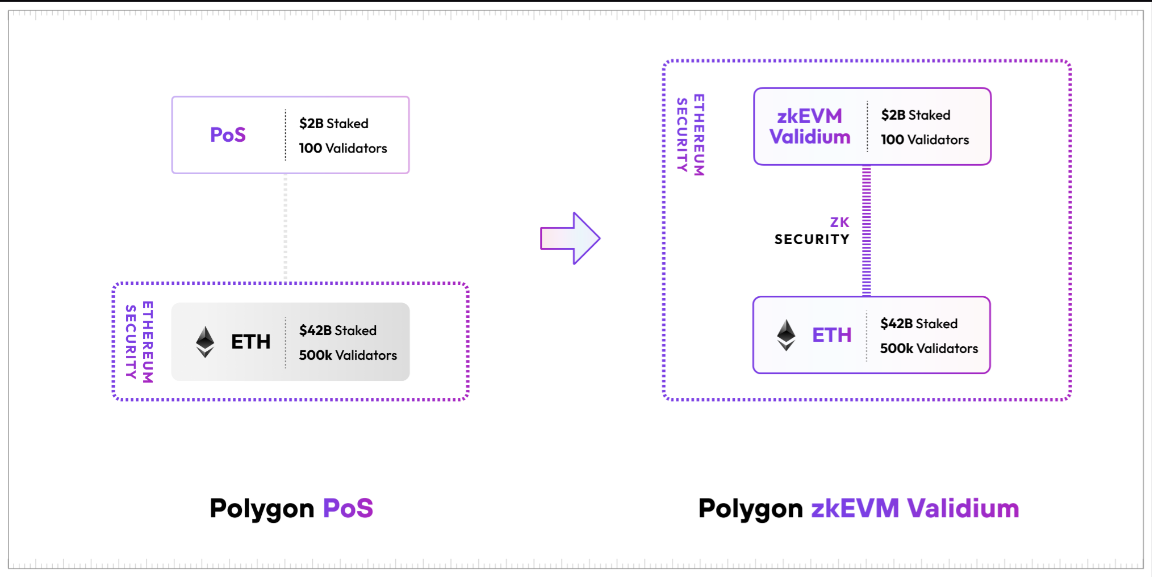

1) zkEVM validium

In the Polygon 2.0 update, the team keeps the original Polygon PoS chain and upgrades it to a zkEVM Validium.

Source: Polygon Blog

Here is a brief introduction. Validium and Rollup are both Layer 2 solutions designed to increase Ethereum's transaction capacity and reduce transaction time. They differ as follows:

Rollup bundles multiple transactions together and submits them to the Ethereum main chain as a batch, utilizing Ethereum to publish transaction data and verify proofs, hence inheriting its unparalleled security and decentralization. However, publishing transaction data to Ethereum is costly and limits throughput.

Validium, on the other hand, does not submit all transaction data to the main chain. It uses zero-knowledge proofs (ZKP) to prove the validity of transactions while keeping the transaction data off-chain, which also gives users some privacy. However, Validium requires trusting the parties that hold the off-chain data to keep it available, which makes it relatively more centralized.
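The cost difference comes from what each design writes to L1. The sketch below prices posted data as calldata using the EIP-2028 rates (16 gas per non-zero byte, 4 per zero byte). Several inputs are hypothetical placeholders chosen only to show the shape of the comparison, not Polygon figures:

- The proof-verification cost.
- The bytes per transaction.
- The worst-case assumption that every byte is non-zero.

The model also ignores base transaction gas and EIP-4844 blobs.

```python
CALLDATA_GAS_NONZERO = 16  # EIP-2028 price per non-zero calldata byte
CALLDATA_GAS_ZERO = 4      # EIP-2028 price per zero calldata byte

def calldata_gas(data: bytes) -> int:
    return sum(CALLDATA_GAS_NONZERO if b else CALLDATA_GAS_ZERO for b in data)

def l1_gas_per_tx(n_txs: int, tx_data_bytes: int, verify_gas: int, mode: str) -> float:
    """L1 gas per transaction for one proven batch under a simple calldata model."""
    if mode == "rollup":
        posted = bytes([1]) * (n_txs * tx_data_bytes)  # all batch data goes to L1
    elif mode == "validium":
        posted = bytes([1]) * 32  # only a 32-byte data commitment; data stays off-chain
    else:
        raise ValueError(f"unknown mode: {mode}")
    return (verify_gas + calldata_gas(posted)) / n_txs

VERIFY_GAS = 300_000  # hypothetical proof-verification cost
rollup = l1_gas_per_tx(1_000, 100, VERIFY_GAS, "rollup")
validium = l1_gas_per_tx(1_000, 100, VERIFY_GAS, "validium")
```

Under these assumptions, data posting dominates the Rollup's cost, while the Validium pays almost only for proof verification. The savings are bought with the data-availability trust assumption described above.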

Validium can be understood as a lower-cost, more scalable version of Rollup. Before the upgrade, however, Polygon zkEVM operated as a ZK Rollup and achieved remarkable results: within just four months of launch, its Total Value Locked (TVL) surged to $33 million.

Source: DefiLlama

For a chain at Polygon PoS's scale, the cost of generating zkEVM proofs could become a scalability bottleneck in the long term. The Polygon team has been working to reduce batch costs and has already lowered the cost of proving 10 million transactions to $0.0259. Even so, Validium is cheaper still, which makes it the natural choice.

Polygon has already published documentation stating that in future versions, zkEVM Validium will take over the role of the current PoS chain while PoS itself is retained. The main job of the PoS validators will be to ensure data availability and order transactions.

The upgraded zkEVM Validium will provide high scalability and low fees, making it particularly suitable for high-volume, fee-sensitive applications such as Gamefi, Socialfi, and DeFi. Developers need to take no additional action; following the mainnet updates is enough to complete the Validium upgrade.

2) zkEVM rollup

Currently, Polygon PoS (soon to be upgraded to Polygon Validium) and Polygon zkEVM Rollup are the two public networks in the Polygon ecosystem, and this will remain the case after the upgrade. Both networks use cutting-edge zkEVM technology, with one serving as an aggregator and the other as a verifier, which brings additional benefits.

Polygon zkEVM Rollup already offers the highest level of security, at the cost of slightly higher fees and limited throughput. This makes it well suited to applications that prioritize security and high-value transactions, such as high-value DeFi Dapps.

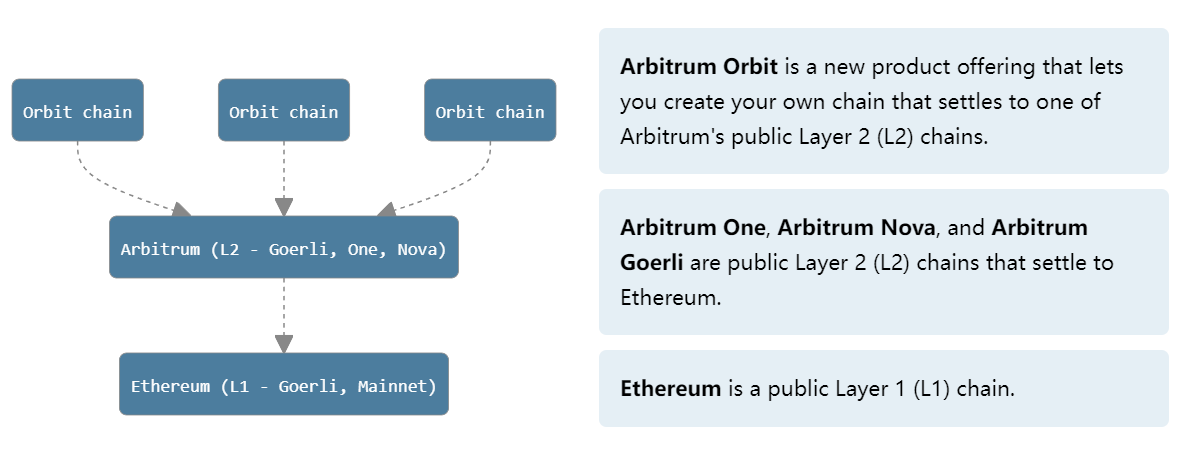

VI. Arbitrum Orbit

Arbitrum, currently the leading L2 public chain, has grown its TVL past $5.1 billion since launching in August 2021, giving it nearly 54% of the L2 market. Arbitrum released Orbit in March this year, after launching a series of ecosystem products:

Arbitrum One: The first and core mainnet Rollup of the Arbitrum ecosystem.

Arbitrum Nova: The second mainnet Rollup of Arbitrum, designed for cost-sensitive projects that require high transaction volume.

Arbitrum Nitro: The technical software stack that powers Arbitrum L2, making Rollups faster, cheaper, and more EVM-compatible.

Arbitrum Orbit: A development framework for creating and deploying L3 on top of the Arbitrum mainnet.

Today we will focus on Arbitrum Orbit.

1. Structure Framework

Developers who want to use Arbitrum Orbit to launch a new L2 network must submit a proposal to the Arbitrum DAO, and the new L2 chain is created only if the vote passes. Building L3s (and L4s, L5s, and so on) on top of Arbitrum L2, however, requires no permission: Orbit provides a permissionless framework that anyone can use to deploy custom chains on Arbitrum L2.

Source: Whitepaper

As shown, Arbitrum Orbit lets developers customize their own Orbit L3 chain on top of L2 chains such as Arbitrum One, Arbitrum Nova, or Arbitrum Goerli. Developers can customize the chain's privacy protocols, licenses, token economics, community management, and more, giving them maximum autonomy.

Notably, Orbit allows an L3 chain to use its own token as the unit of settlement, which helps the chain build out its own network economy.
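The customization points above (parent chain, data availability mode, native settlement token) can be summarized as a configuration record. This is a hypothetical sketch: the field names and the validation rule are illustrative only and are not the Orbit SDK's actual configuration schema. The allowed parent chains are simply the ones the text lists.

```python
from dataclasses import dataclass
from enum import Enum

class DataAvailability(Enum):
    ROLLUP = "rollup"      # batch data posted to the parent chain (Arbitrum One style)
    ANYTRUST = "anytrust"  # data held by a committee (Arbitrum Nova style)

PARENT_CHAINS = {"Arbitrum One", "Arbitrum Nova", "Arbitrum Goerli"}

@dataclass(frozen=True)
class OrbitChainConfig:
    """Hypothetical description of an Orbit L3; field names are NOT the Orbit SDK's."""
    name: str
    parent_chain: str
    data_availability: DataAvailability
    native_token: str = "ETH"  # token used to pay fees and settle on this chain

    def __post_init__(self) -> None:
        if self.parent_chain not in PARENT_CHAINS:
            raise ValueError(f"unsupported parent chain: {self.parent_chain}")

game_l3 = OrbitChainConfig("my-game-l3", "Arbitrum Nova", DataAvailability.ANYTRUST,
                           native_token="GAME")
```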

2. Key Technologies

1) Rollup & AnyTrust

These two protocols respectively support Arbitrum One and Arbitrum Nova. As mentioned earlier, Arbitrum One is a core mainnet Rollup; Arbitrum Nova is a second mainnet Rollup that integrates the AnyTrust protocol, which allows for faster settlement and reduced costs through the introduction of a "trust assumption."

Arbitrum Rollup is an OP Rollup, so we won't go into too much detail about it. Instead, we will focus on analyzing the AnyTrust protocol.

The AnyTrust protocol mainly manages data availability, relying on a Data Availability Committee (DAC) made up of third-party organizations. By introducing a "trust assumption," it greatly reduces transaction costs. The AnyTrust chain (Arbitrum Nova) runs alongside Arbitrum One, offering lower costs and faster transactions.

So what exactly is the "trust assumption"? And why does it lower transaction costs while requiring only minimal trust?

According to Arbitrum's official documentation, the AnyTrust chain is run by a committee of nodes and relies on third-party institutions in the DAC to ensure data availability. Using a set of cryptographic techniques, the AnyTrust protocol stays secure as long as a small number of committee members (for example, two) are honest; it does not need a majority to be honest.

Consider a committee of 20 members, of which at least 2 are assumed to be honest. BFT requires more than two-thirds of the members to be honest; by comparison, AnyTrust lowers the trust threshold dramatically.

Because the committee promises to provide the transaction data, nodes do not need to record all L2 transaction data on L1. They only need to record the hash of each transaction batch, which greatly reduces the cost of the Rollup. This is why the AnyTrust chain can lower transaction costs.

On the trust question, assume as above that at least 2 of the 20 members are honest. If 19 members sign a commitment to keep a batch's data available, the batch can be safely accepted. Even if the one member who did not sign is honest, at least 1 of the 19 signers must also be honest.

What if members do not sign, or a few refuse to cooperate so that the required signatures cannot be collected? The AnyTrust chain keeps operating but falls back to the original Rollup protocol, publishing the data on Ethereum L1. Once the committee is working normally again, the chain switches back to the cheaper, faster mode.
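The 20-member, 2-honest example generalizes to a simple rule. With N members and at least H honest, at most N - H can be dishonest, so N - H + 1 signatures must include an honest member. The sketch below encodes that rule and the fallback described above. The function names are hypothetical, and real signature verification is omitted; this is not Arbitrum's code.

```python
def required_signatures(committee_size: int, assumed_honest: int) -> int:
    """Fewest signers guaranteed to include an honest member.
    At most committee_size - assumed_honest members can be dishonest, so one more suffices."""
    if not 1 <= assumed_honest <= committee_size:
        raise ValueError("need 1 <= assumed_honest <= committee_size")
    return committee_size - assumed_honest + 1

def post_batch(signers: set[str], committee: set[str], assumed_honest: int) -> str:
    """Decide what goes to L1 for one batch."""
    valid = signers & committee  # ignore signatures from non-members
    if len(valid) >= required_signatures(len(committee), assumed_honest):
        return "certificate"  # cheap path: only the batch hash and signatures go to L1
    return "rollup"           # fallback: publish the full batch data on L1

committee = {f"member{i:02d}" for i in range(20)}
members = sorted(committee)
```

With `required_signatures(20, 2)` equal to 19, a batch signed by 19 members takes the cheap path, and any batch with fewer valid signatures falls back to Rollup mode.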

Arbitrum launched this protocol to meet the needs of applications, such as Gamefi, that require high processing speed and low costs.

2) Nitro

Nitro is the latest version of Arbitrum technology. Its key component is the prover, which runs Arbitrum's classic interactive fraud proofs over WASM code. All of Nitro's components are complete, and Arbitrum finished the upgrade at the end of August 2022, seamlessly migrating the existing Arbitrum One to Arbitrum Nitro.
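The core of an interactive fraud proof is bisection. The asserter and challenger agree on the starting state but disagree on the final one, so they repeatedly halve the disputed range until a single step remains. L1 then re-executes only that one step. In this sketch, both parties' checkpoint lists are handed over at once for brevity. In Arbitrum the game is played round by round with on-chain commitments, and the one-step proof runs over WASM. The function names are hypothetical.

```python
from typing import Callable

def find_disputed_step(asserter: list[int], challenger: list[int]) -> tuple[int, int]:
    """Binary search over checkpoints; states[i] is the claimed state after i steps.
    Returns (i, rounds) where the parties agree at i and disagree at i + 1."""
    lo, hi = 0, len(asserter) - 1
    if asserter[lo] != challenger[lo] or asserter[hi] == challenger[hi]:
        raise ValueError("parties must agree on the start and disagree on the end")
    rounds = 0
    while hi - lo > 1:  # invariant: agree at lo, disagree at hi
        mid = (lo + hi) // 2
        if asserter[mid] == challenger[mid]:
            lo = mid
        else:
            hi = mid
        rounds += 1
    return lo, rounds

def one_step_proof(step_fn: Callable[[int], int], pre_state: int, claimed_post: int) -> bool:
    """L1 re-executes exactly one step; True means the asserter's claim holds."""
    return step_fn(pre_state) == claimed_post

honest = [3 * i for i in range(1025)]                               # 1,024 honest steps of s -> s + 3
cheat = [s + (1 if i >= 700 else 0) for i, s in enumerate(honest)]  # wrong from step 700 on
```

Only about log2(N) rounds are needed, here 10 for 1,024 steps. L1 never replays the full execution, which is what keeps optimistic rollups cheap.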

Nitro has the following characteristics:

Two-phase transaction processing: User transactions are first organized into a single ordered sequence, to which Nitro commits. The transactions are then processed in that order by a deterministic state transition function.

Geth: Nitro adopts the most widely supported Ethereum client, Geth (go-ethereum), to support Ethereum's data structures, formats, and virtual machine, ensuring better compatibility with Ethereum.

Separate execution and validation: Nitro compiles the same source code twice, once into native code for executing transactions on Nitro nodes, and again into WASM for validation.

OP Rollup with interactive fraud proof: Nitro uses OP Rollup, including Arbitrum's innovative interactive fraud proof, to settle transactions on the layer 1 Ethereum chain.

These Nitro features provide the technical foundation for Orbit's L3 and L4 use cases, helping Arbitrum attract developers who want to customize and launch their own chains.
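The two-phase design (sequence first, then execute deterministically) is what lets any party recompute the same state from the committed sequence. The sketch below illustrates only that determinism requirement. Nitro compiles the same Go code to native code and to WASM; here, the same Python function is simply called by two hypothetical parties. The hash-based state function is a stand-in for a real state transition function.

```python
import hashlib

def sequence(inbox: list[tuple[int, str]]) -> list[str]:
    """Phase 1: fix one global order (here by arrival time, ties broken by tx id)."""
    return [tx for _, tx in sorted(inbox)]

def commit(ordered: list[str]) -> str:
    """Commitment to the ordered sequence, standing in for what is posted to L1."""
    return hashlib.sha256("\n".join(ordered).encode()).hexdigest()

def state_transition(state: int, tx: str) -> int:
    """Phase 2: a pure function of (state, tx); no clocks, randomness, or I/O."""
    return int.from_bytes(hashlib.sha256(f"{state}:{tx}".encode()).digest()[:8], "big")

def execute(ordered: list[str], genesis: int = 0) -> int:
    state = genesis
    for tx in ordered:
        state = state_transition(state, tx)
    return state

inbox = [(2, "tx-b"), (1, "tx-a"), (3, "tx-c")]
ordered = sequence(inbox)
node_state = execute(ordered)       # e.g. a node executing natively
validator_state = execute(ordered)  # e.g. a validator replaying the same sequence
```

Once the order is committed, any disagreement about the resulting state can only come from faulty execution. The interactive fraud proof is designed to pinpoint exactly such faults.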

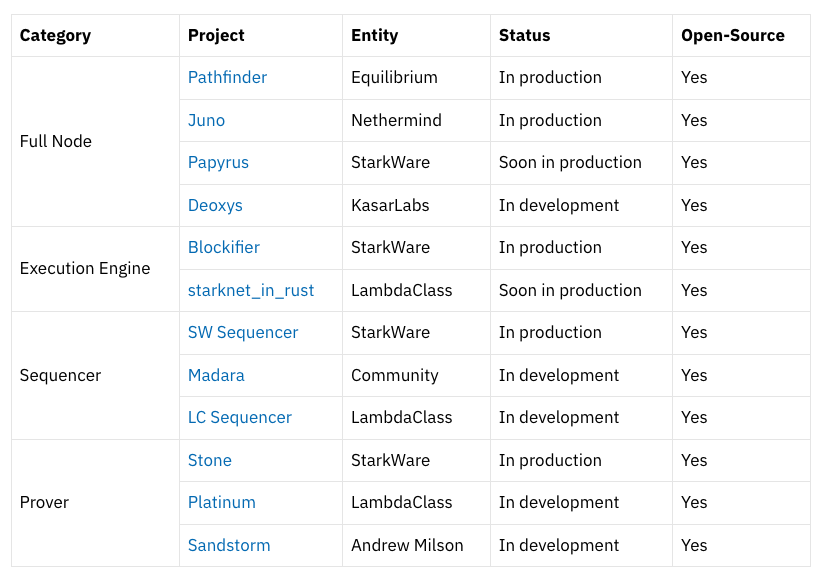

VII. Starknet Stack

StarkWare co-founder Eli Ben-Sasson announced at the EthCC conference in Paris that Starknet is launching the Starknet Stack, allowing any application to deploy its own Starknet application chain in a permissionless manner.

Key technologies such as STARK proofs, the Cairo programming language, and native account abstraction have driven Starknet's rapid development. Application chains that developers customize with the Stack are highly scalable and configurable; they greatly increase network throughput and relieve congestion on the mainnet.

The Starknet Stack is still only a preliminary concept, and no official technical documentation has been released. Even so, Madara Sequencer and LambdaClass are developing Starknet-compatible sequencer and stack components. The official team is also working on the upcoming Starknet Stack, including full nodes, execution engines, verifiers, and more.

Notably, StarkNet recently submitted a proposal for a "Simple Decentralized Protocol" to move L2s away from relying on a single operator-run sequencer. Ethereum is decentralized, but L2s are not, and the MEV revenue available to a single sequencer gives it an incentive to act against users.

StarkNet listed some proposed solutions in their proposal, such as:

L1 Staking and Leader Election: Community members can join the Staker pool by staking on Ethereum, without permission. Based on the distribution of assets in the pool and an on-chain random number, a group of Stakers is randomly selected as Leaders responsible for block production in each Epoch. This lowers the barrier to becoming a Staker, and the randomness makes it harder to capture gray MEV income.

L2 Consensus Mechanism: A Tendermint-based Byzantine fault-tolerant consensus mechanism in which the elected Leaders participate as nodes. Once a block is confirmed by consensus, it is executed by Voters, and the Proposer calls the Prover to generate a ZKP.
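The two proposals can be sketched together: stake-weighted random selection of an epoch's Leaders, and a Tendermint-style commit rule requiring more than two-thirds of voting power. The source only says that selection depends on pool asset distribution and an on-chain random number. Drawing without replacement with `random.choices` is an assumed sampling method, and the integer `seed` stands in for the on-chain randomness. Python's `random` module is not cryptographically secure; it is used here only for reproducibility.

```python
import random

def elect_leaders(stakes: dict[str, int], k: int, seed: int) -> list[str]:
    """Pick k distinct Leaders for an epoch, each draw weighted by remaining stake.
    `seed` is a stand-in for an on-chain random beacon (assumption); stakes must be > 0."""
    rng = random.Random(seed)
    pool = dict(stakes)
    leaders = []
    for _ in range(min(k, len(pool))):
        names = sorted(pool)  # fixed iteration order so the same seed gives the same result
        chosen = rng.choices(names, weights=[pool[n] for n in names], k=1)[0]
        leaders.append(chosen)
        del pool[chosen]
    return leaders

def has_bft_quorum(votes: set[str], stakes: dict[str, int]) -> bool:
    """Tendermint-style commit rule: strictly more than 2/3 of total voting power."""
    total = sum(stakes.values())
    voted = sum(stakes[v] for v in votes if v in stakes)
    return 3 * voted > 2 * total

stakes = {"a": 50, "b": 30, "c": 15, "d": 5}
leaders = elect_leaders(stakes, k=2, seed=42)
```

Because every node derives the same Leaders from the same random value, the election needs no extra coordination. Stake weighting, meanwhile, keeps influence proportional to capital at risk.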

The proposal also covers ZK proofs and L1 state updates. StarkNet previously launched an initiative to let the community run Prover code permissionlessly. Combined with that effort, this proposal aims to fix the lack of decentralization in L2s and to strike a better balance within the blockchain trilemma, which is why it is highly anticipated.

Source: https://starkware.co/resource/the-starknet-stacks-growth-spurt/

VIII. Conclusion

By examining CP and the various Layer 2 Stacks, this chapter shows that current Layer 2 Stack solutions can effectively address Ethereum's scalability problems but also bring a series of challenges, especially around compatibility. The technology in L2 Stack solutions is less mature than CP's, and today's L2s can still learn from ideas CP introduced three or four years ago. Technically, then, CP remains well ahead of Layer 2. Advanced technology alone is not enough, however. In the next section, we will discuss token value, ecosystem development, and the strengths, weaknesses, and characteristics of CP and L2 Stacks to give readers a fuller perspective.

References:

https://medium.com/@eternal1997L

https://tokeneconomy.co/the-state-of-crypto-interoperability-explained-in-pictures-654cfe4cc167

https://research.web3.foundation/Polkadot/overview

https://foresightnews.pro/article/detail/16271

https://messari.io/report/ibc-outside-of-cosmos-the-transport-layer?referrer=all-research

https://stack.optimism.io/docs/understand/explainer/#glossary

https://www.techflowpost.com/article/detail_12231.html

https://gov.optimism.io/t/retroactive-delegate-rewards-season-3/5871

https://wiki.polygon.technology/docs/supernets/get-started/what-are-supernets/

https://polygon.technology/blog/introducing-polygon-2-0-the-value-layer-of-the-internet

https://era.zksync.io/docs/reference/concepts/hyperscaling.html#what-are-hyperchains

https://medium.com/offchainlabs

Statement: This report was written by @sldhdhs 3, a student of @GryphsisAcademy, who completed this original work under the guidance of @Zou_Block and @artoriatech. The author is solely responsible for all content, which does not necessarily reflect the views of Gryphsis Academy or of the organization commissioning the report. Editorial content and decisions are not influenced by readers. Please note that the author may own the cryptocurrencies mentioned in this report. This document is for informational purposes only and should not be relied upon as the basis for investment decisions. You are strongly encouraged to conduct your own research and consult independent financial, tax, or legal advisors before making any investment decision. Remember, past performance of any asset is not indicative of future returns.