Original Author: Vitalik Buterin

Original source: Hackernoon

Original compilation: ChinaDeFi

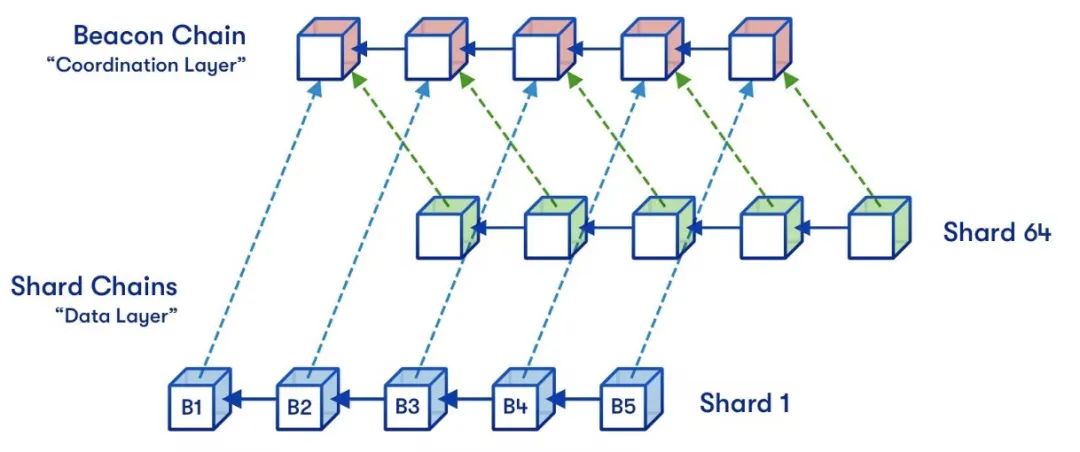

Sharding is the future of scalability for Ethereum and will be key to helping the ecosystem support thousands of transactions per second, and it will also allow large parts of the world to use the platform on a regular basis at an affordable cost. However, it is also one of the more misunderstood concepts in the Ethereum ecosystem and the broader blockchain ecosystem. It refers to a set of ideas with very specific properties, but it is now often merged with technologies with weaker security properties. The purpose of this post is to explain what specific properties sharding provides, how it differs from other non-sharding technologies, and what sacrifices a sharded system must make to achieve these properties.

One of many descriptions of Ethereum's sharded versions. Original drawing by Hsiao-wei Wang, designed by Quantstamp.

first level title

The scalability trilemma

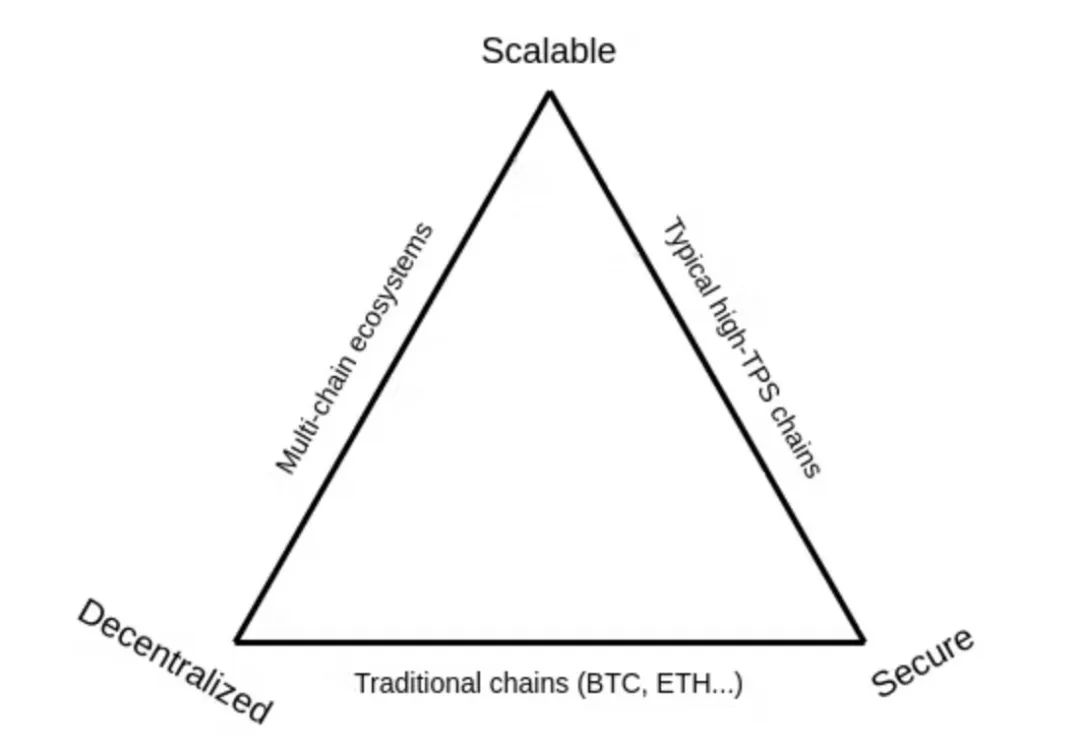

The best way to describe sharding should start with a problem statement that plans and inspires a solution: the scalability trilemma.

The scalability trilemma states that blockchains try to possess three properties, and if we stick to "simple" technologies, then we can only achieve two of these three. These three attributes are:

Scalability: The chain can handle more transactions than a single normal node can verify.

Decentralization: The chain can function without relying on a small group of large centralized actors. This is usually interpreted to mean that when we can't join a group of nodes with an ordinary laptop, we shouldn't have any trust in that node.

Security: The chain is resistant to most attacks on participating nodes. (Ideally 50%; more than 25% is fine, 5% is absolutely not).

Now let's look at these three categories of "easy solutions", only two of which are usually available:

Traditional blockchains: including Bitcoin, pre-PoS/sharded Ethereum, Litecoin and other similar chains. They rely on each participant running a full node to validate each transaction, so they are decentralized and secure, but not scalable.

High TPS chains: including the DPoS family, but also many others like it. They rely on a small number of nodes (usually 10-100) to maintain consensus among each other, and users must trust a majority of them. This is scalable and secure, but it is not decentralized.

Multi-chain ecosystem: This refers to the general concept of "horizontal scaling", by running different applications on different chains and communicating between them using cross-chain communication protocols. This is decentralized and scalable, but it's not secure because an attacker only needs to get a majority of consensus nodes in one of the many chains to disrupt that chain and potentially cause a chain reaction, affecting applications in other chains It can also cause great damage.

Sharding is a technology that can satisfy all three needs at the same time. A sharded blockchain is:

Scalable: It can handle many more transactions than a single node.

Decentralization: It can completely "survive" on ordinary laptops without relying on any "super nodes".

The remainder of this article describes how sharded blockchains do this.

first level title

Sharding by Random Sampling

The most understandable sharding is the sharding by random sampling. Sharding via random sampling has weaker trust properties than the form of sharding we have built in the Ethereum ecosystem, but it uses simpler techniques.

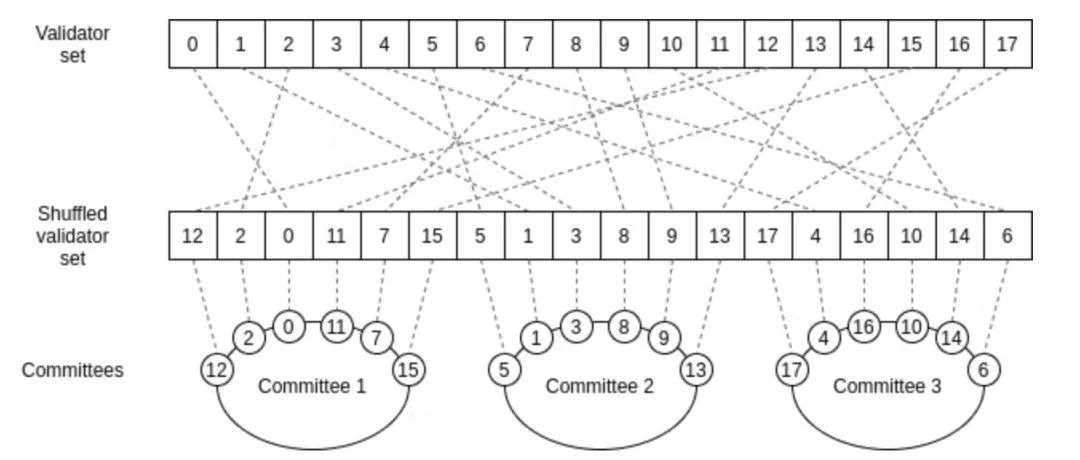

Its core idea is as follows. Suppose we have a proof-of-stake chain with a large number (say 10,000) of validators, and a large number (say 100) of blocks to be verified at the same time. No single computer is powerful enough to validate all of these blocks before the next set of blocks come in.

So what we do is split the validation work randomly. We randomly shuffle the validator list, let the first 100 validators in the scrambled list verify the first block, and let the last 100 validators in the scrambled list verify the second block, so that analogy. This group of validators who are randomly selected to validate a block (or perform some other task) is called a committee.

When validators validate a block, they issue a signature attesting to the fact that they have done so. Everyone else, instead of verifying 100 full blocks, now only verifies 10,000 signatures, which is much less work, especially with BLS signature aggregation. Instead of broadcasting over the same P2P network, each block is broadcast on a different subnet, and nodes only need to join the subnet corresponding to the block they are responsible for (or interested in for other reasons).

We can introduce some mathematical terms to discuss what's going on. Using Big O notation, we denote the computational power of a single node by "O(C)". Traditional blockchains can handle blocks of O(C) size. As mentioned above, the shard chain can process O(C) blocks in parallel (the cost of each node indirectly verifying each block is O(1), because each node only needs to verify a fixed number of signatures), each The capacity of a block is O(C), so the total capacity of the shard chain is O(C2). This is why we call this type of sharding secondary sharding, and this effect is a key reason why we believe sharding is the best way to scale blockchains in the long run.

first level title

FAQ: What is the difference between splitting into 100 committees and splitting into 100 separate chains?

There are two key differences:

Random sampling prevents attackers from focusing their firepower on one shard. In a multi-chain ecosystem of 100 chains, an attacker only needs about 0.5% of the total stake to cause damage. In a sharded blockchain, an attacker would have to own close to 30-40% of the entire stake to do the same (in other words, the chain has shared security). Of course, they can wait until they are lucky enough to randomly get 51% in a shard, although they own less than 50% of the total stake, this will multiply for attackers with much less than 51% . If the attacker's is below 30%, then this thing is almost impossible.

Both of these differences ensure that sharding creates an environment for applications that maintains the key security properties of a single-chain environment, which is simply not possible with a multi-chain ecosystem.

first level title

Improve sharding with a better security model

A common statement in bitcoin circles, and one that I completely agree with, is that blockchains like bitcoin (or ethereum) don't fully rely on honest majority assumptions. If there is a 51% attack on such a blockchain, the attacker can do nasty things like revert or censor transactions, but they cannot insert invalid transactions. Even if they revert or censor transactions, this behavior can be easily detected by users running normal nodes, so if the community wants to fork to coordinate the resolution of the attack, removing the power of the attacker, they can do so quickly at this point.

Ideally, we would like to have a form of sharding that avoids the 51% validity trust assumption and retains the strong security barriers that traditional blockchains achieve through full verification. And that's exactly what we've done most of our research over the past few years.

first level title

Scalable Verification of Computation

We can decompose the scalable verification problem of 51%-attack-proof into two cases:

Validate computations: Assuming we have all the inputs for the computations, check that certain computations are done correctly.

Validate data availability: check that the computational inputs themselves are stored in some form, and that they can be downloaded if really needed; this check should be performed without actually downloading the entire input itself (since the data may be too large to download every blocks).

When validating a block in the blockchain, this includes computation and data availability checks: we need to be confident that the transactions in the block are valid, and that the new state root hash declared in the block is the correct result of executing those transactions, But we also need to be confident that enough data has been published in the block so that the user who downloaded that data can compute the state and continue working on the blockchain. The second part is a very subtle but very important concept called the data availability problem; more on that later.

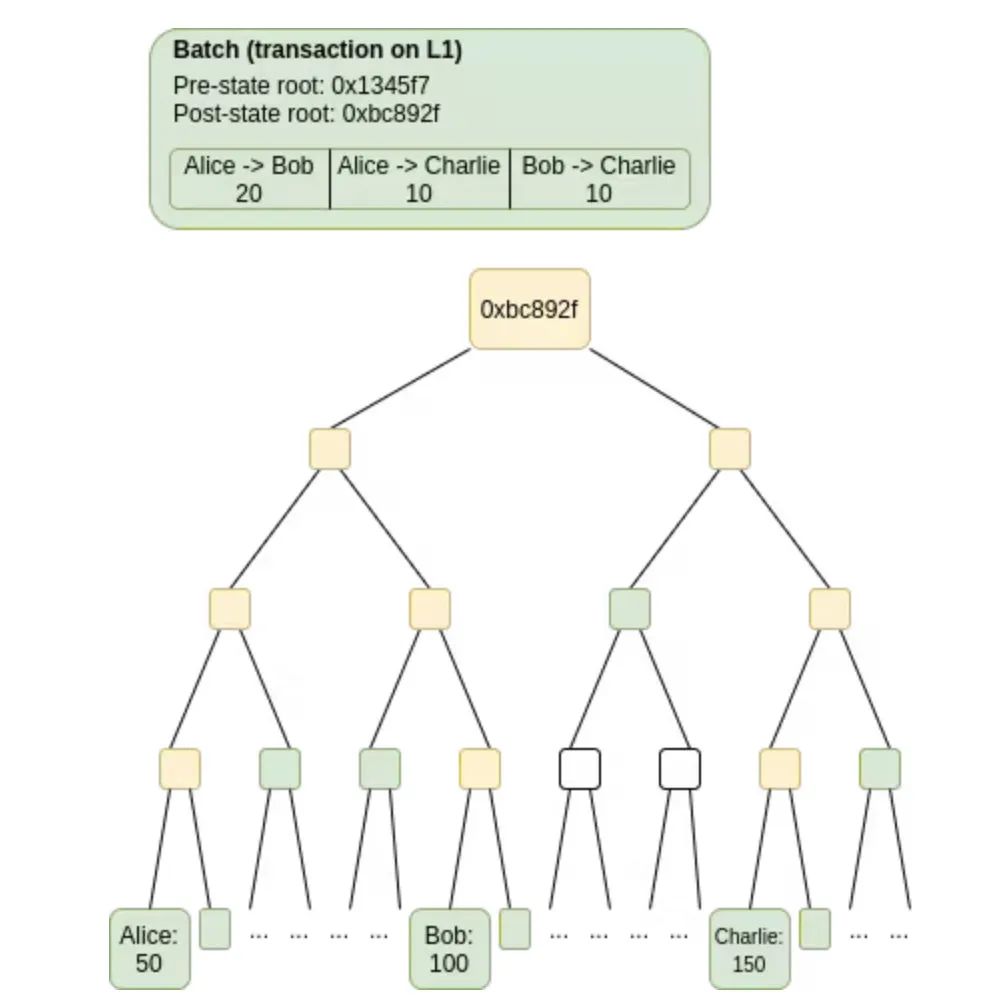

Scalable verification computation is relatively easy; there are two types of techniques: fraud proofs and ZK-SNARKs.

Both techniques can be briefly described as follows:

A fraud proof is a system that accepts the result of a computation and requires someone with a pledged deposit to sign a message that reads "I prove that if you perform computation C with input X, you will get output Y". By default, we trust these messages, but we also give others who have staked deposits the opportunity to challenge (a message with a signature saying "I disagree, the output is Z"). All nodes run computations only when challenged. A mistake by either of the two parties will lose their deposit and all calculations depending on the result of that calculation will be recalculated.

ZK-SNARK is a form of cryptographic proof that directly proves the statement that "performing a computation C on an input X results in an output Y". This proof is cryptographically "sound": if C(x) is not equal to Y, it is computationally impossible to make an efficient proof. Even though running C itself takes a lot of time, the proof can be verified quickly.

There is a class of semi-scalable systems that do scalable verification of computation only, while still requiring every node to verify all data. This can be achieved by using a set of compression tricks to replace most data with computation. This is the domain of rollups.

first level title

Scalable verification of data availability is more difficult

Fraud proofs cannot be used to verify the availability of data. Computational fraud proofs rely on the fact that the inputs to the computation are published on-chain the moment the original claim is submitted, so that, if challenged, the execution of the challenge occurs in the exact same "environment" in which the original execution occurred . In the case of checking for data availability, we cannot do that because the problem is precisely that there is too much data to check to post it on-chain. Therefore, fraud prevention schemes for data availability run into a critical problem: someone can claim "data X is available", but not publish it, wait for a challenge, and then publish data X, making it appear to the challenger to be untrue to the rest of the network. correct.

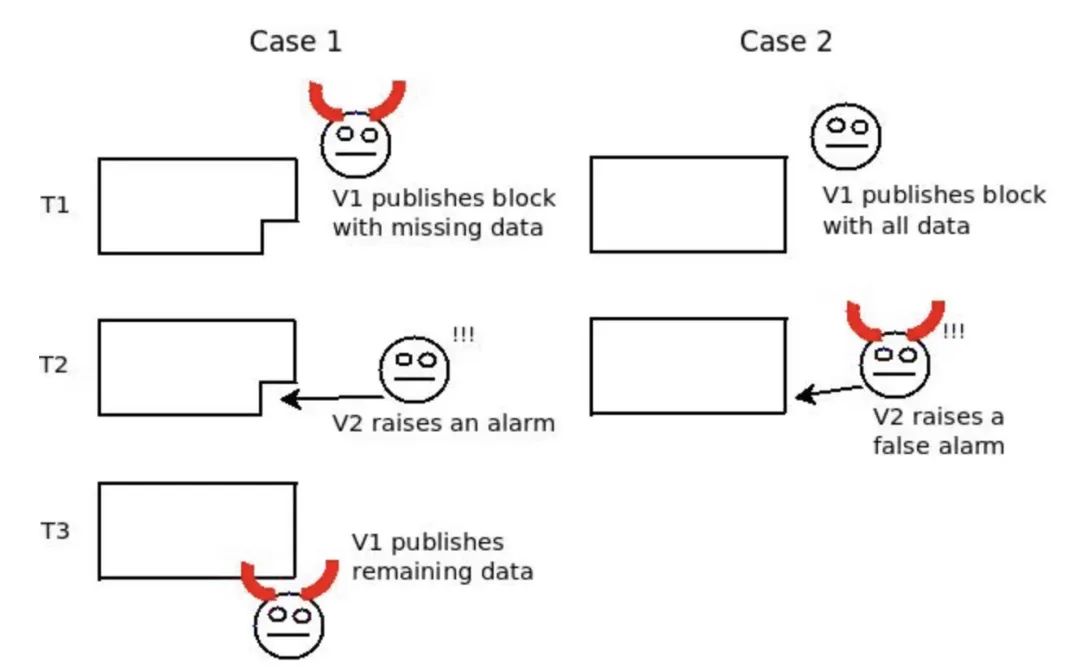

This is expanded on the Fisherman's Dilemma:

The fact that there is no way to tell who is right and who is wrong makes it impossible to have an effective data availability fraud prevention scheme.

first level title

FAQ: What if some data is not available? With ZK-SNARKs, you can be sure that everything is valid, isn't that enough?

There are some strong information-theoretic arguments that the problem is fundamental and that there are no neat tricks available to solve it.

first level title

So how can I check if 1 MB of data is available without trying to download it? It sounds impossible!

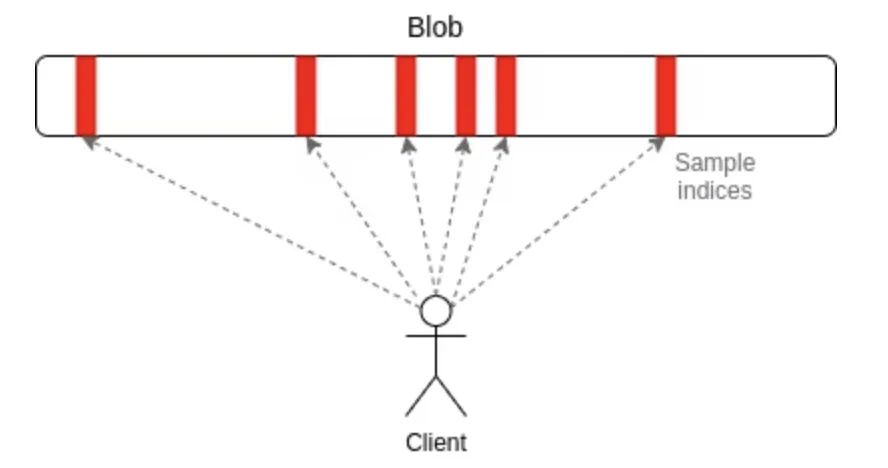

The key is a technique known as data availability sampling. Data availability sampling works as follows:

Use a tool called erasure coding to expand a data with N blocks into a data with 2N blocks, so that any N blocks in it can restore the entire data.

Check availability, instead of trying to download the entire data, the user simply randomly selects a fixed number of locations in the block (e.g. 30 locations), and only if they successfully find the block in the block at all the locations they chose, the blocks will be accepted.

ZK-SNARK can be used to verify whether the erasure coding on the data is correct, and then Merkle branches can be used to verify individual blocks. Alternatively, polynomial commitments (eg: Kate (aka KZG) commitments) can be used, which essentially do erasure coding, proof of a single element, and correctness verification in one simple component - this is what ethereum sharding uses of.

first level title

Review: How do we make sure everything is right again?

Suppose we have 100 blocks and want to efficiently verify the correctness of all blocks without relying on committees. We need to do the following:

Each client performs data availability sampling for each block, verifying that the data in each block is available, even if the size of the entire block is 1 megabyte or larger, each block only needs to download a few thousand byte. A client will only accept a block if all data for its availability challenge has been correctly responded to.

Now that we have verified the availability of the data, it becomes easier to verify correctness. There are two methods:

1. We can use ZK-SNARK. Each block has a ZK-SNARK to prove correctness.

2. We can use fraud proofs: some participants who have pledged deposits can sign the correctness of each block. Other nodes, called challengers (or fishermen) randomly check and try to process blocks completely. Because we have checked the availability of the data, it is possible to download the data and fully process any particular block. If they find an invalid block, they issue a challenge that everyone validates. If it turns out that the block is broken, then that block and all future blocks that depend on it need to be recalculated.

In the case of Ethereum sharding, the near-term plan is for shard blocks to contain only data; that is, sharding is purely a "data availability engine" that uses this secure data space along with fraud proofs or ZK-SNARKs to achieve high Throughput secure transaction processing capability is the job of layer 2 rollup. However, it is entirely possible to create such a built-in system to add "native" high-throughput execution.

first level title

What are the key properties of a sharding system? What is the compromise?

The key goal of sharding is to replicate as closely as possible the most important security properties of traditional (non-sharded) blockchains, but without requiring every node to personally verify every transaction.

Shards are very close. In a traditional blockchain:

Invalid blocks cannot go through because validators will notice that they are invalid and ignore them.

Unavailable blocks cannot go through because validators cannot download them, and ignore them.

In a sharded blockchain with advanced security features:

Invalid blocks cannot pass because:

Fraud proofs will quickly catch them and inform the entire network of the incorrectness of the block and severely punish the creator;

ZK-SNARK proves correctness, we cannot create a valid ZK-SNARK for an invalid block.

Unusable blocks cannot pass because:

If a block has less than 50% available data, then for each client at least one data availability sample check will almost certainly fail, which will cause the client to reject the block;

If at least 50% of the data in a block is available, then effectively the entire block is available, since only one honest node is required to reconstruct the rest of the block.

Traditional high TPS chains without sharding cannot provide these guarantees. A multi-chain ecosystem cannot avoid the problem of an attacker choosing a chain to attack and easily take over it (chains can share security, but if this is not done well, it will become a de facto traditional high TPS chain with all disadvantages, if done well, it will just be a more complex implementation of the above sharding techniques).

Sidechains are highly implementation dependent, but they are often vulnerable to weaknesses of traditional high TPS chains (if they share miners/validators) or multi-chain ecosystems (if they do not share miners/validators). Shard chains can avoid these problems.

However, there are some cracks in the armor of the sharding system. It is worth noting that:

Shard chains that rely only on committees are vulnerable to adaptive adversaries and have weaker accountability. That is, if an adversary has the ability to hack (or shut down) any set of nodes they choose in real-time, they only need to attack a small subset of nodes to disrupt a committee. Furthermore, if an adversary (whether an adaptive adversary or just a 50% attacker) corrupts a committee, only a small fraction is penalized. This is another key reason why data availability sampling, along with fraud proofs or ZK-SNARKs, is a great addition to random sampling techniques.

Data availability sampling is only safe if a sufficient number of online clients collectively issue sufficient data availability sampling requests whose responses almost always overlap to account for at least 50% of the blocks. In practice, this means that there must be several hundred clients online (and this number increases with the ratio of system capacity to individual node capacity). This is a minority-in-n trust model - generally quite trustworthy, but certainly not as reliable as 0-in-n trust for nodes in non-shard chains.

If a shard chain relies on fraud proofs, then it relies on timing assumptions; if the network is too slow, nodes may accept a finalized block before fraud prevention programs show up and show that it is wrong. Fortunately, if we follow the strict rule of reverting all invalid blocks after they are found, then this threshold is a user-set parameter: each user chooses how long they wait for the final result, and if they don't want to wait enough For a long time, losses are incurred, and more cautious users can be assured of safety. Still, this detracts from the user experience. Using ZK-SNARK to verify the validity solves this problem.

Large raw data volumes need to be passed, which increases the risk of failure under extreme network conditions. Small amounts of data are easier to send than large amounts of data. If the block explorer wants to save the entire chain, it needs to store more data.

Sharded blockchains rely on a sharded peer-to-peer network, with each p2p "subnet" being more vulnerable to attacks because it has fewer nodes. The subnetwork model for data availability sampling alleviates this problem because there is some redundancy between subnetworks, but there is still a risk.

Incidentally, if the throughput of a sharded blockchain becomes too high, its security risk becomes greater, which is a key reason why efforts to scale to hyper-quadratic sharding have been largely abandoned; it appears that maintaining Quadratic sharding is just that quadratic sharding is a nice compromise.

first level title

Why not centralize production and shard verification?

An often-proposed alternative to sharding is to build a chain with a structure similar to a centralized high-TPS chain, except that it uses data availability sampling and sharding to verify validity and availability.

This improves on the centralized high TPS chains that currently exist, but it is still much weaker than sharded systems. There are several reasons for this:

In high TPS chains, it is difficult to detect block producer censorship. Censorship detection requires either (i) being able to look at every transaction and verify that there are no transactions that are obviously worth entering but cannot enter, or (ii) have a 1 in n trust model among block producers and verify that there are no blocks that cannot be entered. In a centralized high TPS chain, (i) is impossible, (ii) more difficult, because the small number of nodes makes the trust model of 1 in n more likely to collapse, if the block time of the chain is too large for DAS Fast (as most centralized high TPS chains do), it's hard to prove that a node's blocks aren't rejected because they're all published too slowly.

If the majority of block producers and ecosystem members try to force through an unwelcome protocol change, user clients will surely find it, but it is much harder for the community to rebel and fork because they It will be necessary to spin up a new set of very expensive high-throughput nodes to maintain a chain that maintains the old rules.

Centralized infrastructure is more susceptible to scrutiny by outside actors. The high throughput of block producing nodes makes them very easy to detect and even easier to shut down. Logistically, it is much easier to censor dedicated HPC than to track down an individual user's laptop.

A proper sharding system is best used as the base layer. With a sharded base layer, we can always create a centralized production system by building it as a rollup. However, if we have a base layer that relies on centralized block production, we cannot build a more decentralized layer 2 on top of it.