Compilation of the original text: Nanfeng

Compilation of the original text: Nanfeng

However, one of the challenges with this goal is that complexity is hard to define, and sometimes, you have to make a trade-off between two options that introduce different kinds of complexity and have different costs. How do we compare?

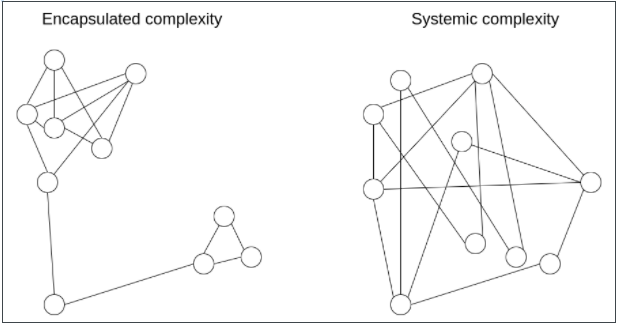

A powerful and intelligent tool that allows us to think more finely about complexity is the distinction between what we call encapsulated complexity andsystem complexity(systemic complexity)。

"Encapsulated complexity" occurs when a subsystem of a system is internally complex but presents a simple "interface" to the outside. "System complexity" occurs when different parts of a system cannot even be clearly separated and have complex interactions with each other.

Below are a few examples.

BLS signature vs. Schnorr signature

BLS signature and Schnorr signature are two commonly used cryptographic signature schemes that can be composed of elliptic curves.

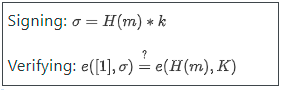

BLS signatures look mathematically very simple:

H is a hash function, m is the message, k and K are the private and public keys. So far, simple. However, the real complexity is hidden in the definition of the e-function: elliptic curve pairings, one of the most inscrutable mathematical parts of all of cryptography.

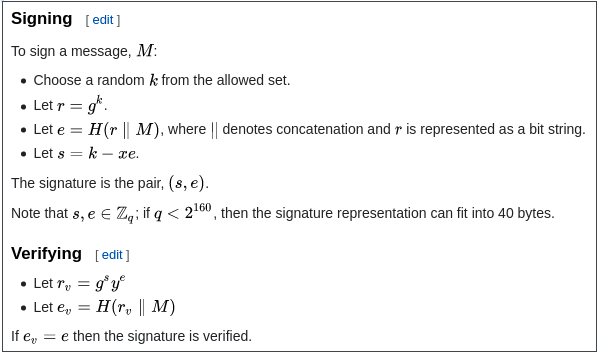

Now, let's look at Schnorr signatures. Schnorr signatures only rely on basic elliptic curves. But the signing and verification logic is a bit more complicated:

For example:

For example:

Doing a BLS multisignature (combined signature of two keys k1 and k2) is simple: just σ1+σ2. But Schnorr multi-signature requires two rounds of interaction, and needs to deal with some tricky Key Cancellation attacks.

Schnorr signatures need to generate random numbers, but BLS signatures do not.

secondary title

Cryptography vs. Cryptoeconomics

An important design choice that arises in many blockchain designs is the comparison between cryptography and cryptoeconomics. This (like in Rollups) is often a choice between validity proofs (ie ZK-SNARKs) and fraud proofs.

ZK-SNARKs are complex technologies. While the basic idea behind how ZK-SNARKs work can be explained clearly in a single article, actually implementing a ZK-SNARK to verify some computation involves many times more complexity than the computation itself (thus, this is why for ZK-SNARKs proofs for the EVM are still under development, while fraud proofs for the EVM are already in beta). Efficiently implementing a ZK-SNARK proof involves special-purpose optimized circuit design, the use of unfamiliar programming languages, and many other challenges. Fraud proofs, on the other hand, are simple in themselves: you just run computations directly on-chain if someone raises a challenge. For efficiency, a binary search scheme is sometimes added, but even that doesn't add much complexity.

While ZK-SNARKs are complex, their complexity is encapsulated complexity. The relatively low complexity of fraud proofs, on the other hand, is the system complexity. The following are examples of some of the system complexities introduced by fraud proofs:

They require careful incentive engineering to avoid the validator's dilemma.

If done with consensus, they would require additional transaction types for fraud proofs, while also taking into account what would happen if many participants were competing to submit fraud proofs at the same time.

They rely on a synchronization network.

They allow censorship attacks to also be used for theft.

Fraud-proof based Rollups require liquidity providers to support instant withdrawals.

secondary title

various examples

PoW (Nakamoto Consensus):Lower encapsulation complexity, because the mechanism is very simple and easy to understand, but higher system complexity (such as selfish mining attack).

Hash function:High packaging complexity, but with very well-understood properties, so the system complexity is low.

Random shuffling algorithm:Shuffle algorithms can be internally complex (such as Whisk), but ensure strong randomness, and easy to understand; or internally simple, but can produce weak and difficult to analyze random properties (such as system complexity ).

Miner Extracted Value (MEV):A protocol strong enough to support complex transactions may be fairly simple internally, but those complex transactions can have complex systemic effects on the protocol's incentives by proposing blocks in very abnormal ways.

Verkle tree:secondary title

How do we weigh that?

Usually, the option with lower packaging complexity is also the option with lower system complexity, so there is one option that is clearly simpler. But at other times, you have to make a difficult choice between one complexity and another. It should be clear at this point that if it's encapsulating complexity, it's less dangerous. The risk posed by the complexity of a system is not a simple function of the length of the specification; a small 10-line fragment of the specification that interacts with other parts will be more complex than a 100-line function that would otherwise be considered a black box .

However, there are limitations to this approach that favors encapsulation of complexity. Software bugs may appear in any piece of code. When the code becomes larger and larger, the probability of errors is close to 1. Sometimes what started as encapsulation complexity can become system complexity when you need to interact with subsystems in new and unexpected ways.

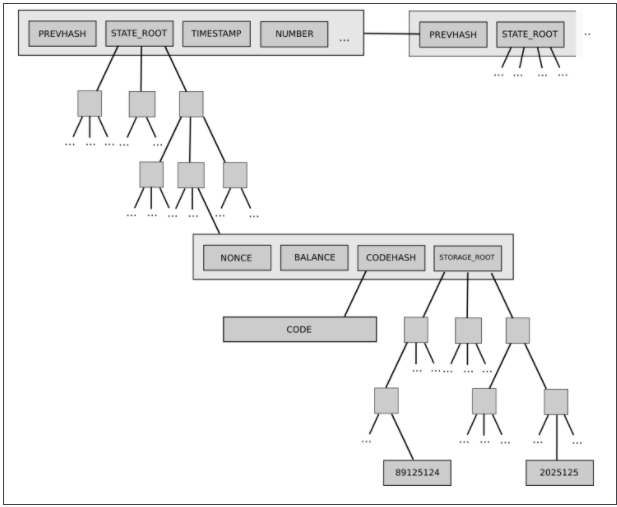

An example of the latter is Ethereum's current two-level state tree, which features a tree of account objects, where each account object in turn has its own storage tree.

This tree structure is complex, but at first this complexity seems to be well encapsulated: the rest of the protocol interacts with the tree as a readable and writable key/value store, so we don't have to worry about how the tree is constructed.

Later, however, this complexity proved to have systemic implications: the ability for accounts to have arbitrarily large storage trees meant that there was no way to reliably expect a particular state part (eg. "all accounts starting with 0x1234") to have predictable size. This makes it harder to split the state into multiple parts, complicating the design of synchronization protocols and attempts to distribute storage processes.Why does packaging complexity become systemic? Because the interface has changed.What is the solution? Current proposals to move to Verkle trees also include moving to a balanced single-level tree design.

Ultimately, which type of complexity is preferred in any given situation is a question with no easy answer.The best we can do is support encapsulation complexity moderately, but not too much, and exercise our judgment in each concrete case. haveSometimes, it is indeed the best practice to sacrifice a little system complexity to greatly reduce packaging complexity. Other times, you may even misjudge what is encapsulated and what is not. Every situation is different.