Original author: Adrian Chow

Contributed by Jonathan Yuen and Wintersoldier

Summary

Oracles are indispensable for securing the value locked in DeFi protocols. Of DeFi's total value locked of US$50 billion, US$33 billion is secured by oracles.

However, the inherent delay in oracle price feed updates gives rise to a subtype of Maximal Extractable Value (MEV) known as Oracle Extractable Value (OEV). OEV includes oracle front-running, arbitrage, and inefficient liquidations.

A growing number of designs are available to prevent or mitigate OEV leakage, each with its own trade-offs. This article discusses the existing design options and their trade-offs, and proposes two new ideas along with their value propositions, open issues, and development bottlenecks.

Introduction

Oracles are arguably one of the most important pieces of infrastructure in DeFi today. They are an integral part of most DeFi protocols, which rely on price feeds to settle derivatives contracts, liquidate undercollateralized positions, and more. Currently, oracles secure US$33 billion, roughly two-thirds of the US$50 billion total value locked on-chain [1]. However, for application developers, adding oracles brings obvious design trade-offs and issues, stemming from value lost through front-running, arbitrage, and inefficient liquidations. This article classifies this value loss as Oracle Extractable Value (OEV), outlines its key issues from an application perspective, and, based on industry research, attempts to lay out the key considerations for adding oracles to DeFi protocols safely and reliably.

Oracle Extractable Value (OEV)

This section assumes that the reader has a basic understanding of oracle functionality and the differences between push-based and pull-based oracles. Price feeds for individual oracles may vary. See the appendix for an overview, classification, and definitions.

Most applications that use oracle price feeds only need to read a price: decentralized exchanges running their own pricing models use oracle price feeds as reference prices, and depositing collateral for an overcollateralized loan position only requires an oracle read to determine initial parameters such as the borrowing value and the liquidation price. Excluding extreme cases such as long-tail assets whose prices are updated too infrequently, the delay in oracle price updates is largely unimportant when designing such systems. The most important considerations for the oracle are therefore the accuracy of the price contributors and the decentralization of the oracle provider.

But if latency in price feed updates is an important consideration, more attention should be paid to how the oracle interacts with the application. In such cases, the delay typically creates value extraction opportunities, namely front-running, arbitrage, and liquidations. This subtype of MEV is called OEV [2]. We outline the various forms of OEV before discussing the available implementations and their trade-offs.

Arbitrage

Oracle front-running and arbitrage are commonly known as toxic flow in derivatives protocols, because these trades are made under information asymmetry and often earn risk-free profit at the expense of liquidity providers. OG DeFi protocols like Synthetix have been grappling with this problem since 2018 [3] and have tried various solutions over time to mitigate these negative externalities.

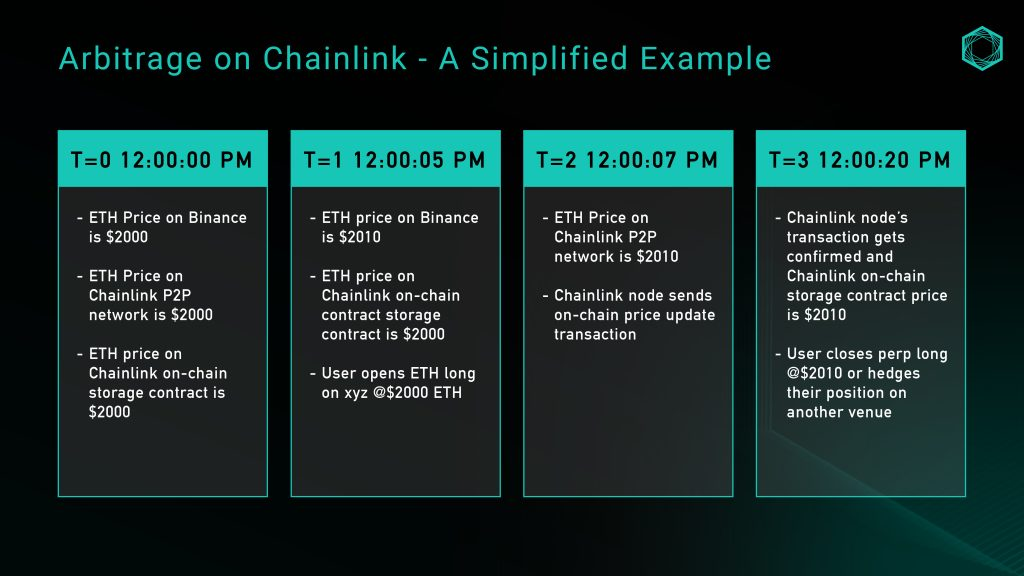

Let us illustrate with a simple example; the perpetual contract decentralized exchange xyz uses the Chainlink oracle in the ETH/USD market. The example uses the ETH/USD price feed to illustrate:

Figure 1: Example of arbitrage using Chainlink oracles

While the above is an oversimplified example that ignores factors such as slippage, fees, and funding, it illustrates the opportunities created by the lack of price granularity that deviation thresholds cause. Searchers can monitor the lag between spot market prices and the price in Chainlink's on-chain storage, and extract risk-free value from liquidity providers (LPs).
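The stale-price opportunity described above can be sketched in a few lines. This is a minimal illustration, not any protocol's actual logic: the 0.5% deviation threshold, the `fee_rate` parameter, and the function names are assumptions made for the example, and slippage and funding are ignored.

```python
def relative_deviation(onchain_price: float, spot_price: float) -> float:
    """Gap between the spot price and the last on-chain price, as a fraction."""
    return abs(spot_price - onchain_price) / onchain_price


def stale_price_edge(
    onchain_price: float,
    spot_price: float,
    deviation_threshold: float = 0.005,  # 0.5%, assumed feed parameter
    fee_rate: float = 0.0,               # hypothetical trading fee, as a fraction
) -> float:
    """Per-unit profit available while the feed still shows the old price.

    Returns 0.0 once the deviation reaches the threshold, since the oracle
    is then expected to push a fresh price on-chain.
    """
    if relative_deviation(onchain_price, spot_price) >= deviation_threshold:
        return 0.0
    gross_edge = abs(spot_price - onchain_price)
    return max(gross_edge - fee_rate * onchain_price, 0.0)


# Spot has moved 0.45%, below the 0.5% threshold, so no update is triggered
# and the exchange still quotes the old price.
edge = stale_price_edge(onchain_price=2000.0, spot_price=2009.0)
print(f"edge per ETH: {edge:.2f} USD")
```

The sketch makes the point that the larger the deviation threshold, the wider the band in which the exchange keeps trading at a price the market has already left behind.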

Front-running

Front-running, similar to arbitrage, is another form of value extraction in which a searcher monitors the mempool for oracle updates and trades ahead of the actual market price before it is committed on-chain. This gives searchers time to bid and trade before the oracle update lands, so that their trade executes at a price favorable to their trading direction.

Perpetual contract decentralized exchanges such as GMX have long been victims of toxic front-running: before all of GMX's oracle updates were routed through the KeeperDAO coordination protocol, about 10% of protocol profits were lost to front-running [4].

What if we just go with a pull model?

One of Pyth's value propositions is that, with Pythnet built on the Solana architecture, publishers can push price updates to the network every 300 milliseconds [5], maintaining low-latency price feeds. When an application queries prices through Pyth's application programming interface (API), it can therefore retrieve the latest price, write it to the target chain's on-chain storage, and perform any downstream operations in the application logic within a single transaction.

As mentioned above, applications can query Pyth directly for the latest price update, update on-chain storage, and complete all related logic in one transaction. Doesn't this effectively solve the problem of front-running and arbitrage?

Not quite. Because Pyth's updates let users choose which price is used in a transaction, they can lead to adverse selection (another name for toxic flow). Although the prices stored on-chain must move forward in time, users can still choose any price that satisfies this constraint, which means arbitrage still exists: searchers can see future prices before deciding to use a past one. Pyth's documentation [6] suggests that a simple way to protect against this attack vector is to add a staleness check that ensures the price is recent enough. However, some buffer time is needed for the update transaction to be included in the next block, so how do we determine the optimal time threshold?

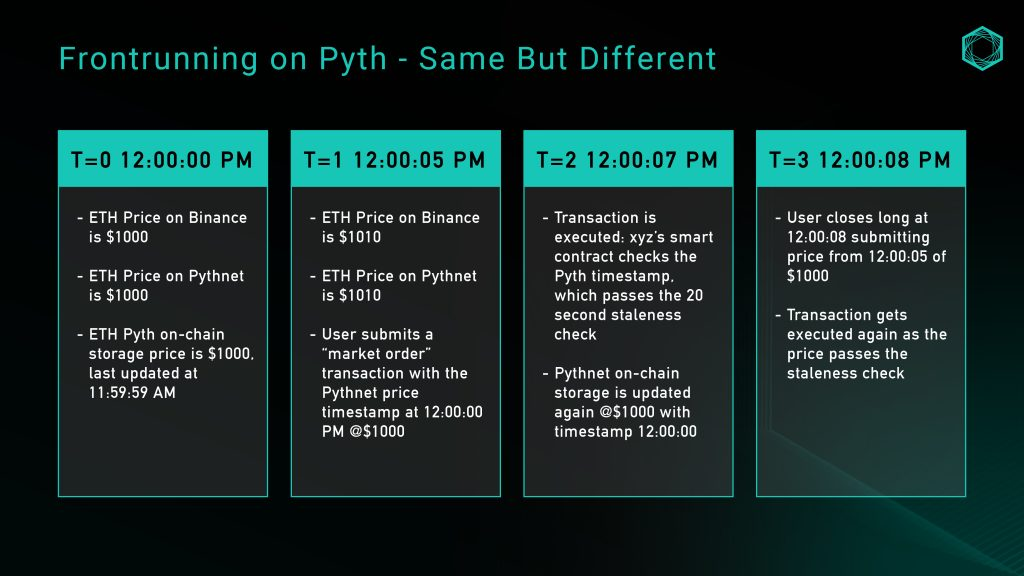

Let us take the perpetual contract decentralized exchange xyz as an example for analysis. This time they are using the Pyth ETH/USD price feed, and the expiration check time is 20 seconds, which means that the timestamp of the Pyth price must be in execution Within 20 seconds of the downstream transaction’s block timestamp:

Figure 2: Front-running example process using Pyth

An intuitive idea is to simply lower the staleness threshold, but a lower threshold may lead to more reverted transactions on networks with unpredictable block times, hurting the user experience. Since Pyth's price feed relies on bridging, sufficient buffer is needed to a) give the Wormhole guardians time to attest to the price, and b) allow the target chain to process the transaction and include it in a block. These trade-offs are detailed in the next section.
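To make the adverse-selection problem concrete, here is a small sketch of a staleness check and of how a searcher could still pick the most favorable price among all updates that pass it. The `PriceUpdate` type, the function names, and the 20-second default are illustrative assumptions, not Pyth's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PriceUpdate:
    price: float
    publish_time: int  # unix seconds


def is_fresh(update: PriceUpdate, block_time: int, max_age: int = 20) -> bool:
    """Staleness check: the price must be at most `max_age` seconds old."""
    age = block_time - update.publish_time
    return 0 <= age <= max_age


def most_favorable_price(
    updates: List[PriceUpdate], block_time: int, is_long: bool, max_age: int = 20
) -> Optional[PriceUpdate]:
    """Price a searcher would choose among all updates that pass the check.

    A long wants the lowest valid entry price, a short the highest.
    """
    fresh = [u for u in updates if is_fresh(u, block_time, max_age)]
    if not fresh:
        return None
    pick = min if is_long else max
    return pick(fresh, key=lambda u: u.price)


updates = [
    PriceUpdate(2000.0, 100),
    PriceUpdate(2005.0, 110),
    PriceUpdate(2010.0, 118),
]
# With a 20-second window the oldest (and cheapest) price is still valid.
print(most_favorable_price(updates, block_time=120, is_long=True))
# With a 5-second window only the latest price can be used.
print(most_favorable_price(updates, block_time=120, is_long=True, max_age=5))
```

A tighter `max_age` shrinks the set of prices a searcher can choose from, at the cost of more transactions failing the check when block inclusion is slow.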

Liquidations

Liquidation is a core part of any protocol involving leverage, and the granularity of price feed updates plays an important role in determining how efficiently positions are liquidated.

In the case of a threshold-based push oracle, when the spot price reaches a position's liquidation threshold but the move does not satisfy the preset update parameters of the oracle's price feed, the coarse granularity of price updates results in a missed liquidation opportunity. This creates negative externalities in the form of market inefficiency.
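A minimal sketch of this situation for a long position follows. The function names and the 0.5% threshold are assumptions for illustration, and the heartbeat is ignored for simplicity.

```python
def feed_would_update(
    last_onchain: float, spot: float, deviation_threshold: float = 0.005
) -> bool:
    """True if the spot move is large enough to trigger a push update."""
    return abs(spot - last_onchain) / last_onchain >= deviation_threshold


def liquidation_is_missed(
    last_onchain: float,
    spot: float,
    liquidation_price: float,
    deviation_threshold: float = 0.005,
) -> bool:
    """For a long position: liquidatable at spot, but invisible on-chain."""
    if spot > liquidation_price:
        return False  # position is healthy at the real market price
    if last_onchain <= liquidation_price:
        return False  # the on-chain price already allows liquidation
    return not feed_would_update(last_onchain, spot, deviation_threshold)


# Spot fell 0.35% to 1993, below the 1995 liquidation price, but the
# 0.5% threshold has not been hit, so the feed still reads 2000.
print(liquidation_is_missed(last_onchain=2000.0, spot=1993.0, liquidation_price=1995.0))
```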

When a liquidation occurs, the application often pays out part of the liquidated collateral, and sometimes an additional reward, to the user who initiated the liquidation. For example, in 2022 Aave paid out $37.9 million in liquidation rewards on mainnet alone [7]. This clearly overcompensates third parties and results in poor execution for users. Additionally, when there is extractable value, the ensuing gas wars drain value from the application into the MEV supply chain.

Design space and considerations

With the above problems in mind, the following sections discuss various push-based, pull-based, and alternative designs, how effective each is at solving these problems, and the trade-offs involved. The trade-offs can take the form of additional centralization and trust assumptions, or a poorer user experience.

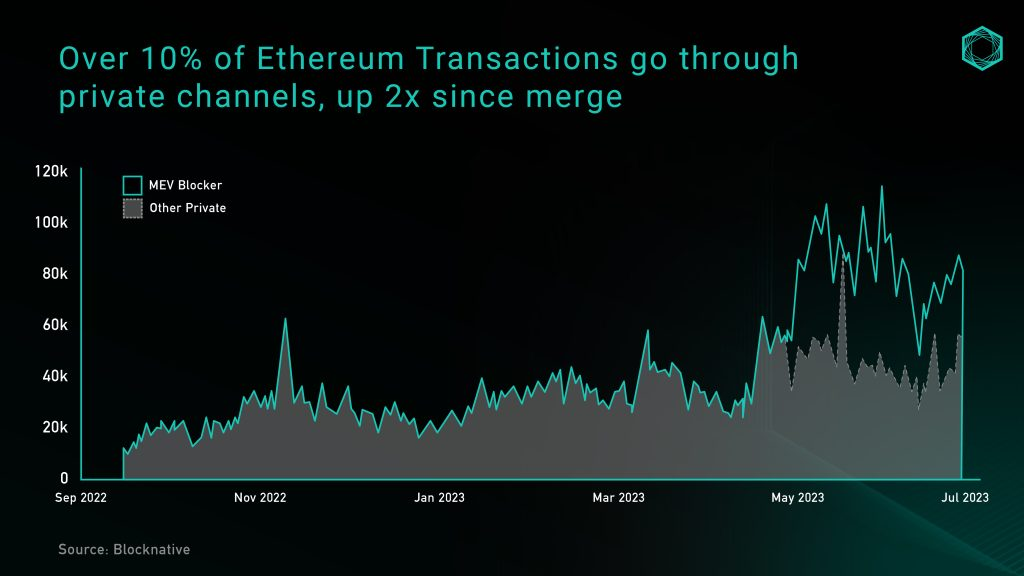

Order Flow Auctions (OFA) dedicated to oracles

Order flow bidding OFA has emerged as a solution to eliminate the negative externalities generated by MEV. Broadly speaking, OFA is a general-purpose third-party bidding service to which users can send orders (trades or intents), and searchers who extract MEV can bid for exclusive rights to run strategies on their orders. A significant portion of the auction revenue is returned to users to compensate them for the value they created in these orders. The adoption rate of OFA has surged recently, with more than 10% of Ethereum transactions conducted through private channels (private RPC/OFAs) (Figure 3), which is believed to further catalyze growth.

Figure 3: Consolidated daily number of private Ethereum transactions. Source: Blocknative

The problem with applying a general-purpose OFA to oracle updates is that the oracle has no way of knowing whether a standard rule-based update will produce any OEV; if it does not, sending the update through the auction only adds latency. On the other hand, the simplest way to streamline OEV capture and minimize latency is to route all oracle order flow to a single dominant searcher. But this obviously carries significant centralization risk, may encourage rent-seeking and censorship, and leads to a poor user experience.

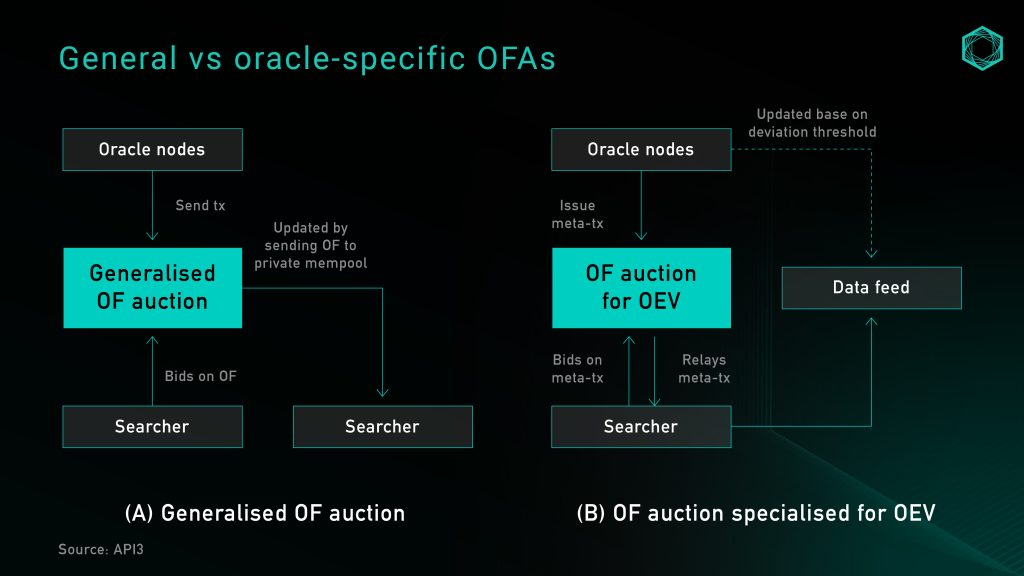

Figure 4: General OFA and Oracle-specific OFA

In an oracle-specific OFA, the existing rule-based price updates do not go through the auction and are still made in the public mempool. This allows the oracle's price updates, and any extractable value that results from them, to remain in the application layer. As a by-product, it also increases the granularity of the data, since searchers can request data feed updates without requiring oracle nodes to bear the additional cost of more frequent updates.

Oracle-specific OFAs are ideal for liquidations because they bring finer-grained price updates, maximize the capital returned to borrowers whose positions are liquidated, reduce the protocol rewards paid to liquidators, and retain within the protocol the value extracted from bidders for redistribution to users. They also solve, to some extent though not entirely, the problems of front-running and arbitrage. Under perfect competition and a first-price sealed-bid auction, bids should approach the value of the opportunity less the block space cost of executing it [8], so that the value otherwise extracted by front-running the OEV data feed is captured by the auction, while the finer granularity of price feed updates reduces arbitrage opportunities.

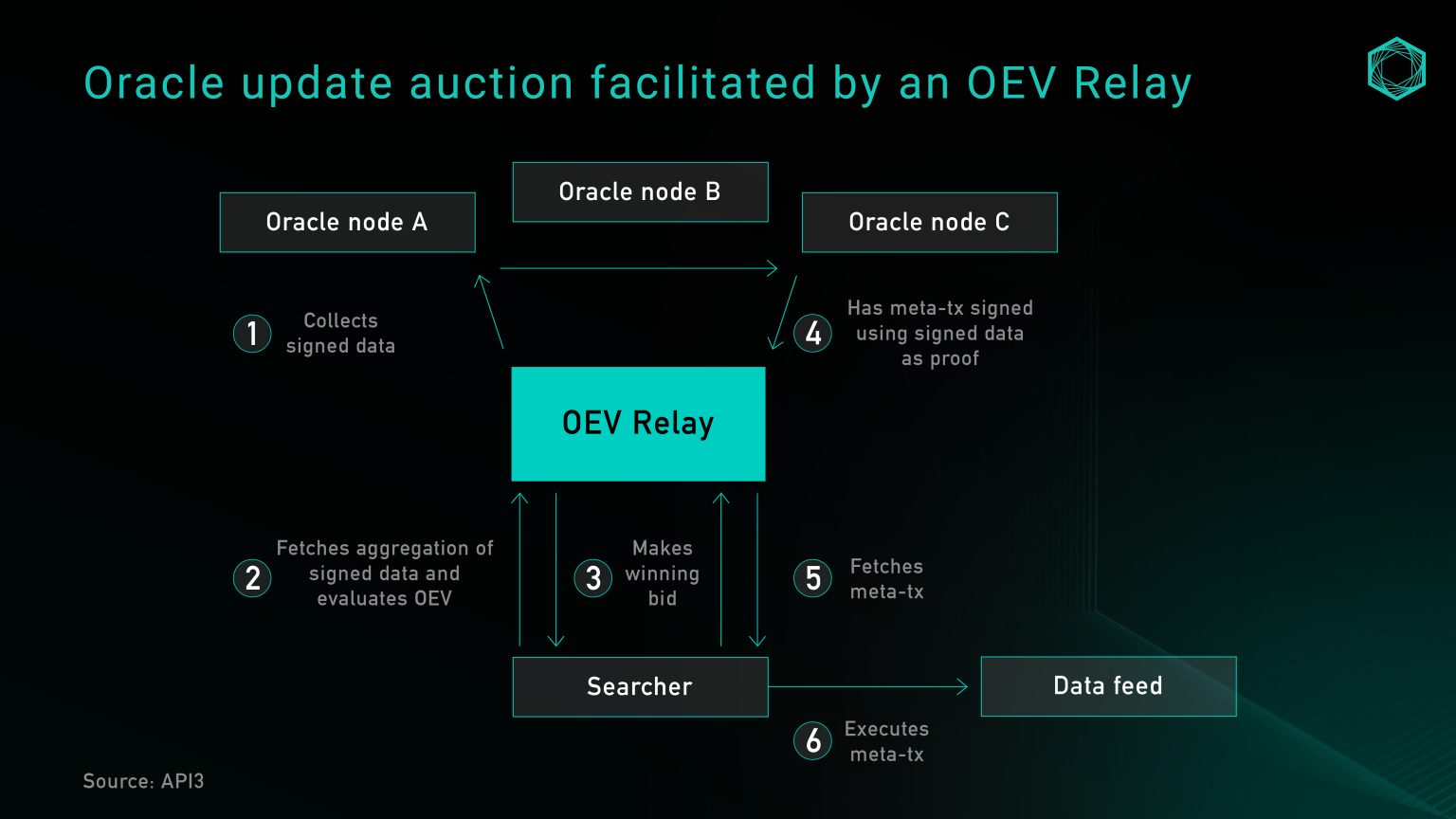

Currently, implementing an oracle-specific OFA requires either joining a third-party bidding service (such as OEV-Share) or building a bidding service as part of the application. Inspired by Flashbots, API 3 utilizes the OEV relay (Figure 5) as an API designed to perform DoS protection services for bidding. This relay is responsible for collecting meta-transactions from oracles, collating and aggregating searcher bids, and redistributing the proceeds in a trustless manner without controlling bids. When a searcher wins a bid, updating the data source can only rely on transferring the bid amount to the protocol-owned proxy contract, which then updates the price source with the signed data provided by the relayer.

Figure 5: OEV repeater for API 3

Alternatively, protocols can cut out the middleman and build their own auction service to capture all of the value extracted from OEV. BBOX is one upcoming protocol that hopes to embed an auction into its liquidation mechanism to capture OEV and return it to the application and its users [9].
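The auction logic discussed in this section can be sketched as a first-price sealed-bid auction over the right to trigger one oracle update. This is a toy model under stated assumptions: the function names, the reserve price, and the 80/20 split between the protocol and the auction operator are hypothetical and do not describe API3's or any other service's actual implementation.

```python
from typing import Dict, Optional, Tuple


def competitive_bid(opportunity_value: float, execution_cost: float) -> float:
    """Bid of a searcher under perfect competition: value net of block space cost."""
    return max(opportunity_value - execution_cost, 0.0)


def run_first_price_auction(
    bids: Dict[str, float], reserve: float = 0.0
) -> Optional[Tuple[str, float]]:
    """Sealed-bid, first-price: the highest bidder wins and pays their own bid."""
    eligible = {searcher: bid for searcher, bid in bids.items() if bid >= reserve}
    if not eligible:
        return None
    winner = max(eligible, key=lambda searcher: eligible[searcher])
    return winner, eligible[winner]


def split_proceeds(payment: float, protocol_share: float = 0.8) -> Tuple[float, float]:
    """Split the winning payment between the protocol and the auction operator."""
    to_protocol = payment * protocol_share
    return to_protocol, payment - to_protocol


# Three searchers value the same liquidation at 100 but face different costs.
bids = {
    "searcher_a": competitive_bid(100.0, 20.0),
    "searcher_b": competitive_bid(100.0, 5.0),
    "searcher_c": competitive_bid(100.0, 10.0),
}
result = run_first_price_auction(bids)
if result is not None:
    winner, payment = result
    print(winner, payment, split_proceeds(payment))
```

In this model the most efficient searcher wins, and most of the opportunity's value flows back to the protocol instead of being spent on gas wars.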

Run a central node or Keeper

An early idea for combating OEV, stemming from the first wave of perpetual contract decentralized exchanges, was to run a centralized Keeper network that aggregates prices received from third-party sources (such as centralized exchanges), and then to use data feeds like Chainlink as a fallback or circuit breaker. This model was popularized by GMX v1 [10] and its many subsequent forks. Its main value proposition is that, because the Keeper network is run by a single operator, it is fully protected against front-running.

While this solves many of the problems mentioned above, there are obvious centralization concerns. A centralized Keeper system can determine execution prices without proper verification of its pricing sources and aggregation method. In the case of GMX v1, the Keeper is not an on-chain or transparent mechanism, but a program running on a centralized server and signing with the team's address. The Keeper's core role is not only to execute orders but also to "decide" the transaction price according to its own preset definitions, and there is no way to verify the authenticity or source of the execution prices used.

Automated Keeper Network and Chainlink Data Streams

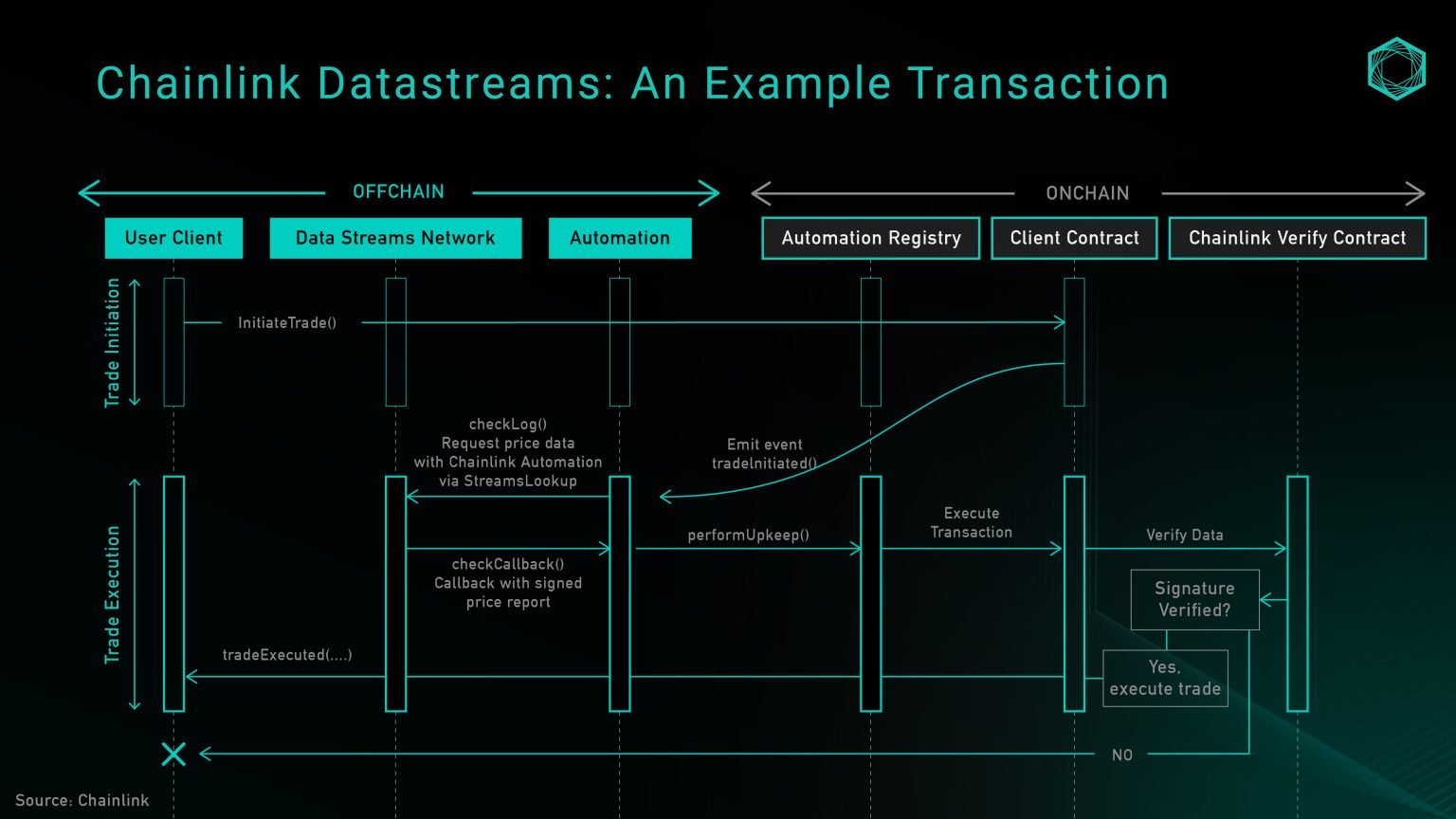

The solution to the above-mentioned centralization risks posed by a single-operator Keeper network is to use third-party service providers to build a more decentralized automation network. Chainlink Automation is one such product, and it provides this service in conjunction with Chainlink Data Streams, a new pull-based, low-latency oracle. The product was recently released and is currently in closed beta, but GMX v2 11 is already using it and it can serve as a reference for systems using this design.

At a high level, the Chainlink data flow consists of three main parts: data DON (decentralized oracle network), automated DON, and on-chain verification contracts 12 . Data DON is an off-chain data network with an architecture similar to how Python maintains and aggregates data. Automated DON is a network of guardians secured by the same node operators of Data DON, used to extract prices from on-chain Data DON. Finally, the validator contract is used to verify that the off-chain signature is correct.

Figure 6: Chainlink data flow architecture

The figure above shows the transaction flow for calling the open-trade function, in which the Automation DON is responsible for fetching the price from the Data DON and updating on-chain storage. Currently, the endpoints for querying the Data DON directly are limited to whitelisted users, so a protocol can either offload Keeper maintenance to the Automation DON or run its own Keeper. As the product matures, this is expected to shift gradually to a permissionless structure.

On the security side, the trust assumptions of relying on the Automation DON are the same as those of using the Data DON alone, which is a significant improvement over a single-Keeper design. However, if the power to update the price feed is handed to the Automation DON, the opportunity for value extraction is left exclusively to the nodes in the Keeper network. This in turn means the protocol trusts Chainlink node operators (mainly institutions) to protect their social reputation and not front-run users, much like trusting Lido node operators to protect their reputation and not abuse their large market share to monopolize block space.

Pull: Delayed Settlement

One of the biggest changes in Synthetix perps v2 is the introduction of Pyth price feeds for perpetual contract settlement [13]. This allows orders to be settled at either the Chainlink or the Pyth price, provided the two do not deviate by more than a predefined threshold and the timestamp passes a staleness check. However, as mentioned above, simply switching to a pull-based oracle does not solve every protocol's OEV-related issues. To address front-running, a "last look" pricing mechanism can be introduced in the form of delayed orders. In practice, this splits a user's market order into two transactions:

Transaction #1: The user submits an on-chain "intent" to open a market order, providing standard order parameters such as size, leverage, collateral, and slippage tolerance. An additional Keeper fee is also paid to reward the Keeper for executing transaction #2.

Transaction #2: A Keeper picks up the order submitted in transaction #1, requests the latest Pyth price feed, and calls the Synthetix execution contract in a single transaction. The contract checks predefined parameters such as staleness and price slippage. If both pass, the order is executed, the on-chain price storage is updated, and the position is opened. The Keeper collects the fee to cover the gas it spends and the cost of maintaining the network.

This implementation gives users no opportunity to adversely select the price submitted on-chain, effectively eliminating front-running and arbitrage opportunities against the protocol. The trade-off of this design is user experience: executing a market order now takes two transactions, and the user must compensate the Keeper for gas and share the cost of updating the oracle's on-chain storage. What used to be a fixed fee of 2 sUSD was recently changed to a dynamic fee based on the Optimism gas oracle plus a premium, which varies with layer 2 network activity. Either way, this can be seen as a solution that improves LP profitability at the expense of the trader's user experience.
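The two-transaction flow can be sketched as follows. This is a simplified model loosely based on the description above, not Synthetix's contract code: the `DelayedOrder` type, the `min_delay` and `max_age` parameters, and their default values are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DelayedOrder:
    size: float            # positive = long, negative = short
    expected_price: float  # price the user saw when committing (transaction #1)
    max_slippage: float    # e.g. 0.01 for 1%
    commit_time: int       # unix seconds at which the intent landed on-chain


def settle_delayed_order(
    order: DelayedOrder,
    oracle_price: float,
    publish_time: int,
    now: int,
    min_delay: int = 2,   # hypothetical: price must be published after the commit
    max_age: int = 60,    # hypothetical staleness limit
) -> float:
    """Transaction #2: a Keeper executes the order at a price the user never saw."""
    if publish_time < order.commit_time + min_delay:
        raise ValueError("price was published too early to be used for this order")
    if now - publish_time > max_age:
        raise ValueError("price is stale")
    slippage = abs(oracle_price - order.expected_price) / order.expected_price
    if slippage > order.max_slippage:
        raise ValueError("slippage tolerance exceeded")
    return oracle_price  # fill price for the position


order = DelayedOrder(size=1.0, expected_price=2000.0, max_slippage=0.01, commit_time=100)
fill = settle_delayed_order(order, oracle_price=2005.0, publish_time=103, now=104)
print(f"filled at {fill}")
```

The key design choice is the first check: because only prices published after the commitment are accepted, the user cannot pick a price that is already known to be favorable.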

Pull: Optimistic Settlement

Since delayed orders impose additional network fees on users (proportional to the layer 2 network's DA fees), we brainstormed another order settlement model, which we call "optimistic settlement". This model has the potential to reduce user costs while preserving decentralization and the security of the protocol. As the name suggests, the mechanism lets traders execute market orders atomically: the system optimistically accepts all prices and provides a window during which searchers can submit evidence that an order was placed maliciously. This section outlines the different versions of this idea, our thought process, and the unresolved issues.

Our initial idea was a mechanism that lets users submit a price via parsePriceFeedUpdates when opening a market order, and then lets the user or any third party submit a settlement transaction with price feed data, with the trade completed at that price once the settlement transaction is confirmed. At settlement, any negative difference between the two prices is booked to the user's profit and loss as slippage. The advantages of this approach include a lower cost burden on users and reduced front-running risk: users no longer have to pay a premium to reward Keepers, and because the settlement price is not known when the order is submitted, front-running risk remains manageable. However, this still introduces a two-step settlement process, which is one of the drawbacks we identified in Synthetix's delayed settlement model. In most cases, if the volatility between order placement and settlement does not exceed the system-defined threshold for profitable front-running, the additional settlement transaction is unnecessary.

Another solution that avoids this problem is to let the system accept orders optimistically and then open a permissionless challenge period, during which anyone can submit evidence that the price deviation between the price timestamp and the block timestamp allowed a profitable front-running trade.

The specific operations are as follows:

Users create orders based on the current market price. They then submit the price, together with the embedded Pyth price feed byte data, in the order creation transaction.

The smart contract optimistically verifies and stores this information.

After an order is confirmed on-chain, there is a challenge period during which searchers can submit proof of adverse selection. This proof would confirm that the trader used outdated price feed data with the intention of arbitraging the system. If the system accepts the proof, the difference is applied to the trader's execution price as slippage, and the excess value is given to the Keeper as a reward.

After the challenge period, the system considers all prices valid.

This model has two advantages. First, it reduces the cost burden on users: they only pay gas for order creation and the oracle update in the same transaction, with no additional settlement transaction required. Second, it deters front-running and protects the integrity of the liquidity pool, while sustaining a healthy Keeper network that is financially incentivized to submit proofs of front-running to the system.
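Since this mechanism is only a proposal, the following is a purely hypothetical sketch of the challenge step. The `OptimisticOrder` type, the rule for what counts as a valid proof (a price published after the one the trader used, but no later than the order's block), and the 30-second window are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OptimisticOrder:
    is_long: bool
    size: float
    fill_price: float  # price the trader submitted with the order
    price_time: int    # publish time of that price (unix seconds)
    block_time: int    # timestamp of the block that included the order


def challenge_payout(
    order: OptimisticOrder,
    proof_price: float,
    proof_time: int,
    now: int,
    challenge_window: int = 30,  # hypothetical length of the challenge period
) -> float:
    """Slippage charged to the trader if the challenge succeeds, else 0.0."""
    if now > order.block_time + challenge_window:
        return 0.0  # challenge period is over, the fill price is final
    if not (order.price_time < proof_time <= order.block_time):
        return 0.0  # proof must be fresher than the used price, but not from the future
    if order.is_long:
        advantage = proof_price - order.fill_price  # bought below the fresher price
    else:
        advantage = order.fill_price - proof_price  # sold above the fresher price
    return max(advantage, 0.0) * order.size


order = OptimisticOrder(is_long=True, size=2.0, fill_price=2000.0, price_time=100, block_time=112)
print(challenge_payout(order, proof_price=2010.0, proof_time=111, now=120))
```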

However, there are still some issues to be resolved before putting this idea into practice:

Defining "adverse selection": How does the system distinguish between users who submit stale prices because of network delays and users who deliberately arbitrage? An initial idea is to measure the volatility within the staleness check period (e.g. 15 seconds); if the volatility exceeds the net execution fee, the order is flagged as a potential exploit.

Setting an appropriate challenge period: Considering that toxic order flow may only be open for a short period of time, what is an appropriate time window for a Keeper to challenge a price? Batch proving may be more cost-effective, but given how unpredictable order flow is over time, it is difficult to time batches so that every price is either proven or has had sufficient time to be challenged.

Economic rewards for Keepers: For submitting proofs to make sense for economically motivated Keepers, the reward for a winning proof must exceed the gas cost of submitting it. Because order sizes vary, this cannot be guaranteed.

Is a similar mechanism needed for closing orders? If so, how would it degrade the user experience?

Ensuring that "unreasonable" slippage does not fall on users: In the event of a flash crash, there may be a very large price difference between order creation and on-chain confirmation. Some kind of fallback or circuit breaker may be needed, for example using Pyth's EMA price to check that the price feed is stable before it is used.
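Two of the open questions above, flagging potential adverse selection and Keeper economics, can be expressed as simple checks. This is only a sketch of the initial idea: using the high-low range as the volatility measure, and the `reward_share` parameter, are assumptions rather than a settled design.

```python
from typing import Sequence


def is_potential_exploit(prices_in_window: Sequence[float], fee_rate: float) -> bool:
    """Flag an order if the price range inside the staleness window exceeds fees.

    The range (high - low) / low is used as a simple volatility proxy.
    """
    low, high = min(prices_in_window), max(prices_in_window)
    price_range = (high - low) / low
    return price_range > fee_rate


def challenge_is_profitable(
    recovered_value: float, gas_cost: float, reward_share: float = 1.0
) -> bool:
    """A rational Keeper only submits a proof if the reward beats the gas cost."""
    return recovered_value * reward_share > gas_cost


# Prices observed during a 15-second window, with a 0.2% net execution fee.
print(is_potential_exploit([2000.0, 2003.0, 2010.0], fee_rate=0.002))
# A small order may not be worth challenging even if it was toxic.
print(challenge_is_profitable(recovered_value=0.2, gas_cost=0.5))
```

The second check shows why small toxic orders are a problem for this design: below a certain order size, no economically motivated Keeper will bother to challenge.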

ZK Co-processors - Another form of data consumption

Another direction worth exploring is the use of ZK co-processors, which are designed to read on-chain state, perform complex computations off-chain, and provide a proof of how the computation was carried out that can be verified permissionlessly. Projects such as Axiom enable contracts to query historical blockchain data, perform computations off-chain, and submit a ZK proof that the result was correctly computed from valid on-chain data. Co-processors open up the possibility of building custom, manipulation-resistant TWAP oracles using historical prices from multiple DeFi-native liquidity sources (such as Uniswap + Curve).
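As a rough illustration of the computation such a co-processor might prove, the sketch below calculates a time-weighted average price (TWAP) from historical observations and combines several sources with a median. The data layout and the function names are assumptions, and the ZK proof generation itself is out of scope here.

```python
from statistics import median
from typing import Dict, List, Tuple

Observation = Tuple[int, float]  # (unix timestamp, price)


def twap(observations: List[Observation]) -> float:
    """Time-weighted average price; each price holds until the next observation."""
    obs = sorted(observations)
    if len(obs) < 2 or obs[-1][0] == obs[0][0]:
        raise ValueError("need observations spanning a non-zero time interval")
    weighted_sum = 0.0
    for (t0, price), (t1, _) in zip(obs, obs[1:]):
        weighted_sum += price * (t1 - t0)
    return weighted_sum / (obs[-1][0] - obs[0][0])


def combined_twap(sources: Dict[str, List[Observation]]) -> float:
    """Median of per-source TWAPs, so one manipulated pool cannot set the price alone
    when there are at least three sources."""
    return median(twap(obs) for obs in sources.values())


history = {
    "uniswap": [(0, 100.0), (10, 110.0), (30, 105.0)],
    "curve": [(0, 102.0), (30, 101.0)],
}
print(f"combined TWAP: {combined_twap(history):.2f}")
```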

Compared with traditional oracles, which currently only provide the latest asset price (although Pyth does provide an EMA price that developers can use as a reference check on the latest price), ZK co-processors would securely expand the range of data available to dApps. Applications could then introduce more business logic that works with historical blockchain data to improve protocol security or enhance the user experience.

However, ZK co-processors are still at an early stage of development, and some bottlenecks remain, such as:

In a co-processor environment, fetching and computing over large amounts of blockchain data may require long proving times

Providing only blockchain data does not address the need for secure communication with non-Web3 applications

Oracle-less solutions – the future of DeFi?

Another way to solve this problem is to eliminate the need for external price feeds by designing primitives from scratch, thereby removing DeFi's dependence on oracles. The latest development in this area is the use of various AMM LP tokens as a means of pricing. The core idea is that an LP position in a constant function market maker is a token representing preset weights of two assets, together with an automatic pricing formula for those two tokens (i.e. xy=k). By using LP tokens (as collateral, as the basis of loans, or, in a recent use case, by moving v3 LP positions to different ticks), a protocol can obtain information that would normally come from an oracle. The result is a new wave of oracle-less solutions that are free of the challenges above. Examples of applications built in this direction include:

Panoptic is building a perpetual, oracle-less options protocol that leverages Uniswap v3 concentrated liquidity positions. Because a concentrated liquidity position is 100% converted into the base asset when the spot price exceeds the upper bound of the LP position, the payoff to liquidity providers closely resembles the payoff to the seller of a put option. The options market therefore works by liquidity providers depositing LP assets or positions, and option buyers and sellers borrowing liquidity and moving it in or out of range, thereby generating dynamic option payoffs. Since the loans are denominated in LP positions, no oracle is required for settlement.

Infinity Pools is leveraging Uniswap v3 concentrated liquidity positions to build a leveraged trading platform with no liquidations and no oracles. Uniswap v3 liquidity providers can lend out their LP tokens; traders deposit some collateral, borrow the LP tokens, and redeem them for the asset matching their directional trade. The loan at redemption is denominated in either the base asset or the quote asset, depending on the price at redemption, and can be calculated directly by checking the LP composition on Uniswap, eliminating reliance on oracles.

Timeswap is building a fixed-term, liquidation-free, oracle-less lending platform. It is a three-sided market consisting of lenders, borrowers, and liquidity providers. Unlike traditional lending markets, it uses "time-based" liquidation rather than "price-based" liquidation. On a decentralized exchange, liquidity providers are automatically set up to always buy from sellers and sell to buyers; liquidity providers in Timeswap play a similar role, always lending to borrowers and borrowing from lenders. They also bear the risk of loan defaults and have priority in receiving forfeited collateral as compensation.
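The xy=k pricing formula that these oracle-less designs build on can be sketched as below. This is a generic constant-product model, not the code of any of the protocols listed above; the function names and the 0.3% default fee are assumptions for illustration.

```python
def spot_price(reserve_x: float, reserve_y: float) -> float:
    """Marginal price of X in units of Y implied by the pool reserves."""
    return reserve_y / reserve_x


def amount_out(reserve_x: float, reserve_y: float, dx: float, fee: float = 0.003) -> float:
    """Y received for selling dx of X, keeping x * y = k on the post-fee input."""
    dx_after_fee = dx * (1.0 - fee)
    return reserve_y * dx_after_fee / (reserve_x + dx_after_fee)


def lp_position_value(share: float, reserve_x: float, reserve_y: float) -> float:
    """Value in Y of an LP token representing `share` of the pool."""
    return share * (reserve_x * spot_price(reserve_x, reserve_y) + reserve_y)


# A pool holding 100 X and 200,000 Y prices X at 2,000 Y with no external feed.
print(spot_price(100.0, 200_000.0))
print(amount_out(100.0, 200_000.0, dx=1.0))
print(lp_position_value(0.1, 100.0, 200_000.0))
```

Because both the price and the composition of an LP position can be read directly from the pool, a protocol that denominates loans in LP positions does not need an external feed for settlement.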

Conclusion

Pricing data remains an important part of many decentralized applications, and the total value secured by oracles continues to grow over time, further affirming their product-market fit. The purpose of this article is to inform readers and provide an overview of the OEV-related challenges we currently face, as well as the design space for addressing them, spanning push-based and pull-based oracles and other designs that use AMM liquidity positions or off-chain co-processors.

We're excited to see energetic developers working to solve these tough design challenges. If you are also working on a disruptive project in this space, we would love to hear from you!

References and Acknowledgments

Thanks to Jonathan Yuen and Wintersoldier for their contributions and conversations, which greatly contributed to this article.

Thanks to Erik Lie, Richard Yuen (Hailstone), Marc, Mario Bernardi, Anirudh Suresh (Pyth), Ugur Mersin (API3 DAO), and Mimi (Timeswap) for their valuable comments, feedback, and reviews.

https://defillama.com/oracles (14 Nov)

OEV Litepaper https://drive.google.com/file/d/1wuSWSI8WY9ChChu2hvRgByJSyQlv_8SO/edit

Frontrunning on Synthetix: A History by Kain Warwick https://blog.synthetix.io/frontrunning-synthetix-a-history/

https://docs.pyth.network/documentation/pythnet-price-feeds/on-demand

https://docs.pyth.network/documentation/solana-price-feeds/best-practices#latency

Aave liquidation figures https://dune.com/queries/3247324

https://drive.google.com/file/d/1wuSWSI8WY9ChChu2hvRgByJSyQlv_8SO/edit

https://gmx-io.notion.site/gmx-io/GMX-Technical-Overview-47fc5ed832e243afb9e97e8a4a036353

https://gmxio.substack.com/p/gmx-v2-powered-by-chainlink-data

Appendix

Definition: Push vs. pull oracles

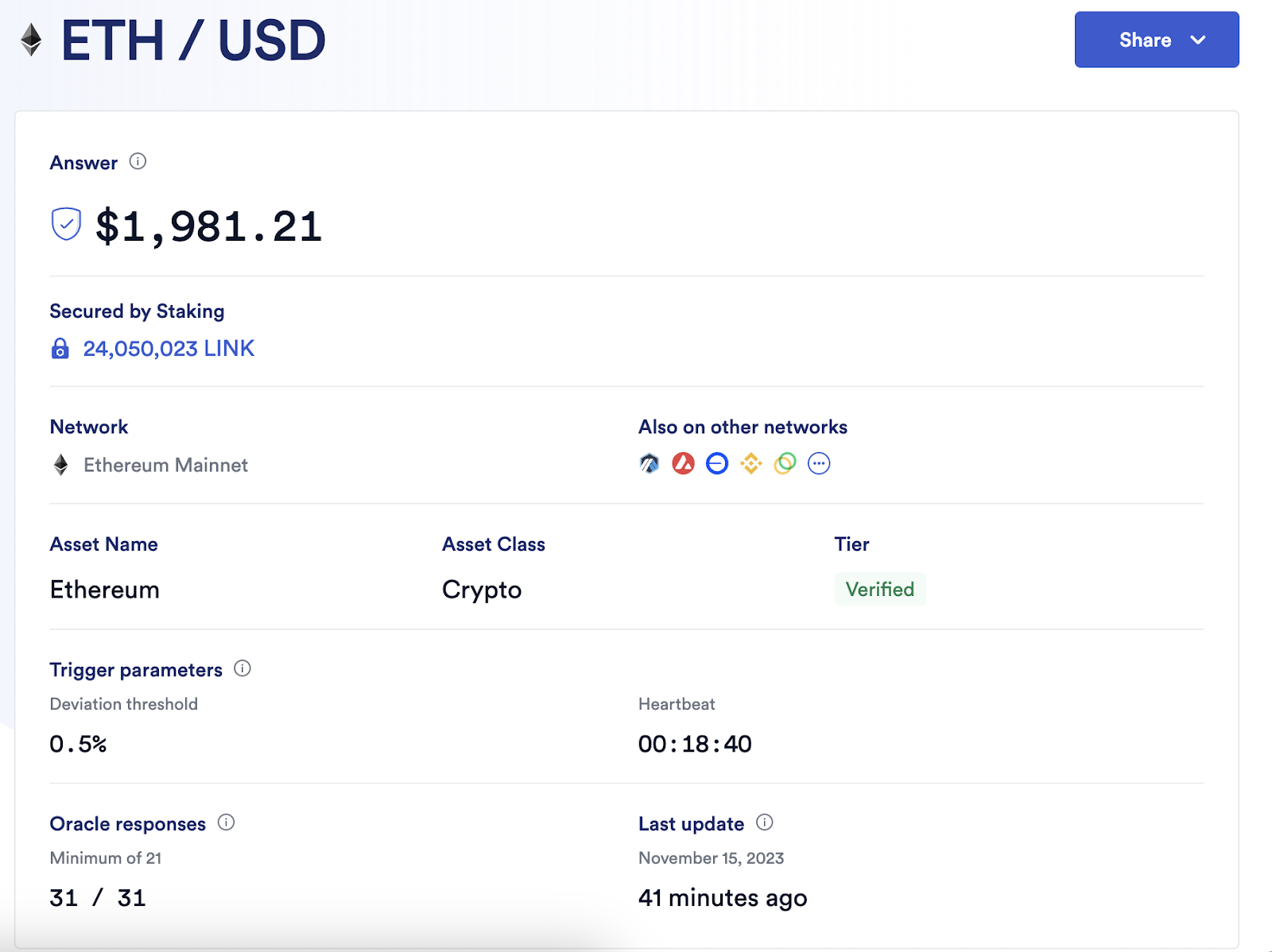

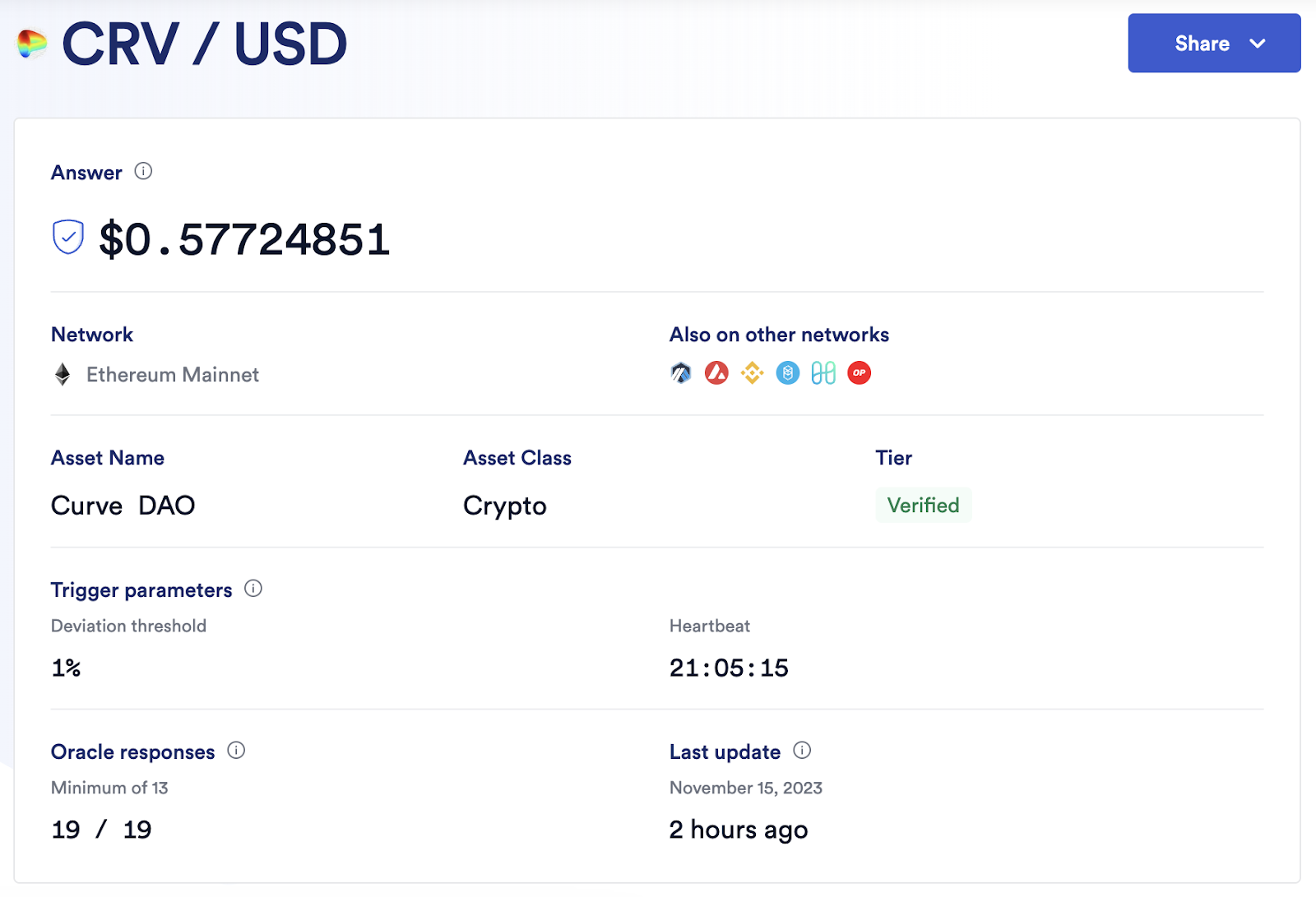

Push oracles maintain prices off-chain in a P2P network and have their nodes update the on-chain price based on predefined conditions. Taking Chainlink as an example, price updates are driven by two trigger parameters: the deviation threshold and the heartbeat. The ETH/USD price feed on Ethereum, for example, is updated whenever the off-chain price deviates by 0.5% from the latest on-chain price, or when the 1-hour heartbeat timer reaches zero.

In this case, the oracle operator must pay a transaction fee for every price update, which creates a trade-off between cost and scalability. Adding more price feeds, supporting additional blockchains, or updating more frequently all incur additional transaction costs. As a result, long-tail assets, which have higher trigger parameters, inevitably have less reliable price feeds. Take CRV/USD as an example: a new price is written on-chain only when the 1% deviation threshold is reached or the 24-hour heartbeat elapses, which means that if the price does not deviate by more than 1% within 24 hours, there will be only one new price update every 24 hours. Intuitively, the lack of granularity in price feeds for long-tail assets means applications must account for additional risk factors when creating markets for these assets, which explains why the vast majority of DeFi activity still revolves around the most liquid, largest-market-cap tokens.
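The two trigger parameters can be captured in a single predicate. The function below is a simplified sketch of the rule as described in this appendix, using the ETH/USD and CRV/USD parameters quoted above; it is not Chainlink's implementation, and the function and parameter names are made up for the example.

```python
def should_push_update(
    onchain_price: float,
    offchain_price: float,
    seconds_since_update: int,
    deviation_threshold: float,
    heartbeat_seconds: int,
) -> bool:
    """Push a new price if it moved enough or if the heartbeat has expired."""
    deviated = abs(offchain_price - onchain_price) / onchain_price >= deviation_threshold
    heartbeat_expired = seconds_since_update >= heartbeat_seconds
    return deviated or heartbeat_expired


# ETH/USD: 0.5% deviation threshold, 1-hour heartbeat.
print(should_push_update(2000.0, 2009.0, 600, 0.005, 3600))    # 0.45% move after 10 minutes
# CRV/USD: 1% deviation threshold, 24-hour heartbeat.
print(should_push_update(0.50, 0.504, 86_400, 0.01, 86_400))   # 0.8% move after 24 hours
```

In the first call neither condition is met, so the on-chain price stays stale; in the second, only the heartbeat forces an update.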

In contrast, pull oracles allow prices to be pulled on-chain on demand. Pyth is the most prominent example today: it streams price updates off-chain, signs each update so that anyone can verify its authenticity, and maintains aggregate prices on Pythnet, a private blockchain based on Solana's code. When an update is needed, the data is relayed through Wormhole, verified on Pyth, and then pulled on-chain permissionlessly.

The figure above shows the structure of a Pyth price feed: when the on-chain price needs to be updated, the user requests the update through the Pyth API. The verified price on Pythnet is sent to the Wormhole contract, which observes it and creates and sends a signed VAA that can be verified on any blockchain where the Pyth contracts are deployed.

Disclaimer: This article does not constitute investment advice. Users should consider whether any opinions, views or conclusions in this article are consistent with their specific situations, and comply with the relevant laws and regulations of the country and region where they are located.