Produced by: Odaily

Compiled by: Loopy Lu

Today, Vitalik Buterin published a new article in the Ethereum community titled "What might a built-in ZK-EVM look like?", which explores how Ethereum could build its own ZK-EVM into future network upgrades.

As is well known, while the Ethereum mainnet has evolved slowly, many mainstream Layer 2 networks already run their own ZK-EVMs. Once the Ethereum mainnet builds in its own ZK-EVM, will the roles of the mainnet and Layer 2s conflict? How can Layer 1 and Layer 2 divide the work and cooperate effectively?

In this article, Vitalik Buterin highlights the importance of compatibility, data availability, and auditability, explores the possibility of stateful provers for greater efficiency, and discusses the role Layer 2 projects would still play in providing fast pre-confirmations and MEV mitigation strategies. The article reflects on how to advance Ethereum with ZK-EVMs while preserving the network's flexibility.

Odaily compiled the original text as follows:

Layer-2 EVM protocols on top of Ethereum, including both optimistic rollups and ZK rollups, rely on EVM verification. However, this requires them to trust a large codebase, and if that codebase has a bug, these VMs are at risk of being hacked. It also means that ZK-EVMs, even those that aim to remain fully equivalent to the L1 EVM, need some form of governance to copy L1 EVM changes into their own EVM implementations.

This is not ideal, because these projects are replicating functionality that already exists in the Ethereum protocol: Ethereum governance is already responsible for upgrades and bug fixes, and a ZK-EVM essentially does the job of verifying Layer 1 Ethereum blocks. Over the next few years, we expect light clients to become increasingly powerful and soon be able to use ZK-SNARKs to fully verify L1 EVM execution. At that point, the Ethereum network will effectively have a built-in ZK-EVM. So the question arises: why not make this ZK-EVM natively available to rollups as well?

This article describes several versions of a "built-in ZK-EVM" and analyzes their trade-offs, design challenges, and reasons not to go in certain directions. The benefits of implementing a feature in the protocol should be weighed against the benefits of letting the ecosystem handle it and keeping the base protocol simple.

What key properties do we want from a built-in ZK-EVM?

Basic functionality: verify Ethereum blocks. The protocol feature (it is not yet decided whether it will be an opcode, a precompile, or some other mechanism) should accept at least a pre-state root, a block, and a post-state root as inputs, and verify that the post-state root is actually the result of executing the block on top of the pre-state root.

Compatibility with Ethereum's multi-client philosophy: we want to avoid building in a single proof system, and instead allow different clients to use different proof systems.

This also means a few things:

Data availability requirements: for any EVM execution proven with the built-in ZK-EVM, we want to ensure that the underlying data is available, so that provers using different proof systems can re-prove the execution and clients relying on those proof systems can verify the newly generated proofs.

Proofs live outside the EVM and block data structures: the ZK-EVM feature would not literally take a SNARK as an input inside the EVM, since different clients will expect different types of SNARKs. Instead, it might work similarly to blob verification: transactions can include claims that need to be proven (pre-state, block body, post-state), the contents of these claims can be accessed by an opcode or precompile, and client consensus rules separately check data availability and the existence of a proof for each claim made in the block.

Auditability: if any execution is proven, we want the underlying data to be available so that users and developers can inspect it if anything goes wrong. This is one more reason why data availability requirements matter.

Upgradeability: if a bug is found in a particular ZK-EVM scheme, we want to be able to fix it quickly, ideally without a hard fork. This is yet another reason why proofs should live outside the EVM and block data structures.

Support for approximate EVMs: one of the attractions of L2s is the ability to innovate at the execution layer and extend the EVM. If an L2's VM differs only slightly from the EVM, it would be nice if the L2 could use the native in-protocol ZK-EVM for the parts that are identical to the EVM, and rely on its own code only for the parts that differ. This could be done by designing the ZK-EVM feature so that the caller can specify a bitfield, a list of opcodes, or a list of addresses to be handled by an externally supplied table instead of by the EVM itself. Gas costs could also be made customizable to some extent.
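The bitfield idea can be illustrated with a toy interpreter. This is a minimal sketch under loudly stated assumptions: the instruction format, the three opcodes, and the `l2_exp` replacement are all hypothetical and far simpler than real EVM bytecode. Opcodes whose bit is set are routed to an L2-supplied table (which the L2 would have to prove itself), and everything else goes to the handlers standing in for the built-in ZK-EVM.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy instruction format (opcode, optional immediate); NOT real EVM bytecode.
Instr = Tuple[int, Optional[int]]
Handler = Callable[[List[int], Optional[int]], None]

PUSH1, ADD, MUL = 0x60, 0x01, 0x02
WORD = 2**256


def op_push(stack: List[int], imm: Optional[int]) -> None:
    stack.append(imm)


def op_add(stack: List[int], _: Optional[int]) -> None:
    stack.append((stack.pop() + stack.pop()) % WORD)


def op_mul(stack: List[int], _: Optional[int]) -> None:
    stack.append((stack.pop() * stack.pop()) % WORD)


# Stand-in for the part handled by the built-in ZK-EVM.
BUILTIN: Dict[int, Handler] = {PUSH1: op_push, ADD: op_add, MUL: op_mul}


def make_bitfield(opcodes: List[int]) -> int:
    """Pack opcode bytes (0x00-0xff) into a 256-bit bitfield."""
    bits = 0
    for op in opcodes:
        bits |= 1 << op
    return bits


def run(program: List[Instr], overridden: int,
        external: Dict[int, Handler]) -> Tuple[List[int], List[int]]:
    """Execute a program; opcodes whose bit is set go to the L2-supplied table."""
    stack: List[int] = []
    delegated: List[int] = []
    for op, imm in program:
        if (overridden >> op) & 1:
            delegated.append(op)  # the L2 must prove these steps with its own code
            external[op](stack, imm)
        else:
            BUILTIN[op](stack, imm)
    return stack, delegated


def l2_exp(stack: List[int], _: Optional[int]) -> None:
    """Hypothetical L2 change: redefine MUL as modular exponentiation."""
    exponent = stack.pop()
    base = stack.pop()
    stack.append(pow(base, exponent, WORD))
```

For example, running `[(PUSH1, 3), (PUSH1, 4), (MUL, None)]` with an empty bitfield uses only built-in handlers, while passing `make_bitfield([MUL])` and `{MUL: l2_exp}` delegates just that one opcode to the L2's table.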

Open vs. Closed multi-client systems

The multi-client idea is probably the most controversial requirement on this list. One option is to abandon multi-client and build in a single ZK-SNARK scheme, which would simplify the design. The price is a larger philosophical shift for Ethereum (since it effectively abandons Ethereum's long-standing multi-client ethos) and greater risk. In the longer-term future, for example once formal verification technology improves, it may be better to go this route, but for now it seems too risky.

Another option is a closed multi-client system, in which the protocol knows a fixed set of proof systems. For example, we might decide to use three ZK-EVMs: PSE ZK-EVM, Polygon ZK-EVM, and Kakarot. A block would need proofs from at least two of these three to be valid. This is better than a single proof system, but it makes the system less adaptable: users must maintain verifiers for every proof system in the set, there will inevitably be a governance process for adding new proof systems, and so on.

This leads me to prefer an open multi-client system, where proofs are placed outside the block and verified individually by clients. Individual users will use whatever client they want to validate blocks, and they can do this as long as there is at least one prover creating a proof for that proof system. Proof systems will gain influence by convincing users to run them, not by convincing the protocol governance process. However, this approach does have more complexity costs, as we will see.

What key features do we want in a ZK-EVM implementation?

Besides basic functional correctness and security guarantees, the most important property is speed. Although it is possible to design an asynchronous in-protocol ZK-EVM feature that returns the answer to each claim only after N slots, the problem becomes much easier if we can reliably guarantee that a proof can be generated within a few seconds, so that whatever happens in each block is self-contained.

Although generating a proof for an Ethereum block today takes minutes or hours, we know of no theoretical reason that prevents massive parallelization: we can always gather enough GPUs to prove different parts of a block's execution separately, and then use recursive SNARKs to combine those proofs. Proving can also be accelerated further with hardware such as FPGAs and ASICs. Actually getting there, however, is an engineering challenge that should not be underestimated.
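The shape of "prove chunks in parallel, then combine recursively" can be sketched with a toy model. This is only an illustration under strong assumptions: the "state" is a single integer, each transaction just adds to it, and a "proof" is a hash tag rather than a SNARK. What the sketch does show is the structure: a cheap sequential pass finds each chunk's starting state, the expensive per-chunk work runs in parallel, and each aggregation step checks that adjacent chunks are contiguous (one chunk's post-root equals the next chunk's pre-root).

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import List, Sequence


def root(state: int) -> bytes:
    """Toy 'state root': the hash of a single integer balance."""
    return hashlib.sha256(str(state).encode()).digest()


def _tag(pre: bytes, post: bytes) -> bytes:
    return hashlib.sha256(pre + post).digest()


@dataclass(frozen=True)
class Proof:
    pre_root: bytes
    post_root: bytes
    tag: bytes  # placeholder for a real (recursive) SNARK


def prove_chunk(pre_state: int, txs: Sequence[int]) -> Proof:
    post_state = pre_state + sum(txs)  # toy execution: each tx adds to the balance
    pre, post = root(pre_state), root(post_state)
    return Proof(pre, post, _tag(pre, post))


def aggregate(left: Proof, right: Proof) -> Proof:
    """One recursive step: both children must check out and be contiguous."""
    for p in (left, right):
        if p.tag != _tag(p.pre_root, p.post_root):
            raise ValueError("invalid child proof")
    if left.post_root != right.pre_root:
        raise ValueError("chunks are not contiguous")
    return Proof(left.pre_root, right.post_root, _tag(left.pre_root, right.post_root))


def prove_block(pre_state: int, txs: List[int], chunk_size: int) -> Proof:
    if not txs:
        return prove_chunk(pre_state, [])
    chunks = [txs[i:i + chunk_size] for i in range(0, len(txs), chunk_size)]
    # Cheap sequential pass: each chunk's starting state (its "witness").
    starts, state = [], pre_state
    for chunk in chunks:
        starts.append(state)
        state += sum(chunk)
    # Expensive proving runs in parallel, one worker per chunk ("GPU").
    with ThreadPoolExecutor() as pool:
        proofs = list(pool.map(prove_chunk, starts, chunks))
    # Fold pairwise into a single proof, as recursive SNARKs would.
    while len(proofs) > 1:
        merged = [aggregate(proofs[i], proofs[i + 1]) for i in range(0, len(proofs) - 1, 2)]
        if len(proofs) % 2:
            merged.append(proofs[-1])
        proofs = merged
    return proofs[0]
```

Because each chunk's pre-root is fixed by the sequential pass, the proving work is embarrassingly parallel; only the final folding is logarithmic in the number of chunks.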

What exactly does the ZK-EVM function within the protocol look like?

Similar to EIP-4844 blob transactions, we introduce a new transaction type containing ZK-EVM claims:

```python
class ZKEVMClaimTransaction(Container):
    pre_state_root: Bytes32
    post_state_root: Bytes32
    transaction_and_witness_blob_pointers: List[VersionedHash]
    ...
```

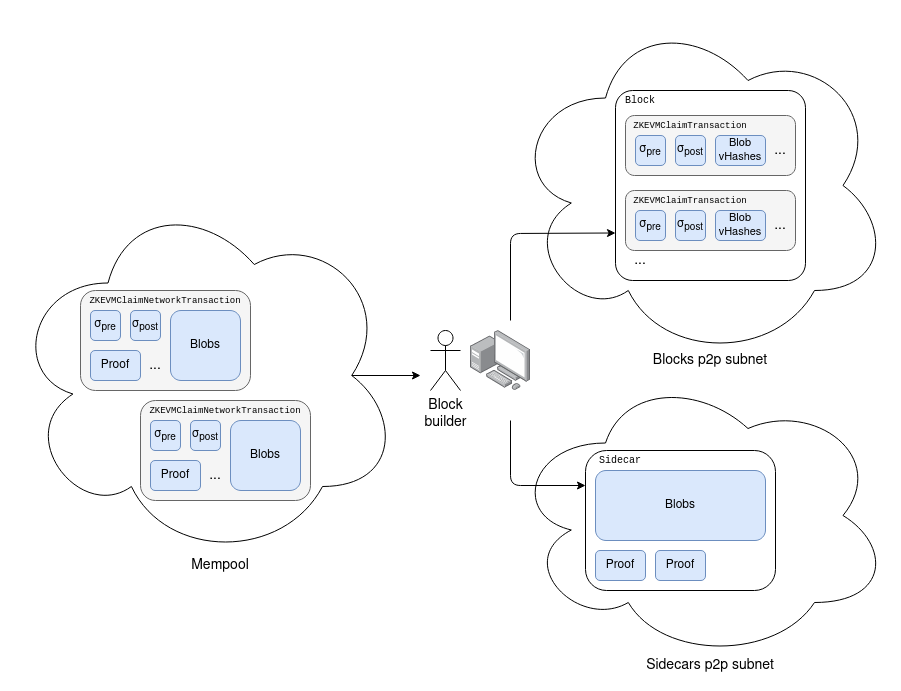

As with EIP-4844, the object passed in the mempool is a modified version of the transaction:

```python
class ZKEvmClaimNetworkTransaction(Container):
    pre_state_root: Bytes32
    post_state_root: Bytes32
    proof: bytes
    transaction_and_witness_blobs: List[Bytes[FIELD_ELEMENTS_PER_BLOB * 31]]
```

The latter can be converted into the former, but not vice versa. We also extend the block sidecar object (introduced in EIP-4844) to include a list of proofs for the claims made in the block.

Note that in practice we might want to split the sidecar into two separate sidecars, one for blobs and one for proofs, and run a separate subnet for each type of proof (plus an additional subnet for blobs).
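Why the conversion only goes one way can be seen in a small sketch: the network form carries full blobs and a proof, and the on-chain form keeps only short pointers derived from the blobs. This uses plain Python dataclasses rather than SSZ containers, and, as a loudly flagged assumption, a SHA-256 hash stands in for the KZG commitment; only the versioned-hash shape (a `0x01` version byte followed by the last 31 bytes of `sha256(commitment)`) follows EIP-4844.

```python
import hashlib
from dataclasses import dataclass
from typing import List

VERSIONED_HASH_VERSION_KZG = b"\x01"  # version byte used by EIP-4844


def toy_commitment(blob: bytes) -> bytes:
    # ASSUMPTION: stand-in for a real 48-byte KZG commitment; a hash is NOT a KZG commitment.
    return hashlib.sha256(b"toy-commitment" + blob).digest()


def versioned_hash(commitment: bytes) -> bytes:
    # EIP-4844 shape: version byte || sha256(commitment)[1:]
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


@dataclass
class ZKEVMClaimTransaction:  # on-chain form: pointers only, no proof
    pre_state_root: bytes
    post_state_root: bytes
    transaction_and_witness_blob_pointers: List[bytes]


@dataclass
class ZKEvmClaimNetworkTransaction:  # mempool form: full blobs plus a proof
    pre_state_root: bytes
    post_state_root: bytes
    proof: bytes
    transaction_and_witness_blobs: List[bytes]


def to_claim_transaction(net_tx: ZKEvmClaimNetworkTransaction) -> ZKEVMClaimTransaction:
    """Drop the proof (it travels in the sidecar) and replace each blob with a pointer."""
    pointers = [versioned_hash(toy_commitment(b)) for b in net_tx.transaction_and_witness_blobs]
    return ZKEVMClaimTransaction(net_tx.pre_state_root, net_tx.post_state_root, pointers)
```

The reverse direction is impossible because the 32-byte pointers cannot be expanded back into the blobs, and the proof is no longer present at all.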

At the consensus layer, we add a validation rule: a client accepts a block only if it sees a valid proof for each claim in the block. The proof must be a ZK-SNARK proving that the concatenation of transaction_and_witness_blobs is the serialization of a (Block, Witness) pair, and that executing the block on top of pre_state_root using the Witness (i) is valid and (ii) outputs the correct post_state_root. Potentially, a client could choose to wait for an M-of-N of multiple types of proofs.
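In an open multi-client system, this rule is applied locally: each client checks the sidecar for proofs of the types it chose to run, optionally requiring M distinct systems. The sketch below is illustrative only. The claim tuple layout, the sidecar shape (claim index mapped to proofs keyed by proof-system name), and the hash-based `toy_proof`/`toy_verifier` stand-ins for real SNARK provers and verifiers are all assumptions.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# (pre_state_root, post_state_root, commitment to transaction_and_witness_blobs)
Claim = Tuple[bytes, bytes, bytes]
Verifier = Callable[[Claim, bytes], bool]


def toy_proof(system: str, claim: Claim) -> bytes:
    # Placeholder for a SNARK: a hash tagged with the proof system's name.
    return hashlib.sha256(system.encode() + b"".join(claim)).digest()


def toy_verifier(system: str) -> Verifier:
    return lambda claim, proof: proof == toy_proof(system, claim)


@dataclass
class ClientPolicy:
    verifiers: Dict[str, Verifier]  # proof systems this user chose to run
    m: int = 1                      # accept a claim once m distinct systems verify it


def accept_block(claims: List[Claim], sidecar: Dict[int, Dict[str, bytes]],
                 policy: ClientPolicy) -> bool:
    for i, claim in enumerate(claims):
        proofs = sidecar.get(i, {})
        verified = sum(1 for system, verify in policy.verifiers.items()
                       if system in proofs and verify(claim, proofs[system]))
        if verified < policy.m:
            return False  # not yet: a prover for our system may still republish
    return True
```

With a sidecar that holds only a PSE proof, a PSE-verifying client accepts the block while a Polygon-verifying client keeps waiting; once a Polygon prover republishes with its own proof, both accept, and a client with `m=2` over both systems accepts as well.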

Note that block execution itself can simply be viewed as another $(\sigma_{pre}, \sigma_{post}, \text{Proof})$ triplet that needs to be checked alongside the triplets provided in ZKEVMClaimTransaction objects.

Therefore, a user's ZK-EVM implementation can replace their execution client; execution clients would still be used by (i) provers and block builders, and (ii) nodes that care about indexing and storing data for local use.

Verification and re-proving

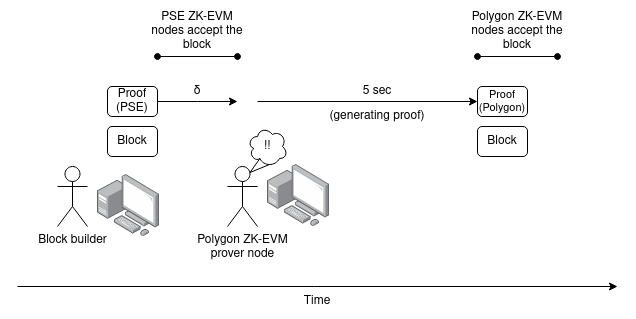

Suppose there are two Ethereum clients, one using PSE ZK-EVM and the other using Polygon ZK-EVM. Assume that at this point, both implementations have evolved to the point where they can prove Ethereum block execution in 5 seconds, and that for each proof system, there are enough independent volunteers running the hardware to generate the proof.

Unfortunately, because individual proof systems are not built in, they cannot be incentivized in-protocol. However, we expect the cost of running provers to be low compared to research and development costs, so we can simply fund provers through a general-purpose institution for public-goods funding.

Suppose someone publishes a ZKEvmClaimNetworkTransaction, but only with a PSE ZK-EVM proof. A Polygon ZK-EVM proving node sees it, computes a Polygon ZK-EVM proof, and republishes the object with that proof attached.

This increases the total maximum latency between the earliest honest node accepting a block and the latest honest node accepting the same block from $\delta$ to $2\delta + T_{prove}$ (assuming $T_{prove} < 5$ s).
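One way to see where the bound comes from is to trace the republishing scenario above step by step. This derivation assumes that $\delta$ is the maximum propagation delay between honest nodes, that the object is first published with only a PSE proof at time $t_0$, and that a Polygon proving node starts proving as soon as it receives it:

```latex
\begin{aligned}
t_{\text{first}} &= t_0 && \text{(a PSE-verifying node accepts on seeing the PSE proof)}\\
t_{\text{prover}} &\le t_0 + \delta && \text{(the object reaches a Polygon proving node)}\\
t_{\text{republish}} &\le t_0 + \delta + T_{prove} && \text{(the Polygon proof is computed)}\\
t_{\text{last}} &\le t_0 + 2\delta + T_{prove} && \text{(the republished object reaches every node)}
\end{aligned}
```

Subtracting the first line from the last gives $t_{\text{last}} - t_{\text{first}} \le 2\delta + T_{prove}$, compared with $\delta$ when every client can verify the original proof directly.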

The good news, however, is that if we adopt single-slot finality (SSF), we can very likely pipeline this extra latency together with the multiple rounds of consensus latency inherent in SSF. For example, in a 4-sub-slot proposal, the head-vote step might require checking only basic block validity, while the freeze and confirm steps would require a proof to exist.

Extension: support for Approximate EVM

A desirable goal for the ZK-EVM feature is to support approximate EVMs: EVMs with some extra functionality built in. This could include new precompiles, new opcodes, the option to write contracts either in the EVM or in a completely different VM (as in Arbitrum Stylus), or even multiple parallel EVMs with synchronous cross-communication.

Some modifications could be supported simply: we could define a language that lets a ZKEVMClaimTransaction pass a full description of the modified EVM rules. This could support:

Custom gas tables (users cannot decrease gas costs, but can increase them)

Disable certain opcodes

Set the block number (this will imply different rules depending on the hard fork)

Set a flag that activates a set of EVM changes that have been standardized for L2 use instead of L1 use, or other simpler changes
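A rule description like the one listed above could be checked with very little logic. The sketch below is hypothetical in its field names and covers only two baseline costs (ADD = 3 gas, MUL = 5 gas) instead of a full gas table; it shows the key constraint from the list, namely that overrides may raise gas costs but never lower them, plus opcode disabling.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet

# Baseline L1 costs for two opcodes only (ADD = 3, MUL = 5); a real table is far larger.
BASE_GAS: Dict[int, int] = {0x01: 3, 0x02: 5}


@dataclass
class ModifiedEvmRules:
    gas_overrides: Dict[int, int] = field(default_factory=dict)
    disabled_opcodes: FrozenSet[int] = frozenset()
    block_number: int = 0                   # selects which hard fork's rules apply
    l2_flags: FrozenSet[str] = frozenset()  # hypothetical standardized L2 rule sets

    def validate(self) -> None:
        """Reject any description that tries to make an opcode cheaper than on L1."""
        for op, cost in self.gas_overrides.items():
            if op not in BASE_GAS:
                raise ValueError(f"unknown opcode {op:#04x}")
            if cost < BASE_GAS[op]:
                raise ValueError(f"gas for {op:#04x} may only be increased")

    def gas_cost(self, op: int) -> int:
        if op in self.disabled_opcodes:
            raise ValueError(f"opcode {op:#04x} is disabled")
        return self.gas_overrides.get(op, BASE_GAS[op])
```

Allowing only increases keeps the built-in ZK-EVM's worst-case proving cost per unit of gas no worse than on L1, which is one plausible reason for the asymmetry.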

To let users add new features more openly, such as new precompiles (or opcodes), we could add a precompile input/output transcript to the blob contents of a ZKEVMClaimNetworkTransaction:

```python
class PrecompileInputOutputTranscript(Container):
    used_precompile_addresses: List[Address]
    inputs_commitments: List[VersionedHash]
    outputs: List[Bytes]
```

EVM execution would be modified as follows. Initialize an empty inputs array. Whenever the i-th call to an address in used_precompile_addresses is made, append an InputsRecord(callee_address, gas, input_calldata) object to inputs and set the call's RETURNDATA to outputs[i]. Finally, check that addresses in used_precompile_addresses were called len(outputs) times in total, and that inputs_commitments matches the blob commitments generated from the SSZ serialization of inputs. The purpose of exposing inputs_commitments is to make it easy for an external SNARK to prove the relationship between inputs and outputs.
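The transcript-driven execution can be sketched in a few lines. This is a simplification with labeled assumptions: a single commitment replaces the list of `inputs_commitments`, and JSON plus SHA-256 stands in for SSZ serialization and blob (KZG) commitments. Calls to a listed address record their inputs and return the next supplied output; at the end, the call count and the input commitment are checked.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class InputsRecord:
    callee_address: str
    gas: int
    input_calldata: str  # hex-encoded


def commit(inputs: List[InputsRecord]) -> str:
    # ASSUMPTION: JSON + SHA-256 in place of SSZ serialization + blob commitments.
    data = json.dumps([asdict(r) for r in inputs], sort_keys=True).encode()
    return hashlib.sha256(data).hexdigest()


class TranscriptExecutor:
    def __init__(self, used_precompile_addresses: List[str],
                 inputs_commitment: str, outputs: List[bytes]) -> None:
        self.used = set(used_precompile_addresses)
        self.expected_commitment = inputs_commitment
        self.outputs = outputs
        self.inputs: List[InputsRecord] = []

    def call(self, address: str, gas: int, calldata: bytes) -> bytes:
        if address not in self.used:
            raise NotImplementedError("ordinary EVM calls are not modeled here")
        i = len(self.inputs)
        if i >= len(self.outputs):
            raise ValueError("more precompile calls than supplied outputs")
        self.inputs.append(InputsRecord(address, gas, calldata.hex()))
        return self.outputs[i]  # becomes the call's RETURNDATA

    def finalize(self) -> bool:
        # The verifier only recomputes a hash over inputs it generated itself.
        return (len(self.inputs) == len(self.outputs)
                and commit(self.inputs) == self.expected_commitment)
```

The verifying side never needs the inputs handed to it: EVM execution regenerates them, so comparing one hash suffices, whereas the outputs must be supplied in full.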

Note the asymmetry between inputs and outputs: inputs are stored as hashes, while outputs are stored as bytes that must be provided in full. This is because execution must be done by a client that sees only the inputs and understands the EVM. EVM execution already generates the inputs for that client, so it only needs to check that the generated inputs match the claimed inputs, which requires only a hash check. The outputs, however, must be provided to it in their entirety, so they must be available data.

Another useful feature might be to allow privileged transactions, which can make calls from any sender account. These could run between two other transactions, or during another (possibly also privileged) transaction while a precompile is being called. This could be used to let non-EVM mechanisms call back into the EVM.

This design could be modified to support new or modified opcodes in addition to new or modified precompiles. Even with only precompiles, the design is quite powerful. For example:

By setting used_precompile_addresses to include a list of regular account addresses that have a certain flag set in the state, and producing a SNARK proving that the list was constructed correctly, you could support Arbitrum Stylus-style functionality, where contracts can be written in either EVM or WASM (or another VM). Privileged transactions could be used to let WASM accounts call back into the EVM.

You could prove a parallel system of multiple EVMs communicating through synchronous channels by adding an external check that the input/output transcripts and privileged transactions executed by the different EVMs match up in the correct way.

A type 4 ZK-EVM could operate with two implementations: one that compiles Solidity or another high-level language directly into a SNARK-friendly VM, and another that compiles it to EVM code and executes it in the built-in ZK-EVM. The second (inevitably slower) implementation would run only if a fault prover sends a transaction asserting a bug, and the fault prover would collect a bounty if they can provide a transaction that the two implementations handle differently.

A purely async VM can be implemented by making all calls return zero and mapping the calls to privileged transactions added to the end of the block.
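The purely asynchronous variant can be sketched as a call adapter: every call returns a zero word immediately, and the real call is queued as a privileged transaction appended to the end of the block. The class and field names here are hypothetical, and execution of the queued calls is not modeled.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class UserTx:
    sender: str
    target: str
    calldata: bytes


@dataclass(frozen=True)
class PrivilegedTx:
    sender: str  # privileged: may act on behalf of any account
    target: str
    calldata: bytes


class AsyncCallAdapter:
    """Every call returns zero immediately; the real call is deferred to block end."""

    def __init__(self) -> None:
        self.deferred: List[PrivilegedTx] = []

    def call(self, sender: str, target: str, calldata: bytes) -> bytes:
        self.deferred.append(PrivilegedTx(sender, target, calldata))
        return bytes(32)  # a zero 32-byte word as RETURNDATA

    def seal_block(self, txs: List[UserTx]) -> List[Union[UserTx, PrivilegedTx]]:
        """Append all deferred calls, in call order, after the block's own transactions."""
        ordered: List[Union[UserTx, PrivilegedTx]] = list(txs) + self.deferred
        self.deferred = []
        return ordered
```

Because callers never see a real return value, contracts on such a VM must be written so that results arrive through a later callback rather than through RETURNDATA.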

Extension: support for stateful provers

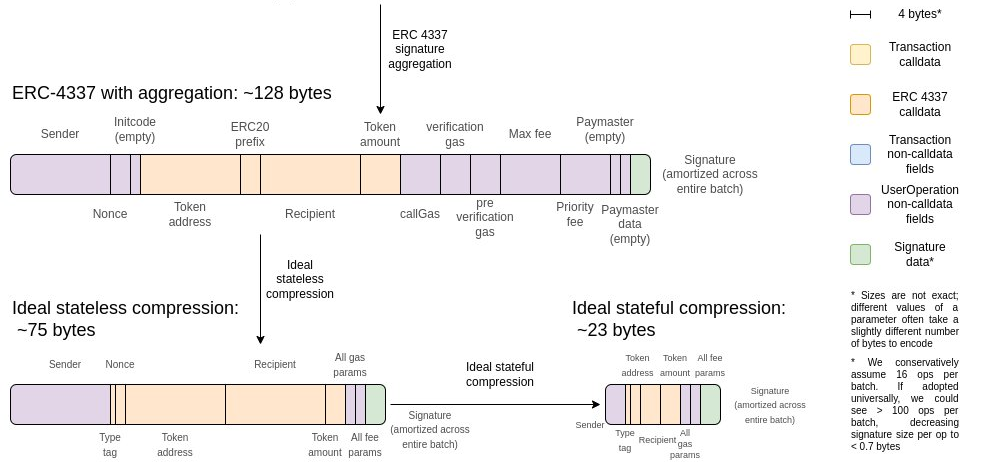

One challenge with the design above is that it is completely stateless, which makes it data-inefficient. With ideal data compression, an ERC-20 transfer can be up to 3x more space-efficient with stateful compression than with stateless compression alone.

Stateful EVMs also do not need witness data to be provided. In both cases the principle is the same: it is wasteful to require data to be available when we already know it is available because it was an input to, or was produced by, a previous EVM execution.

If we want the ZK-EVM feature to be stateful, we have two options:

1) Require $\sigma_{pre}$ to be either empty, an available list of declared keys and values, or the $\sigma_{post}$ of some previous execution.

2) Add a blob commitment to a receipt $R$ generated by the block to the $(\sigma_{pre}, \sigma_{post}, \text{Proof})$ triplet. Any previously generated or consumed blob commitment, including those representing blocks, witnesses, receipts, or even plain EIP-4844 blob transactions, possibly subject to some time limit, could be referenced in a ZKEVMClaimTransaction and accessed during its execution (possibly through a series of instructions of the form "insert bytes N...N+k-1 of commitment i at position j of the block + witness data").
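The instruction format in option two can be made concrete with a small reconstruction routine. This is a sketch under assumptions: `referenceable_blobs` is a hypothetical list of blob contents already filtered to the allowed time window, indexed by commitment, and instructions are assumed not to overlap. Each instruction inserts bytes N...N+k-1 of blob i at position j of the final block + witness data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CopyInstruction:
    commitment_index: int  # i: which earlier blob (block, witness, receipt, plain blob)
    start: int             # N: first byte to copy
    length: int            # k: copies bytes N..N+k-1
    dest: int              # j: insertion position in the final block + witness data


def expand(fresh: bytes, instructions: List[CopyInstruction],
           referenceable_blobs: List[bytes]) -> bytes:
    """Rebuild block + witness data from fresh bytes plus copies of earlier blobs."""
    out = bytearray(fresh)
    # Ascending destination order, so each `dest` refers to the final layout.
    for ins in sorted(instructions, key=lambda x: x.dest):
        blob = referenceable_blobs[ins.commitment_index]
        if ins.start < 0 or ins.start + ins.length > len(blob):
            raise ValueError("copy range outside the referenced blob")
        if ins.dest > len(out):
            raise ValueError("insertion position past end of data")
        out[ins.dest:ins.dest] = blob[ins.start:ins.start + ins.length]
    return bytes(out)
```

For instance, with earlier blobs `b"hello world"` and `b"ERC20 transfer"`, the fresh bytes `b"[]"` plus two instructions can expand to `b"[ERC20]world"`, so only the two fresh bytes and the instructions need to be newly published.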

Option one means that instead of building in stateless EVM verification, we build in EVM sub-chains. Option two essentially creates a minimal built-in stateful compression algorithm that uses previously used or generated blobs as a dictionary. Both place a burden on prover nodes, and only on prover nodes, to store more information; in option two, it is easier to make that burden time-bounded than in option one.
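The "earlier blobs as a dictionary" idea can be demonstrated with an off-the-shelf preset-dictionary compressor. This is not the mechanism the protocol would use: zlib's `zdict` parameter is swapped in here as a familiar example of dictionary-based compression, and the recipient address is a deterministic placeholder. An ERC-20 `transfer` whose recipient already appeared in an earlier blob compresses much better when that blob primes the compressor.

```python
import hashlib
import zlib


def compress(data: bytes, dictionary: bytes = b"") -> bytes:
    """DEFLATE-compress data, optionally priming the window with a preset dictionary."""
    c = zlib.compressobj(9, zdict=dictionary) if dictionary else zlib.compressobj(9)
    return c.compress(data) + c.flush()


def decompress(blob: bytes, dictionary: bytes = b"") -> bytes:
    d = zlib.decompressobj(zdict=dictionary) if dictionary else zlib.decompressobj()
    return d.decompress(blob) + d.flush()


def erc20_transfer(recipient: bytes, amount: int) -> bytes:
    # ABI-encoded transfer(address,uint256): 4-byte selector + two 32-byte words.
    return bytes.fromhex("a9059cbb") + recipient.rjust(32, b"\x00") + amount.to_bytes(32, "big")


# Placeholder 20-byte recipient, derived deterministically for the demo.
recipient = hashlib.sha256(b"recipient").digest()[:20]
earlier_blob = erc20_transfer(recipient, 1_000)  # data already available on-chain
new_tx = erc20_transfer(recipient, 2_500)

stateless = compress(new_tx)
stateful = compress(new_tx, dictionary=earlier_blob)
```

Without the dictionary, the 20 pseudo-random address bytes cannot be compressed; with it, almost the whole transaction becomes a single back-reference into data that is already known to be available.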

The case for closed multi-prover systems and off-chain data

A closed multi-prover system, in which a fixed set of provers is used in an M-of-N structure, avoids many of the complexities above. In particular, a closed multi-prover system does not need to worry about ensuring that data is on-chain. Furthermore, a closed multi-prover system would allow ZK-EVM proofs to cover executions whose data stays off-chain, making it compatible with EVM Plasma solutions.

However, a closed multi-prover system increases governance complexity and removes auditability, which are high costs that need to be weighed against these benefits.

If we build in a ZK-EVM as a protocol feature, what role remains for Layer 2 projects?

EVM verification, which Layer 2 teams currently implement themselves, would be handled by the protocol, but Layer 2 projects would still be responsible for many important functions:

Fast pre-confirmations: single-slot finality will likely make Layer 1 slots slower, while Layer 2 projects already give their users pre-confirmations, backed by the Layer 2's own security, with latency far lower than one slot. This service will continue to be handled entirely by Layer 2s.

MEV (maximal extractable value) mitigation strategies: this may include encrypted mempools, reputation-based sequencer selection, and other features that Layer 1 is unwilling to implement.

Extensions to the EVM: Layer 2 projects can provide their users with significant extensions to the EVM. This includes approximate EVM and fundamentally different approaches like Arbitrum Stylus WASM support and the SNARK-friendly Cairo language.

Convenience for users and developers: Layer 2 teams do a lot of work to attract users and projects to their ecosystems and make them feel welcome; they are compensated by capturing MEV and congestion fees within their networks. This relationship will continue to exist.