Since the introduction of ChatGPT and GPT-4, much has been written about how AI can revolutionize everything, including Web 3. Developers across multiple industries report productivity gains ranging from 50% to 500% (the figure varies) from using ChatGPT as a copilot to automate tasks such as generating boilerplate code, writing unit tests, creating documentation, debugging, and detecting bugs. While this article will explore how AI can enable new and interesting Web 3 use cases, its main focus is on the mutually beneficial relationship between Web 3 and AI. Very few technologies can significantly influence the direction of artificial intelligence, and Web 3 is one of them.

How does Web3 facilitate artificial intelligence?

Despite their enormous potential, current AI models face several challenges, such as data privacy, fairness in the execution of proprietary models, and the ability to create and disseminate credible fake content. Some existing Web 3 technologies are uniquely positioned to address these challenges.

01 Create proprietary datasets for machine learning (ML) training

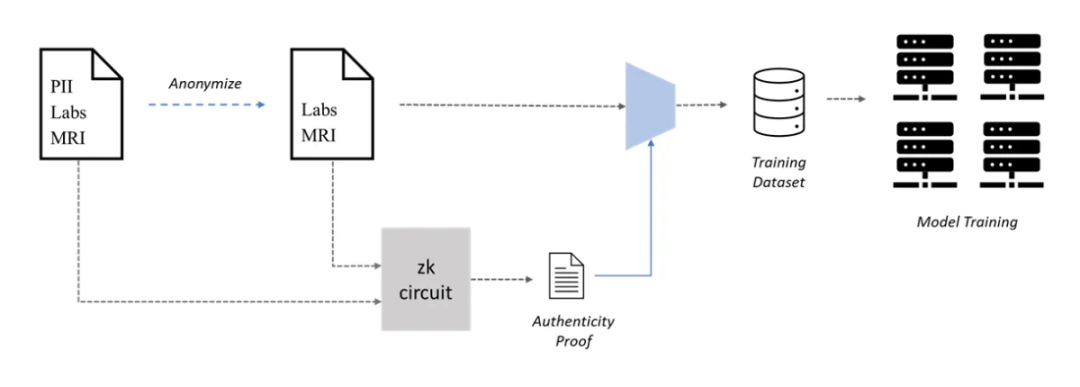

One area where Web 3 can help AI is the collaborative creation of proprietary datasets for machine learning (ML) training, i.e. using proof-of-physical-work (PoPW) networks for dataset creation. Large-scale datasets are critical for accurate ML models, but their creation can become a bottleneck in use cases that require private data, such as medical diagnosis using ML. Access to medical records is necessary to train these models, but patients may be reluctant to share their records due to privacy concerns. To address this, patients can verifiably anonymize their medical records, preserving their privacy while still making the records available for ML training.

However, the authenticity of anonymized medical records is a problem, as fake data can seriously affect the performance of the model. To address this issue, zero-knowledge proofs (ZKPs) can be used to verify the authenticity of anonymized medical records. Patients can generate ZKPs to prove that anonymous records are indeed copies of the original records, even after removing personally identifiable information (PII). In this way, patients can make anonymous records available with ZKPs to interested parties and even receive rewards for their contributions without sacrificing their privacy.

02 Run private data inference

A major weakness of current LLMs is the handling of private data. For example, when a user interacts with ChatGPT, OpenAI collects the user's private data and uses it for model training, which can lead to the disclosure of sensitive information, as happened in the case of Samsung. Zero-knowledge (zk) techniques can help solve some of the problems that arise when ML models run inference on private data. Here, we consider two cases: open-source models and proprietary models.

For open-source models, users can download the model and run it locally on their private data. For example, Worldcoin plans to upgrade World ID. In this use case, Worldcoin needs to process the user's private biometric data, i.e. the user's iris scan, to create a unique identifier for each user called the IrisCode. Here, users can keep their biometric data private on their device, download the ML model used for IrisCode generation, run inference locally, and create a proof that their IrisCode was generated correctly. The generated proof guarantees the correctness of the inference while maintaining the privacy of the data. An efficient zk proof mechanism for ML models, like the one developed by Modulus Labs, is crucial for this use case.

Another case is when the ML model used for inference is proprietary. This task is somewhat difficult, since local inference is not an option. However, there are two possible ways ZKPs can help. The first approach is to anonymize user data using a ZKP, as discussed in the previous dataset creation example, and then send the anonymized data to the ML model. Another approach is to use a local preprocessing step on the private data before sending the preprocessed output to the ML model. In this case, the preprocessing step hides the user's private data so that it cannot be reconstructed. The user generates a ZKP indicating the correct execution of the preprocessing steps, and then the rest of the proprietary model can be executed remotely on the model owner's server. Example use cases here might include an AI doctor who can analyze a patient's medical records for a potential diagnosis, and a financial risk assessment algorithm that evaluates a client's private financial information.

03 Authenticity of content and combating deepfake technology

ChatGPT may have stolen the limelight from generative AI models that focus on generating images, audio, and video. However, these models are already capable of generating realistic deepfakes. The recent AI-generated Drake song is an example of what these models can achieve. Because humans tend to believe what they see and hear, these deepfakes represent a major threat. Many startups are trying to solve this problem using Web 2 technologies. However, Web 3 technologies, such as digital signatures, are better suited to solve this problem.

In Web 3, user interactions, i.e. transactions, are signed with the user's private key to prove their validity. Likewise, any content, whether text, pictures, audio, or video, can be signed with the creator's private key to prove its authenticity. Anyone can verify the signature against the creator's public address, which is published on the creator's website or social media account. The Web 3 ecosystem has already built all the infrastructure required to enable this use case. Fred Wilson discusses how linking content to public cryptographic keys can effectively combat misinformation. Many reputable venture capital firms have linked their existing social media profiles, such as Twitter, or their profiles on decentralized social media platforms, such as Lens Protocol and Mirror, to a public crypto address, which lends credibility to digital signatures as a content authentication method.
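The sign-then-verify flow described above can be sketched in a few lines. This is a minimal illustration, not how any particular wallet works: Web 3 wallets typically use elliptic-curve signatures, while this sketch swaps in a Lamport one-time signature so that it needs only the Python standard library. The function names are assumptions made for the example.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _digest_bits(content: bytes) -> list:
    """The 256 bits of SHA-256(content), most significant bit first."""
    digest = _h(content)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def generate_keypair():
    """Lamport keypair: 256 pairs of random secrets (private) and their hashes (public)."""
    private_key = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public_key = [(_h(zero), _h(one)) for zero, one in private_key]
    return private_key, public_key


def sign(private_key, content: bytes) -> list:
    """Reveal one secret per bit of the content hash. A Lamport key must be used only once."""
    return [private_key[i][bit] for i, bit in enumerate(_digest_bits(content))]


def verify(public_key, content: bytes, signature) -> bool:
    """Check that every revealed secret hashes to the published value for that bit."""
    if len(signature) != 256:
        return False
    bits = _digest_bits(content)
    return all(_h(signature[i]) == public_key[i][bits[i]] for i in range(256))


# The creator publishes public_key (for example on a profile page) and signs a post.
private_key, public_key = generate_keypair()
post = b"Original post by the creator"
signature = sign(private_key, post)

print(verify(public_key, post, signature))              # True
print(verify(public_key, b"Tampered post", signature))  # False
```

In practice the public key would be tied to the creator's public address, and a reusable signature scheme would replace the one-time keys used here.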

Despite the simplicity of the concept, a lot of work is still needed to improve the user experience of this authentication process. For example, digital signing at content creation needs to be automated to provide a seamless process for creators. Another challenge is how to produce subsets of signed data, such as audio or video clips, without re-signing. Many existing Web 3 technologies are uniquely positioned to address these issues.
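One possible approach to the clip problem, offered here as an assumption rather than something the article prescribes, is a Merkle tree: the creator splits the content into chunks, signs only the Merkle root, and anyone holding a single chunk can prove it belongs to the signed work using a short list of sibling hashes. The sketch below covers the tree and the membership proof only; signing the root is left out, and all names are illustrative.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _leaf(chunk: bytes) -> bytes:
    return _h(b"\x00" + chunk)          # prefix keeps leaves distinct from inner nodes


def _node(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def _next_level(level: list) -> list:
    return [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(chunks: list) -> bytes:
    """Root hash over all chunks; this is the only value the creator needs to sign."""
    level = [_leaf(c) for c in chunks]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])     # duplicate the last node on odd-sized levels
        level = _next_level(level)
    return level[0]


def merkle_proof(chunks: list, index: int) -> list:
    """Sibling hashes from the chosen chunk up to the root, as (hash, sibling_is_left)."""
    level = [_leaf(c) for c in chunks]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_chunk(chunk: bytes, proof: list, root: bytes) -> bool:
    """Recompute the root from one chunk and its proof, then compare with the signed root."""
    current = _leaf(chunk)
    for sibling, sibling_is_left in proof:
        current = _node(sibling, current) if sibling_is_left else _node(current, sibling)
    return current == root


# A video split into five segments; a viewer checks segment 2 against the signed root.
segments = [b"seg-0", b"seg-1", b"seg-2", b"seg-3", b"seg-4"]
root = merkle_root(segments)
proof = merkle_proof(segments, 2)
print(verify_chunk(b"seg-2", proof, root))   # True
print(verify_chunk(b"forged", proof, root))  # False
```

The proof grows with the logarithm of the number of chunks, so even a long video needs only a handful of hashes per clip.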

04 Minimize trust in proprietary models

Another area where Web 3 can help AI is in minimizing trust in the service provider when proprietary machine learning models are offered as a service. Users may need to verify that the service they paid for was actually provided, or to obtain assurance that the machine learning model is applied fairly, i.e. that the same model is used for all users. Zero-knowledge proofs can be used to provide such guarantees. In this architecture, the creator of a machine learning model generates a zero-knowledge circuit that represents the model. When needed, this circuit is used to generate zero-knowledge proofs for user inferences. The proofs can be sent to users for verification, or they can be published on a public chain that handles verification on the users' behalf. If the machine learning model is private, an independent third party can verify that the zk circuit used is representative of the model. This trust minimization is particularly useful when the model's output is high-stakes. For example:

Machine Learning Models for Medical Diagnosis

In this use case, patients submit their medical data for a machine learning model to make a potential diagnosis. Patients need to ensure that the target machine learning model has been correctly applied to their data. The inference process generates zero-knowledge proofs that prove that the machine learning model performed correctly.

Credit value assessment of loans

Zero-knowledge proofs can ensure that banks and financial institutions consider all financial information submitted by applicants when assessing creditworthiness. In addition, zero-knowledge proofs can prove fairness, that is, prove that the same model is applied to all users.

Insurance Claims Processing

Current insurance claims processing is manual and subjective. Machine learning models can evaluate claims more impartially based on the insurance policy and the claim details. Combined with zero-knowledge proofs, these claims-processing models can be proven to have considered all policy and claim details, and all claims under the same insurance policy can be proven to have been processed using the same model.

05 Solve the centralization problem of model creation

Creating and training an LLM is a time-consuming and expensive process that requires specific domain expertise, dedicated computing infrastructure, and millions of dollars in computational costs. These characteristics could lead to powerful central entities (such as OpenAI) that can exercise significant influence over their users by restricting access to their models.

Given these centralization risks, important discussions are taking place about how Web 3 can facilitate the decentralization of different aspects of LLMs. Some Web 3 proponents have proposed decentralized computing as a way to compete with centralized players. The basic idea is that decentralized computing can be a cheaper alternative. However, we believe this may not be the best angle from which to compete with centralized players. The downside of decentralized computing is that ML training can be 10-100x slower due to the communication overhead between heterogeneous computing devices.

As an alternative, Web 3 projects can focus on creating unique and competitive ML models in a PoPW fashion. These PoPW networks can also collect data to build unique datasets to train these models. Some projects that are moving in this direction include Together and Bittensor.

06 Payment and Execution Channels for AI Agents

Over the past few weeks, AI agents that use LLMs to reason about the tasks needed to accomplish a goal, and then execute those tasks, have been on the rise. The wave of AI agents started with the idea of BabyAGI and quickly spread to more advanced versions, including AutoGPT. An important prediction here is that AI agents will become more specialized to excel at certain tasks. If there is a market for specialized AI agents, then AI agents can search for, hire, and pay other AI agents to perform specific tasks that contribute to completing the main project. Web 3 networks provide the ideal environment for this. For payments, AI agents can be equipped with cryptocurrency wallets for receiving payments and paying other AI agents. Additionally, AI agents can plug into crypto networks to rent resources permissionlessly. For example, if an AI agent needs to store data, it can create a Filecoin wallet and pay for decentralized storage on IPFS. An AI agent can also rent computing resources from a decentralized computing network such as Akash to perform certain tasks, or even to scale its own execution.
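The search, hire, and pay loop can be illustrated with a toy in-memory model. Everything here is hypothetical: `Agent`, `Marketplace`, and the integer balances stand in for on-chain wallets and a discovery service, and no real network or token is involved.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    skill: str
    price: int        # fee per task, in hypothetical token units
    balance: int = 0  # stand-in for an on-chain wallet balance


class Marketplace:
    """Toy registry where agents advertise a skill and a price."""

    def __init__(self):
        self.agents = []

    def register(self, agent: Agent) -> None:
        self.agents.append(agent)

    def find(self, skill: str, budget: int):
        """Cheapest registered agent offering the skill within budget, or None."""
        candidates = [a for a in self.agents if a.skill == skill and a.price <= budget]
        return min(candidates, key=lambda a: a.price, default=None)

    def hire_and_pay(self, client: Agent, skill: str, budget: int) -> Agent:
        """Pick a worker and move the fee from the client's balance to the worker's."""
        worker = self.find(skill, budget)
        if worker is None:
            raise LookupError(f"no agent offers {skill!r} within budget {budget}")
        if client.balance < worker.price:
            raise ValueError("client has insufficient funds")
        client.balance -= worker.price
        worker.balance += worker.price
        return worker


market = Marketplace()
market.register(Agent("translator-a", "translate", price=5))
market.register(Agent("translator-b", "translate", price=3))

planner = Agent("planner", "plan", price=0, balance=10)
worker = market.hire_and_pay(planner, "translate", budget=4)
print(worker.name, planner.balance, worker.balance)  # translator-b 7 3
```

On a real network the balance update would be a signed token transfer, and payment would more likely be held in escrow until the task is delivered.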

07 Protection from AI privacy violations

Given the large amount of data required to train well-performing ML models, it is safe to assume that any public data will be used in ML models to predict individual behavior. Additionally, banks and financial institutions can build their own ML models that are trained on users' financial information and are able to predict users' future financial behavior. This could be a major invasion of privacy. The only mitigation for this threat is making financial transactions private by default. This privacy can be achieved by using private payment blockchains like Zcash or Aztec and private DeFi protocols like Penumbra and Aleo.

AI-powered Web3 Application Cases

01 On-Chain Games

Generate bots for non-programmer players

On-chain games like Dark Forest create a unique paradigm where players gain an advantage by developing and deploying bots that perform desired game tasks. This paradigm shift may exclude players who cannot code. However, LLMs can change this. An LLM can be fine-tuned to understand on-chain game logic and allow players to create bots that reflect their strategies without writing any code. Projects like Primodium and AI Arena are working on engaging both AI and human players in their games.

Robot Fighting, Gambling and Betting

Another possibility for on-chain gaming is fully autonomous AI players. In this case, the player is an AI agent, such as AutoGPT, that uses an LLM as a backend and has access to external resources such as the internet and potentially initial cryptocurrency funding. These AI players can compete and wager against one another, much like robot wars. This can open up a market for speculation and betting on the outcomes of these matches.

Create realistic NPC environments for on-chain games

Current games pay little attention to non-player characters (NPCs). NPCs have limited actions and little impact on game progress. Given the synergy of AI and Web3, it is possible to create more engaging AI-controlled NPCs that break predictability and make games more interesting. The underlying challenge here is how to introduce meaningful NPC dynamics while minimizing the transaction throughput (TPS) these activities consume. Excessive NPC activity can demand enough TPS to congest the network, creating a bad user experience for actual players.

02 Decentralized social media

One of the current challenges facing decentralized social (DeSo) platforms is that they do not offer a unique user experience compared to existing centralized platforms. Embracing seamless integration with AI can deliver a unique experience that Web2 alternatives lack. For example, AI-managed accounts can help attract new users to the network by sharing relevant content, commenting on posts, and participating in discussions. AI accounts can also be used for news aggregation, summarizing the latest trends that match user interests. [ 18 ]

03 Security and Economic Design Testing of Decentralized Protocols

The trend toward LLM-based AI agents that can define goals, write code, and execute that code creates opportunities to test the security and economic soundness of decentralized networks. In this case, the AI agent is instructed to attack the security or economic balance of the protocol. AI agents can first review protocol documentation and smart contracts to identify weaknesses. They can then independently compete to execute attacks on the protocol, each trying to maximize its own gains. This approach simulates the real environment the protocol will face after launch. Based on these test results, protocol designers can revise the protocol design and patch weaknesses. To date, only specialized companies such as Gauntlet have the technical skill set required to provide such services for decentralized protocols. However, we anticipate that LLMs trained on Solidity, DeFi mechanisms, and previously developed mechanisms can provide similar capabilities.

04 LLM for data indexing and indicator extraction

Even though blockchain data is public, indexing that data and extracting useful insights has been an ongoing challenge. Some players in this space (such as CoinMetrics) specialize in indexing data and building complex metrics for sale, while others (such as Dune) focus on indexing the main components of raw transactions and crowdsourcing metric extraction through community contributions. Recent LLM advances suggest that data indexing and metric extraction could be disrupted. Dune has recognized this threat and announced an LLM roadmap that includes SQL query explanation and the potential for NLP-based queries. However, we predict that the impact of LLMs will go deeper than this. One possibility is LLM-based indexing, where the LLM interacts directly with blockchain nodes to index data for specific metrics. Startups like Dune Ninja are already exploring innovative LLM applications for data indexing.

05 Introducing new ecosystem developers

Different blockchains compete to attract developers to build applications in their ecosystems. Web 3 developer activity is an important indicator of an ecosystem's success. The main difficulty developers face is getting support when they start learning about and building in a new ecosystem. Ecosystems have invested millions of dollars in dedicated developer relations teams to support developers exploring them. In this regard, emerging LLMs have shown impressive results in explaining complex code, catching errors, and even creating documentation. Fine-tuned LLMs can complement human expertise, significantly increasing the productivity of developer relations teams. For example, LLMs can be used to create documentation and tutorials, answer frequently asked questions, and even support hackathon developers with template code or unit tests.

06 Improve the DeFi protocol

By integrating artificial intelligence into the logic of DeFi protocols, the performance of many DeFi protocols can be significantly improved. To date, the main bottleneck in integrating AI into DeFi has been the prohibitive cost of implementing AI on-chain. AI models can be implemented off-chain, but previously there was no way to verify model execution. However, verification of off-chain execution is becoming possible through projects such as Modulus and ChainML. These projects allow ML models to be executed off-chain while limiting on-chain costs. In the case of Modulus, on-chain fees are limited to verifying the model's ZKP. In the case of ChainML, the on-chain cost is an oracle fee paid to the decentralized AI execution network.

Some DeFi use cases that could benefit from AI integration:

AMM liquidity provision, for example updating the range of a Uniswap V3 liquidity position.

Liquidation protection for debt positions using on-chain and off-chain data.

Complex DeFi structured products where the vault mechanism is defined by a financial AI model rather than a fixed strategy. These strategies can include AI-managed trades, lending or options.
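As a concrete illustration of the first use case, the sketch below converts a price band into a Uniswap V3 tick range. It assumes an off-chain model has already produced the band (`predicted_low` and `predicted_high` are hypothetical inputs) and relies only on the Uniswap V3 convention that the price at tick `i` is `1.0001 ** i`. Token decimals and the protocol's minimum and maximum ticks are ignored for brevity, and the default tick spacing of 60 corresponds to the 0.3% fee tier.

```python
import math

TICK_BASE = 1.0001  # Uniswap V3: price at tick i equals 1.0001 ** i


def _real_tick(price: float) -> float:
    """Real-valued tick for a price, before rounding to an integer."""
    return math.log(price) / math.log(TICK_BASE)


def tick_to_price(tick: int) -> float:
    return TICK_BASE ** tick


def liquidity_range(predicted_low: float, predicted_high: float, tick_spacing: int = 60):
    """Snap a price band outward to the nearest ticks usable for a position."""
    if not 0 < predicted_low < predicted_high:
        raise ValueError("expected 0 < predicted_low < predicted_high")
    # Round the lower bound down and the upper bound up so the band is fully covered.
    lower = math.floor(_real_tick(predicted_low) / tick_spacing) * tick_spacing
    upper = math.ceil(_real_tick(predicted_high) / tick_spacing) * tick_spacing
    if upper <= lower:
        upper = lower + tick_spacing
    return lower, upper


# Hypothetical model output: the price is expected to stay between 1900 and 2100.
lower, upper = liquidity_range(1900.0, 2100.0)
print(lower, upper)
print(tick_to_price(lower), tick_to_price(upper))
```

A model-managed position would recompute this range whenever the prediction changes and rebalance only when the new range differs enough to justify the transaction cost.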

Conclusion

We believe that Web3 and AI are culturally and technologically compatible. Unlike Web2, which tends to repel bots, Web3 allows AI to flourish thanks to its permissionless programmability. More broadly, if you think of a blockchain as a network, then we expect AI to dominate the edge of the network. This applies to a variety of consumer applications, from social media to gaming. So far, the edges of Web 3 networks have been largely human. Humans initiate and sign transactions or deploy bots with fixed strategies. Over time, we will see more and more AI agents at the edge of the network. AI agents will interact with humans and with each other through smart contracts. These interactions will enable novel consumer experiences.