Summary

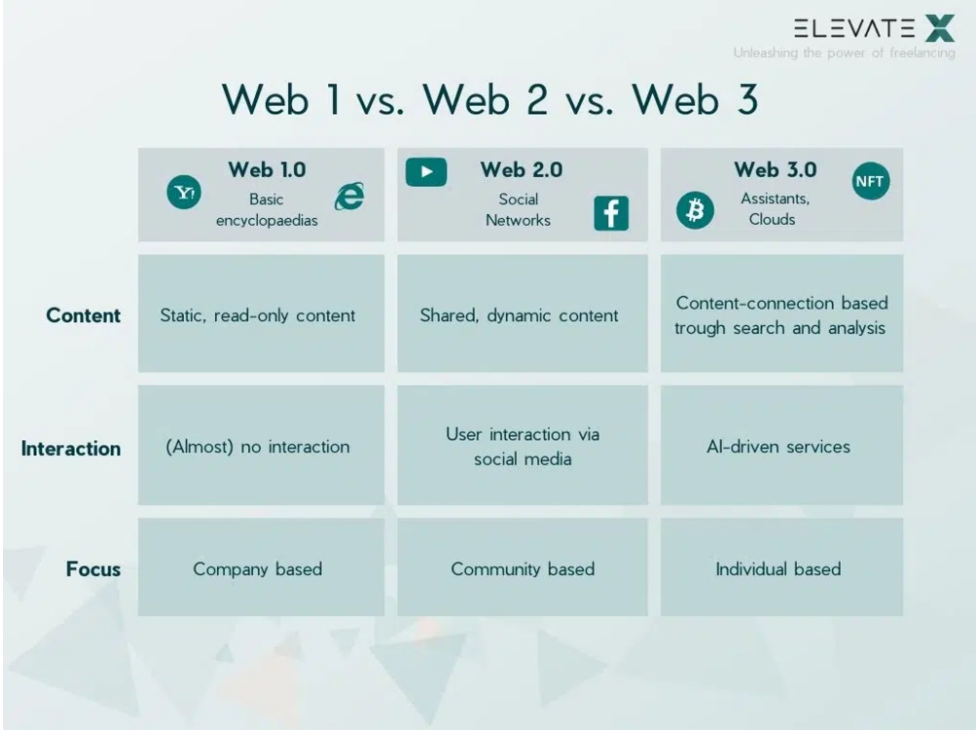

AIGC is a productivity tool for the Web3.0 era. AIGC supplies the productive capacity, while Web3.0 and blockchain applications determine production relations and user sovereignty.

But we must recognize that AIGC and Web3 are two different directions. As a production tool built on AI technology, AIGC can be applied in both the Web2 world and the Web3 world, and most projects developed so far are still Web2 projects, so it is inappropriate to treat the two as a single topic. Web3, for its part, aims to use blockchain and smart contract technology to give users sovereignty over their virtual assets; it has no direct connection to how content is created.

This article examines the development and current state of AIGC from the following four aspects:

The Evolution of Content Creation Forms

Technical Overview

Industry Applications of AIGC

AIGC and Web3

Part I: The Evolution of Content Creation Forms

The evolution of content creation can be divided into three stages:

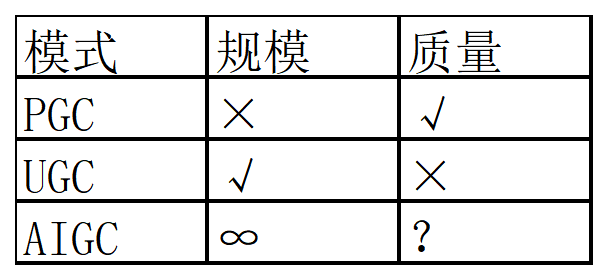

The first stage is PGC (Professionally-Generated Content): content is produced by experts, typically a professional team qualified in the relevant field. The barrier to entry and the cost are high, and quality is guaranteed to a certain extent. PGC pursues commercial returns through channels such as TV dramas and movies. Representative examples are the video platforms led by "Ayouteng" (iQiyi, Youku, and Tencent Video). On these platforms, users mostly search for and watch video resources, similar to the concept of Web1.0.

At this stage, however, the right to create rests with a few professionals, and the work of ordinary creators is rarely seen by the public. The second stage therefore gave rise to a series of UGC (User-Generated Content) platforms, such as Twitter, YouTube, and domestic video platforms such as Ayouteng. On these platforms, users are not only recipients but also content providers. The scale of content production expands greatly, but the quality of user-produced content is uneven. This can be regarded as content creation in the Web2.0 era.

So what does the content creation ecosystem look like in the Web3.0 era, and where is the connection between AIGC and Web3?

AIGC (AI-Generated Content) means that artificial intelligence assists or even replaces humans in content creation. It can serve as a powerful productivity tool that helps solve practical problems in Web3.0 and the Metaverse. It produces content at a higher frequency and can be styled to suit individual needs. It offers a practically unlimited supply of creative inspiration, and the quality of its output has a reasonable floor.

Part II: Technical Overview

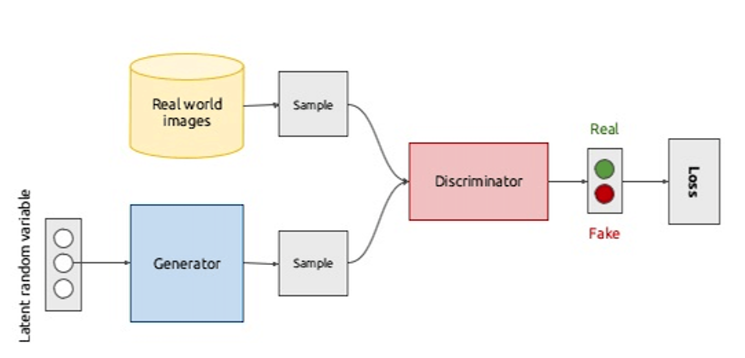

The rapid development of AIGC technology began with the publication of the GAN (Generative Adversarial Network, 2014) model. A GAN consists of two models: a generative model (the generator) and a discriminative model (the discriminator). The generator produces "fake" data and tries to fool the discriminator; the discriminator inspects the data it receives and tries to correctly identify all "fake" data. Over the training iterations, the two networks improve against each other until an equilibrium is reached.
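The adversarial game described above can be made concrete by writing out the two objectives. The following is a minimal sketch, not a full GAN: it assumes the discriminator outputs a probability that its input is real, and the function names (`bce`, `discriminator_loss`, `generator_loss`) are illustrative choices rather than anything from the original paper. The generator loss uses the commonly used non-saturating form.

```python
import math


def bce(prob, label):
    """Binary cross-entropy for a single predicted probability."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(x) -> 1 on real data and D(G(z)) -> 0 on fakes."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator wants the discriminator to label its fakes as real."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily separates real from fake,
# so its loss is low and the generator's loss is high.
early_d = discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
early_g = generator_loss(d_fake=[0.1, 0.05])

# At equilibrium the discriminator can only guess (probability 0.5 everywhere).
balanced_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
balanced_g = generator_loss(d_fake=[0.5, 0.5])
```

In an actual GAN the two losses are minimized alternately with gradient descent on two neural networks; here the discriminator outputs are made-up numbers that only illustrate how the losses move as the generator improves.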

In the two or three years after the GAN paper was published, the industry produced many variants and applications of the GAN model. In 2016 and 2017, a large number of practical applications emerged in fields such as speech synthesis, emotion detection, and face swapping.

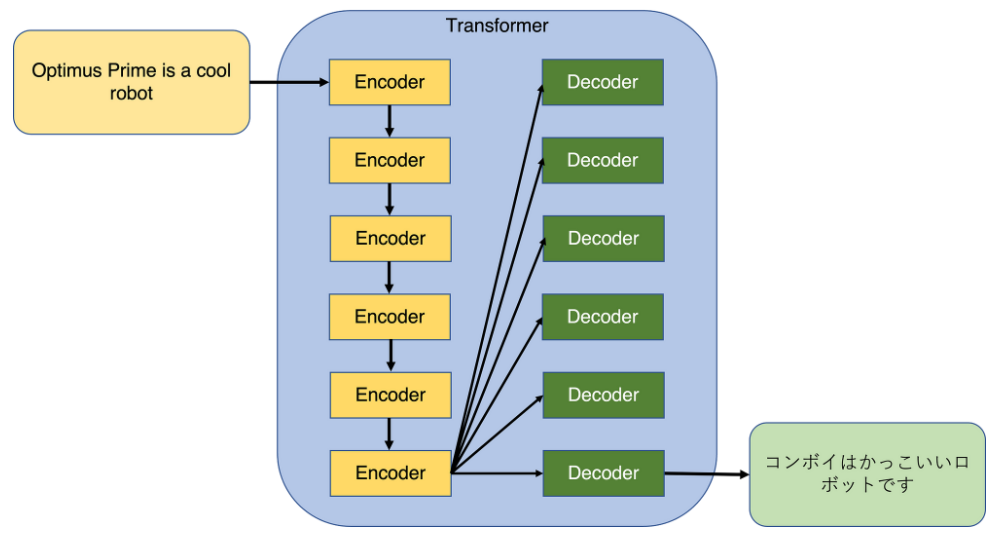

The Transformer model, developed by Google in 2017, gradually replaced traditional RNN models such as Long Short-Term Memory (LSTM) and became the model of choice for NLP problems.

As a Seq2Seq model, it relies on an attention mechanism that computes the correlation of each word with its context to determine which information matters most for the task at hand. The Transformer trains faster and retains useful information over longer spans than earlier models.

BERT (Bidirectional Encoder Representations from Transformers, 2018) uses the Transformer to build a complete model framework for natural language processing. It outperformed the existing models of its time on a range of natural language processing tasks.
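To illustrate how attention weighs each word against its context, here is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification under stated assumptions: the token vectors are made-up numbers, and the learned query, key, and value projections that a real Transformer applies first are omitted.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy embeddings for three tokens (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` says how strongly one token attends to every token in the sequence. For the first token, the weights on the first and third tokens come out larger than the weight on the second, because their vectors overlap more with it.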

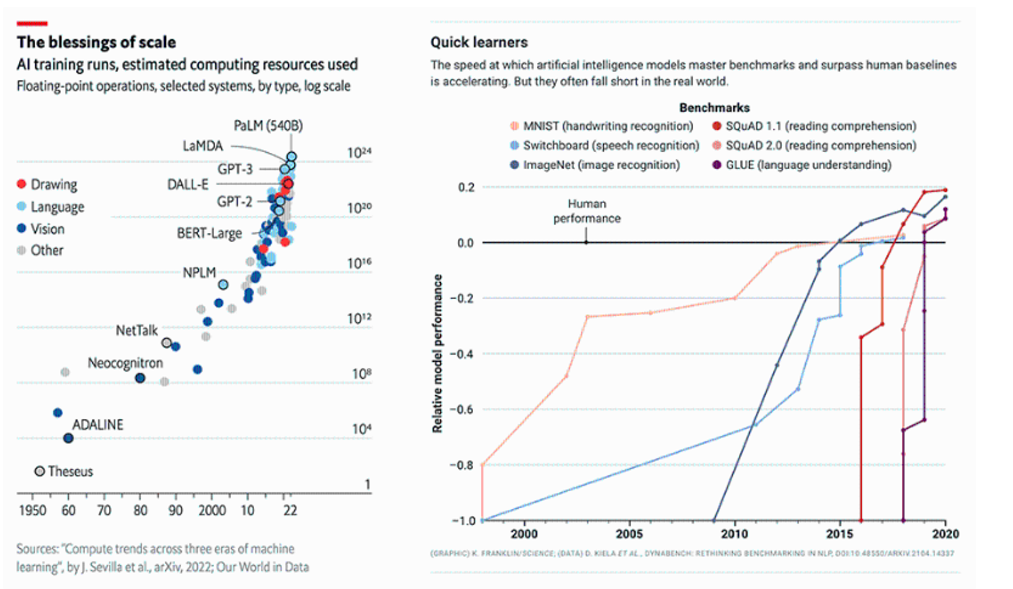

Since then, model sizes have continued to grow. The last two years have produced a number of large models such as GPT-3, InstructGPT, and ChatGPT, and their cost has also increased exponentially.

Today's language models have three characteristics: large models, large data, and large computing power. The plot above shows how quickly the number of model parameters is growing. Some have even proposed a Moore's Law for language models: a tenfold increase per year. GPT-3, the model family behind the newly released ChatGPT, has 175 billion parameters, and it is hard to imagine how many parameters GPT-4 will have.

Advantages of ChatGPT:

Human feedback: ChatGPT introduces RLHF (Reinforcement Learning from Human Feedback, 2022.03), which adds human feedback to the training data and optimizes the model against it. Because this requires a large amount of human annotation, the cost rises further.

Principles: the model follows its own principles when answering questions. Earlier chatbots picked up negative and sensitive content while chatting with users and eventually learned to produce abusive and discriminatory remarks. Unlike those models, ChatGPT can identify malicious messages and refuse to answer them.

Memory: ChatGPT supports continuous dialogue and remembers the earlier content of a conversation, so over multiple rounds of dialogue users will find that its answers keep improving.
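The human-feedback point above can be illustrated with the pairwise preference loss commonly used to train a reward model from human comparisons. This is a sketch under assumptions: the article does not describe ChatGPT's training details, the reward scores below are made-up numbers, and `preference_loss` is an illustrative name.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the reward model scores the answer that the
    human annotator preferred above the answer the annotator rejected.
    """
    diff = reward_chosen - reward_rejected
    # log(1 + exp(-diff)), written so that exp() never overflows.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# The reward model agrees with the human ranking: low loss.
agrees = preference_loss(reward_chosen=2.0, reward_rejected=0.0)

# The reward model disagrees with the human ranking: high loss.
disagrees = preference_loss(reward_chosen=0.0, reward_rejected=2.0)

# The reward model cannot tell the two answers apart: loss is log(2).
undecided = preference_loss(reward_chosen=1.0, reward_rejected=1.0)
```

Every such comparison needs a human to rank model outputs, which is why the annotation cost mentioned above grows with the amount of feedback collected.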

Link: https://new.qq.com/rain/a/20221121A04ZNE00

Part III: Industry Applications of AIGC

Among the 55 companies participating in the 2022 Qiji Chuangtan Autumn Camp, there are 19 AI-themed companies, 15 Metaverse-themed companies, and 16 large-scale model-themed companies. There are more than ten projects related to AIGC, more than half of which are image-related. Details for each project are attached at the links below:

The most popular segment of AIGC is the image field. Thanks to the industry adoption of Stable Diffusion, image AIGC saw explosive growth in 2022. Specifically, the image AIGC track has the following advantages:

Compared with the large models of natural language processing, models in the computer vision (CV) field are relatively small. Image generation also fits Web3 better, since it can be closely linked with NFTs and the Metaverse.

Compared with text, images cost people less effort to read and have always been a more intuitive and accessible form of expression.

Images are more interesting and diverse, and the technology is already fairly mature while still iterating rapidly.

Diffusion model

2022 CVPR paper "High-Resolution Image Synthesis with Latent Diffusion Models"

By repeatedly adding noise, an image can be turned into pure random noise, and the diffusion model learns how to remove that noise. The model then applies this denoising process to random noise images and produces realistic images.
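The noising half of this process has a simple closed form, sketched below. The sketch makes several assumptions that are not in the article: it uses a linear noise schedule with commonly used default values, it works on a tiny list of made-up pixel values rather than on the latent space used by the cited paper, and the names `make_alpha_bars` and `add_noise` are illustrative.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
        for x, e in zip(pixels, noise)
    ]
    return noisy, noise


rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
image = [0.5, -0.2, 0.8, 0.1]  # hypothetical normalized pixel values

# After one step the image is almost unchanged.
slightly_noisy, _ = add_noise(image, alpha_bars[0], rng)

# After the last step almost nothing of the image is left.
almost_pure_noise, target_noise = add_noise(image, alpha_bars[-1], rng)
```

During training, a network is shown the noisy input together with the step index and is asked to predict the noise that was added (`target_noise` here). Generation then runs the learned denoising step by step, starting from pure noise.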

There are also some limitations in the current image AIGC field, specifically the following:

The model has to trade off quality against efficiency, and it is still difficult to generate the accurate, customized results users expect within seconds.

These companies have high operating and maintenance costs and require a lot of graphics card equipment to drive their models.

The track has seen a slew of startups recently, with fierce competition but a lack of killer apps.

Next, let's discuss 3D-AIGC. This is a track with great potential. The current models are not yet mature, but 3D-AIGC will become essential infrastructure for the Metaverse in the future.

Similar to 2D image generation, 3D-AIGC projects can generate 3D objects and even render and build 3D scenes automatically. When the Metaverse becomes popular, there will be a large demand for three-dimensional virtual assets. A user inside a three-dimensional scene no longer needs two-dimensional pictures, but three-dimensional objects and scenes.

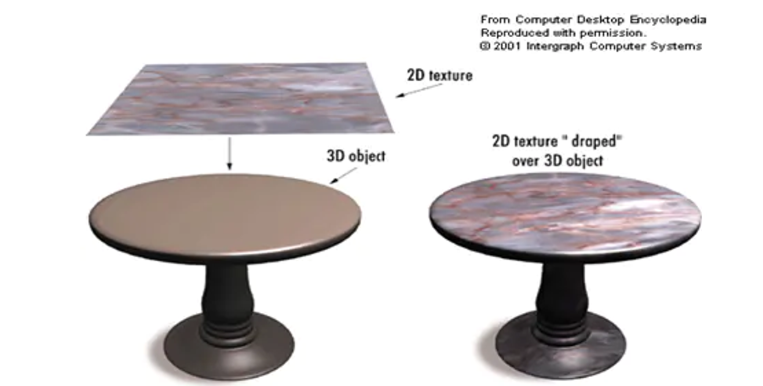

Generating virtual assets in 3D requires more considerations than generating 2D images. A three-dimensional virtual object consists of two parts: the three-dimensional shape, and the patterns on the object's surface, which we call texture.

A model can therefore generate a 3D virtual asset in two steps. Once the geometry of the 3D object has been obtained, the surface texture can be applied through texture mapping, environment mapping, and other methods.

Describing the geometry of a three-dimensional object also involves a choice among several representations. There are explicit representations, such as meshes and point clouds, and implicit representations, such as algebraic surfaces and NeRF (Neural Radiance Fields). The representation has to be chosen to suit the model.
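The difference between explicit and implicit representations can be shown with a small sketch. Everything here is illustrative: the tetrahedron, its UV coordinates, and the function names are made up for the example, and a signed distance function for a sphere stands in for the implicit case (a NeRF would replace that function with a trained neural network).

```python
import math
import random

# Explicit representation 1: a triangle mesh (here a tetrahedron).
# "uvs" holds one texture coordinate per vertex, which is what texture
# mapping uses to look up surface colors in a 2D image.
mesh = {
    "vertices": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    "faces": [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)],
    "uvs": [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)],
}


# Implicit representation: the surface is where this function equals zero.
def sphere_sdf(point, radius=1.0):
    """Signed distance to a sphere: negative inside, positive outside."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z) - radius


# Explicit representation 2: a point cloud sampled on the sphere's surface.
def sample_point_cloud(num_points, radius=1.0, seed=0):
    rng = random.Random(seed)
    points = []
    for _ in range(num_points):
        # A normalized Gaussian vector gives a uniform direction on the sphere.
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        points.append(tuple(radius * c / norm for c in v))
    return points


cloud = sample_point_cloud(100)
```

The mesh and the point cloud store the surface directly as data, while the implicit form stores a rule that can be queried at any point in space. That trade-off is one reason the representation has to match the generating model.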

In short, all of these steps ultimately need to be integrated into a single pipeline from text to 3D images. The pipeline is relatively long, and there is no mature application-side model yet. But the popularity of the diffusion model will prompt many researchers to study 3D generation further, and the models in this direction are also iterating rapidly.

Technologies such as VR and XR must interact with people and have strict real-time requirements. 3D AIGC has lower real-time requirements, so its barrier to application is lower and it can be deployed faster.

Part IV: AIGC and Web3

It is often said that AIGC is a productivity tool for the Web3.0 era: AIGC supplies the productive capacity, while Web3.0 and blockchain applications determine production relations and user sovereignty.

But we must recognize that AIGC and Web3 are two different directions. As a production tool built on AI technology, AIGC can be applied in both the Web2 world and the Web3 world, and most projects developed so far are still Web2 projects, so it is inappropriate to treat the two as a single topic. Web3, for its part, aims to use blockchain and smart contract technology to give users sovereignty over their virtual assets; it has no direct connection to how content is created.

But there are indeed many similarities between the two:

On the one hand, both rely on programs to optimize existing production and creation models. AIGC replaces humans with AI for creation, and Web3 replaces human-run centralized institutions with decentralized programs such as smart contracts and blockchains. Using machines instead of humans removes subjective errors and bias and significantly improves efficiency.

On the other hand, Web3 and Metaverse will have a great demand for two-dimensional pictures and audio, three-dimensional virtual objects and scenes, and AIGC is a good way to meet them.

However, while the concept of Web3.0 has yet to reach the general public, almost all emerging projects are Web2 projects, and applications in the Web3 field still mostly use AIGC image generation for NFT creation.

In fact, on the application side, the link between AIGC and Web3.0 cannot rest solely on the link between "productivity" and "production relations", because AIGC can also bring productivity gains to Web2 projects, which leaves Web3 projects without an obvious advantage.

Therefore, to seize the opportunity of AIGC development, I think current Web3 projects need to improve in the following two respects:

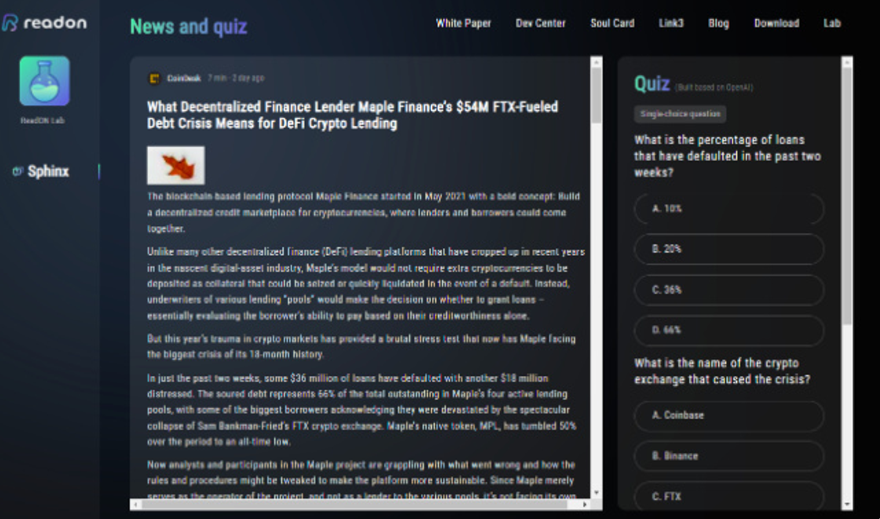

The first is to look for Web3.0-native projects supported by AIGC, that is, projects that only make sense on the Web3 side. Put differently, think about how AIGC can solve the dilemmas Web3 projects currently face; such solutions are also native to Web3. For example, ReadOn uses AIGC to generate quizzes on articles, opening up a new Proof of Read model: it addresses the long-standing problem of reward farming in ReadFi and gives token rewards to users who actually read. This is hard to do, but Web3 needs this kind of model innovation.
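The quiz-gated reward idea can be sketched in a few lines. This is a hypothetical illustration and not ReadOn's actual implementation: the class and function names, the pass threshold, and the reward amount are all assumptions, and the quiz is hard-coded here where a real system would have a language model generate it from the article.

```python
from dataclasses import dataclass


@dataclass
class QuizQuestion:
    prompt: str
    choices: list
    answer_index: int


def grade_quiz(questions, submitted):
    """Fraction of questions answered correctly."""
    correct = sum(1 for q, a in zip(questions, submitted) if a == q.answer_index)
    return correct / len(questions)


def reward_for_reading(questions, submitted, pass_ratio=0.8, reward_tokens=10):
    """Pay tokens only if the reader passes the quiz (hypothetical rule)."""
    if len(submitted) != len(questions):
        return 0  # an incomplete submission earns nothing
    return reward_tokens if grade_quiz(questions, submitted) >= pass_ratio else 0


# Hypothetical quiz about this article.
quiz = [
    QuizQuestion("What does AIGC stand for?",
                 ["AI-Generated Content", "AI Graphics Card"], 0),
    QuizQuestion("Which stage came first?", ["UGC", "PGC"], 1),
]

careful_reader = reward_for_reading(quiz, [0, 1])  # both answers correct
reward_farmer = reward_for_reading(quiz, [1, 0])   # guessed without reading
```

A bot that only opens articles to farm rewards would have to answer article-specific questions, which raises the cost of farming compared with rewarding page views alone.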

The second is to use AIGC to improve the efficiency and user experience of existing Web3 applications. At present, AIGC is applied mainly to images and NFTs, but creation is a very broad concept with many forms besides pictures. The 3D-AIGC mentioned above is one application channel to consider for the Metaverse, and quiz generation is another idea that stands out at the moment. eduDAOs and developer platforms can consider using AIGC to empower education, for example to set questions, modify modular code, or generate unit tests. GameFi can consider whether AIGC could act as an NPC in a game, or even whether AIGC's coding ability could be used to generate smart contracts.

Video link: https://www.bilibili.com/video/BV17D4y1p7EY/spm_id_from=333.999.0.0

Thanks:

DAOrayaki, a decentralized media and research organization, publicly funded THUBADAO to conduct independent research and share the results publicly. The research topics focus mainly on Web3, DAOs, and related fields. This article is the sixth installment in the series sharing the funded results.

DAOrayaki is a fully functional decentralized media platform and research organization representing the will of the community. It aims to link creators, funders, and readers, and provide multiple governance tools such as Bounty, Grant, and prediction markets, and encourage the community to freely conduct research, curate, and report on various topics.
