Original author: Jiawei, IOSG Ventures

Original editor: Olivia, IOSG Ventures

tl;dr:

If "The Merge" goes well, sharding will become the main development axis of Ethereum in 2023 and beyond. Since sharding was proposed in 2015, its meaning has changed a lot.

After Vitalik put forward the "Rollup-centric Ethereum roadmap" and Ethereum's "Endgame", the general direction of Ethereum underwent a de facto change: Ethereum is "retreating behind the scenes" to serve as the security guarantee and data availability layer for Rollups.

Danksharding and Proto-Danksharding are best understood as a combination of techniques: a series of one-two punches in which a problem is identified and a new technique is introduced or proposed to solve it.

Introduction

In the blink of an eye, 2022 is already halfway through. Looking back at the Serenity roadmap Vitalik presented in his Devcon speech in 2018, it is easy to see that Ethereum's development path has changed several times. In the current roadmap, sharding has taken on a new meaning, and eWASM is rarely mentioned.

To avoid potential scams and user confusion, the Ethereum Foundation announced at the end of January this year that it would retire the term "ETH2". The current Ethereum mainnet is now called the "execution layer", which processes transactions and execution. What was previously called "ETH2" is now called the "consensus layer", which coordinates and processes PoS.

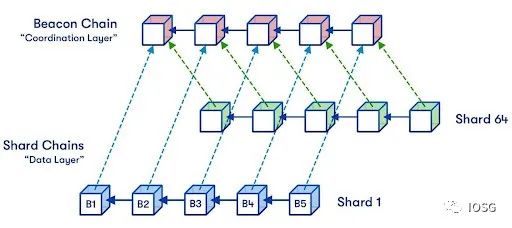

Currently, Ethereum's official roadmap covers three parts: the Beacon Chain, the Merge, and sharding.

Of these, the Beacon Chain laid the groundwork for Ethereum's migration to PoS and serves as the coordination network of the consensus layer. It launched on December 1, 2020 and has now been running for nearly 20 months.

In this article, we will focus on sharding, for two reasons:

First, assuming the mainnet Merge is completed successfully within the year, sharding will follow as the main axis of Ethereum development in 2023.

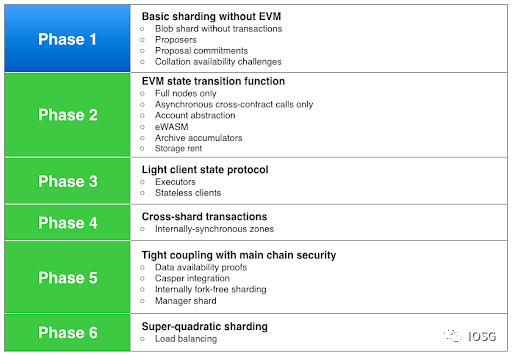

Second, the concept of Ethereum sharding was first proposed by Vitalik at Devcon 1 in 2015. Since then, six development phases of sharding have been laid out in the Sharding FAQ on GitHub (as shown in the figure above). However, as the Ethereum roadmap has been updated and related EIPs have advanced, the meaning and priority of sharding have changed a lot. When we discuss sharding, we first need to agree on what it means.

For both reasons, it is important to trace the ins and outs of sharding. This article focuses on the origin, progress, and future route of Ethereum's original sharding, Danksharding, and Proto-Danksharding, rather than going into every technical detail. For details on Danksharding and Proto-Danksharding, please refer to IOSG's previous articles: "Will the expansion killer Danksharding be the future of Ethereum sharding?" and "EIP4844: L2 transaction fees will be opened soon to reduce the foreseeable depression effect".

Quick Review

Rollups, data availability, and sharding will be mentioned several times in this article.

Let's quickly go over the basic concepts of all three here.

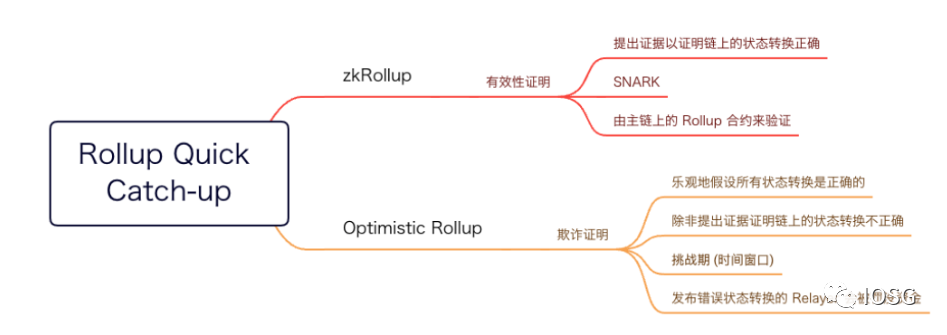

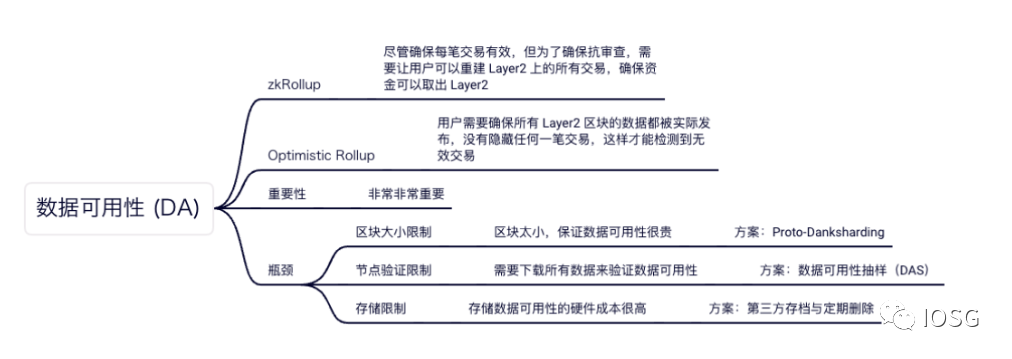

Current mainstream Rollups fall into two types: zkRollups and Optimistic Rollups.

- **zkRollups** are based on validity proofs. Transactions are executed in batches, and cryptographic proofs (SNARKs) guarantee that the state transitions are correct.
- **Optimistic Rollups** "optimistically" assume that all state transitions are correct unless proven otherwise. This means a waiting period (the challenge window) is needed so that incorrect state transitions can be detected.
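
The sketch below makes the optimistic model concrete. It is a minimal Python sketch built on two hypothetical simplifications: the rollup's state is a balance map, and its state root is a SHA-256 hash of that map. Real fraud proofs are typically interactive and verified on L1, and the challenge window is not modeled here. The point is only that a single honest watcher who re-executes a batch can expose a false state root.

```python
import hashlib
import json

def state_root(balances: dict) -> str:
    # Hypothetical state commitment: SHA-256 of the canonically serialized balance map.
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()

def apply_batch(balances: dict, batch: list) -> dict:
    # Execute (sender, receiver, amount) transfers in order; unfunded transfers are skipped.
    new = dict(balances)
    for sender, receiver, amount in batch:
        if new.get(sender, 0) < amount:
            continue
        new[sender] = new.get(sender, 0) - amount
        new[receiver] = new.get(receiver, 0) + amount
    return new

def fraud_proof_succeeds(pre_state: dict, batch: list, claimed_root: str) -> bool:
    # An honest watcher re-executes the batch; any mismatch shows the posted root is wrong.
    return state_root(apply_batch(pre_state, batch)) != claimed_root

pre = {"alice": 10, "bob": 0}
batch = [("alice", "bob", 4)]
honest_root = state_root(apply_batch(pre, batch))
forged_root = state_root({"alice": 0, "bob": 10})
print(fraud_proof_succeeds(pre, batch, honest_root))  # False: the claim stands
print(fraud_proof_succeeds(pre, batch, forged_root))  # True: the claim is rejected
```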

Ethereum full nodes store the complete EVM state and take part in verifying every transaction. This ensures decentralization and security, but it creates a scalability problem: transactions are executed sequentially, and having every node confirm each one is inherently inefficient.

In addition, Ethereum network data keeps accumulating (currently up to 786GB), so the hardware requirements for running a full node rise accordingly. Fewer full nodes would create potential single points of failure and reduce decentralization.

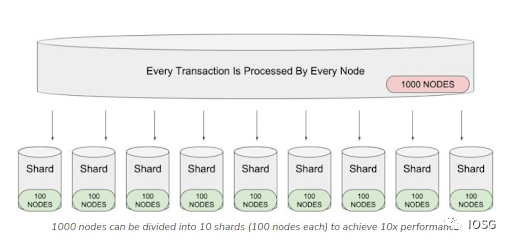

Intuitively, sharding is a division of labor. Nodes are split into groups, each transaction only needs to be verified by a single group, and transaction records are periodically submitted to the main chain, so transactions are processed in parallel.

For example, take 1,000 nodes. Without sharding, every transaction must be verified by all 1,000 nodes. If the nodes are divided into 10 groups of 100, each transaction is verified by only one group of 100 nodes, and the groups work in parallel, which greatly improves efficiency.

Sharding thus improves scalability while lowering the hardware requirements for each group of nodes, addressing both problems above.
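
The 1,000-node example can be written out as a short Python calculation. This is an idealized sketch under stated assumptions:

- load is split evenly across groups;
- every node verifies a hypothetical fixed number of transactions per second (`node_capacity_tps`);
- cross-shard communication and beacon-chain overhead are ignored.

```python
def shard_stats(num_nodes: int, num_groups: int, node_capacity_tps: int) -> dict:
    # Each transaction is verified by one group only, so group size = verifiers per tx.
    group_size = num_nodes // num_groups
    # Groups work in parallel, so total throughput scales with the number of groups.
    return {
        "verifiers_per_tx": group_size,
        "throughput_tps": node_capacity_tps * num_groups,
    }

print(shard_stats(1000, 1, 15))   # {'verifiers_per_tx': 1000, 'throughput_tps': 15}
print(shard_stats(1000, 10, 15))  # {'verifiers_per_tx': 100, 'throughput_tps': 150}
```

The flip side, not captured here, is that a smaller group is cheaper to corrupt. This is why sharding designs assign nodes to groups randomly.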

Background

Before discussing Danksharding, let's take a moment to understand its background. In my view, the community consensus behind Danksharding stems mainly from two articles by Vitalik, which set the tone for Ethereum's future direction.

First, in October 2020 Vitalik published the "Rollup-centric Ethereum Roadmap", proposing that Ethereum focus on supporting Rollups in the short to medium term. It has two parts:

- **Base-layer scaling.** Scaling would concentrate on increasing the data capacity of blocks, rather than improving the efficiency of on-chain computation or IO operations. That is, Ethereum sharding is designed to provide more space for data blobs (rather than transactions). Ethereum does not need to interpret this data, only to guarantee that it is available.
- **Infrastructure.** Ethereum's infrastructure would be adjusted to support Rollups, for example L2 support in ENS, L2 integration in wallets, and cross-L2 asset transfers.

Then, in "Endgame", published in December 2021, Vitalik described the final picture of Ethereum. Block production is centralized, but block validation is trustless and highly decentralized, and censorship resistance is preserved. The base chain guarantees the data availability of blocks, while Rollups guarantee their validity. In zkRollups this is achieved through SNARKs; in Optimistic Rollups, only one honest participant needs to run a fraud-proof node.

Similar to the multi-chain ecosystem of Cosmos, the future of Ethereum will be many coexisting Rollups, all built on the data availability and shared security provided by Ethereum. Users will rely on bridges to move between Rollups without paying the high fees of the main chain.

These two articles essentially set Ethereum's development direction: optimize the Ethereum base layer to serve Rollups. The reasoning seems to be that Rollups have already proven effective and well adopted, so "rather than spending years waiting for an uncertain and complicated scaling plan (note: the original sharding), it is better to focus on Rollup-based solutions."

Proto-Danksharding

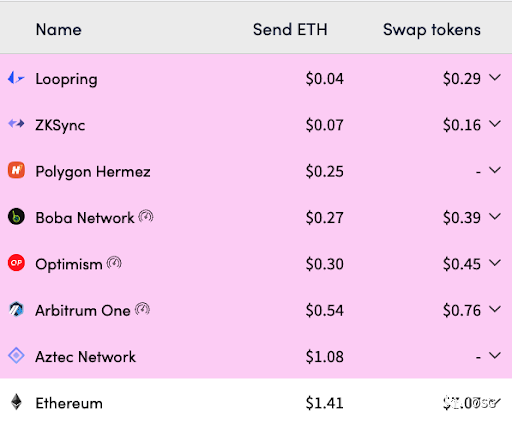

Rollups have greatly reduced transaction costs compared with the Ethereum main chain, but costs are still not as low as ideal. This is the motivation for Proto-Danksharding. The main reason is that CALLDATA, which Rollups use for data availability on the Ethereum main chain, is still expensive (16 gas per non-zero byte). In the original design, data sharding was to provide 16MB of dedicated data space per block for Rollups, but the actual implementation of data sharding is still a long way off.
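
The CALLDATA cost can be estimated with a small Python function. It applies the EIP-2028 pricing of 16 gas per non-zero byte and 4 gas per zero byte. Two inputs are illustrative assumptions:

- a 125,000-byte payload, roughly the blob size mentioned in this article;
- a 30M gas block limit, used only for comparison.

```python
def calldata_gas(data: bytes) -> int:
    # EIP-2028 calldata pricing: 4 gas per zero byte, 16 gas per non-zero byte.
    zero_bytes = data.count(0)
    return 4 * zero_bytes + 16 * (len(data) - zero_bytes)

payload = bytes([1]) * 125_000   # ~125 kB of (non-zero) rollup data
gas = calldata_gas(payload)
print(gas)                       # 2000000
print(gas / 30_000_000)          # share of an assumed 30M gas block limit, about 0.067
```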

On February 25 this year, Vitalik and Dankrad proposed EIP-4844 (Shard Blob Transactions), also known as Proto-Danksharding. It aims to scale Ethereum's data availability in a simple, forward-compatible way that remains usable after Danksharding arrives. Once this proposal is in place, the remaining changes needed to reach full Danksharding occur only on the consensus layer. They require no additional adaptation work from execution-layer client teams, users, or Rollup developers.

Proto-Danksharding does not actually implement sharding. Instead, it introduces a transaction format called "blob-carrying transactions" in preparation for future sharding. Unlike an ordinary transaction, this format carries an additional chunk of data called a blob (about 125kB). This effectively makes blocks larger and provides data availability that is cheaper than CALLDATA (about 10kB).

However, the general problem with "big blocks" is the ever-growing demand for disk space. Adopting Proto-Danksharding will add about 2.5TB of storage to Ethereum per year (by comparison, the entire network's data is currently only 986GB). Proto-Danksharding therefore sets a retention window (for example, 30 days) after which blobs are deleted. Users or protocols can back up the blob data during this window.
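
The 2.5TB-per-year figure and the cost of a 30-day window can be reproduced with simple arithmetic. The sketch below assumes a target of about 1 MiB of blob data per 12-second slot, which is the target discussed for early versions of EIP-4844. Treat that number as an assumption rather than a final protocol parameter.

```python
SECONDS_PER_SLOT = 12
MIB, GIB, TIB = 1024 ** 2, 1024 ** 3, 1024 ** 4

def blob_bytes(days: int, bytes_per_slot: int = MIB) -> int:
    # Assumption: every slot carries the target amount of blob data.
    slots = days * 24 * 3600 // SECONDS_PER_SLOT
    return slots * bytes_per_slot

print(blob_bytes(365) / TIB)  # ~2.51 TiB if blobs were kept forever
print(blob_bytes(30) / GIB)   # 210.9375 GiB held at any time with a 30-day window
```

In other words, a 30-day window caps what a node must keep at roughly one-twelfth of a year's worth of blob data.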

In other words, Ethereum's consensus layer serves only as a highly secure "real-time bulletin board". It keeps this data available long enough for other users or protocols to back it up, rather than having Ethereum retain all historical blob data permanently.
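
The "bulletin board" behavior can be sketched as a node-side cache that forgets blobs once they fall outside the retention window. `BlobStore` and its methods are hypothetical names for illustration, and real clients implement pruning differently. This sketch also assumes blobs arrive in increasing slot order.

```python
from collections import OrderedDict

class BlobStore:
    """Hypothetical blob cache that keeps only the last `retention_slots` slots."""

    def __init__(self, retention_slots: int):
        self.retention_slots = retention_slots
        self.blobs = OrderedDict()  # slot -> blob bytes, oldest first

    def add(self, slot: int, blob: bytes) -> None:
        self.blobs[slot] = blob
        self.prune(current_slot=slot)

    def prune(self, current_slot: int) -> None:
        # Drop blobs that have aged out; anyone who needs them must have backed them up.
        while self.blobs:
            oldest = next(iter(self.blobs))
            if current_slot - oldest < self.retention_slots:
                break
            self.blobs.popitem(last=False)

    def get(self, slot: int):
        return self.blobs.get(slot)  # None once the blob has been pruned

store = BlobStore(retention_slots=216_000)  # 30 days of 12-second slots
store.add(0, b"blob-0")
store.add(216_000, b"blob-216000")
print(store.get(0))        # None: slot 0 is outside the window
print(store.get(216_000))  # b'blob-216000'
```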

The reason is that 2.5TB per year is not a problem for dedicated storage, but it would be a heavy burden on Ethereum nodes. As for the trust assumption, storing historical data only requires one honest storage party (1-of-N) for the system to work. There is no need for the validator set that participates in verification and runs consensus in real time (an honest majority, N/2-of-N) to store this historical data.

So is there any incentive for third parties to store this data? The author has not yet seen an incentive scheme launched, but Vitalik himself proposed several possible ways to store the data:

Application-specific protocols (such as Rollups). They can require their nodes to store the historical data relevant to the application; losing that data would put the application at risk, so they are motivated to store it;

BitTorrent;

Ethereum’s Portal Network, a platform that provides lightweight access to the protocol;

Blockchain browsers, API providers or other data service providers;

Individual enthusiasts or scholars engaged in data analysis;

Third-party indexing protocols such as The Graph.

In the Proto-Danksharding section, we noted that the new transaction format makes blocks larger. Rollups also accumulate a large amount of data, which nodes would need to download to ensure data availability.

The idea of data availability sampling (DAS) is as follows. Divide the data into N chunks, and have each node randomly download K of them. A node can then check whether all the data is available without downloading all of it, which greatly reduces the burden on nodes. But what if a single chunk is lost? Randomly downloading K chunks is very unlikely to reveal that one particular chunk is missing.
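
A quick Python calculation shows why naive sampling is not enough. It makes three assumptions:

- the data is not erasure-coded;
- samples are drawn uniformly at random with replacement;
- illustrative values of N = 1,000 chunks with one missing and K = 30 samples.

```python
def detection_probability(num_chunks: int, num_missing: int, samples: int) -> float:
    # Probability that at least one random sample hits a missing chunk (no erasure coding).
    p_hit_available = (num_chunks - num_missing) / num_chunks
    return 1 - p_hit_available ** samples

print(detection_probability(1000, 1, 30))  # about 0.03: 30 samples miss the gap ~97% of the time
```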

To make DAS work, erasure coding is introduced. Erasure coding is a fault-tolerant coding technique. The data is split into segments, redundant check data is added, and the segments are linked together. Even if some segments are lost, the complete data can still be recovered algorithmically.
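
Reed-Solomon coding, the erasure code Danksharding uses, can be illustrated with polynomial interpolation. Treat k data values as evaluations of a polynomial of degree below k, publish 2k evaluations, and recover the data from any k of them. The sketch below is a toy with these simplifications:

- a small prime field (2^31 - 1) is assumed;
- interpolation uses naive O(k^2) Lagrange interpolation;
- the evaluation points 0..2k-1 are a simplification.

Production implementations instead work over the BLS12-381 scalar field with FFT-based algorithms.

```python
P = 2**31 - 1  # toy prime modulus (assumption; real systems use a much larger field)

def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # division via Fermat inverse
    return total

def extend(data):
    """Encode k values as p(0..k-1) and append p(k..2k-1): a 2x (50% rate) extension."""
    k = len(data)
    points = list(enumerate(data))
    return data + [lagrange_eval(points, x) for x in range(k, 2 * k)]

def recover(survivors, k):
    """Rebuild p(0..k-1) from any k surviving (index, value) pairs."""
    return [lagrange_eval(survivors[:k], x) for x in range(k)]

data = [5, 17, 42, 99]
coded = extend(data)                               # 8 chunks; any 4 suffice
survivors = [(i, coded[i]) for i in (1, 4, 6, 7)]  # half the chunks were lost
print(recover(survivors, len(data)) == data)       # True
```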

Suppose the erasure code's redundancy is set to 50%, meaning the data is extended to twice its original size. Then, as long as 50% of the extended block data is available, anyone in the network can reconstruct the full block data and broadcast it. To deceive nodes, an attacker must therefore hide more than 50% of the chunks, and this is almost certain to be caught after a number of random samples. (For example, with 30 random samples, the probability that every sample happens to land on an available chunk, even though the attacker has hidden more than half of them, is at most 2^(-30))…
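
With a 2x (50%) erasure-code extension, the sampling math changes completely. Here is a minimal Python sketch, assuming independent uniform samples and an attacker who publishes at most half of the extended chunks (publishing more would let the network reconstruct the block).

```python
import math

def escape_probability(available_fraction: float, samples: int) -> float:
    # Probability that every sample lands on a published chunk, so the node is fooled.
    return available_fraction ** samples

def samples_needed(target: float, available_fraction: float = 0.5) -> int:
    # Smallest number of samples that brings the escape probability to or below `target`.
    return math.ceil(math.log(target) / math.log(available_fraction))

print(escape_probability(0.5, 30))  # 2**-30, about 9.3e-10
print(samples_needed(1e-9))         # 30
```

Unlike the un-coded case, the attacker can no longer get away with hiding a single chunk. Hiding anything less than half is simply repaired by reconstruction.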

Nodes do not download all the data but rely on erasure coding to reconstruct it. They therefore first need to be sure the data has been erasure-coded correctly, since wrongly encoded data obviously cannot be used to reconstruct the original.

For this purpose, KZG polynomial commitments are introduced. A polynomial commitment is a compact value that "represents" a polynomial. It can be used to prove that the polynomial's value at a particular point equals a specified value, without revealing all of the polynomial's data. In Danksharding, KZG commitments are used to verify that the erasure coding is correct.
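
For readers who want the mechanics, the standard KZG opening check is summarized below. It is stated from the general literature, not from the original article, and the notation is an assumption:

- $[a]_1$ and $[a]_2$ denote $a$ times the generators of the pairing groups $G_1$ and $G_2$;
- $s$ is the trusted-setup secret, known to no one;
- $e$ is a bilinear pairing.

To commit to $p(X)$ and prove $p(z) = y$:

$$
C = [p(s)]_1, \qquad q(X) = \frac{p(X) - y}{X - z}, \qquad \pi = [q(s)]_1
$$

$$
e\big(\pi,\ [s - z]_2\big) \stackrel{?}{=} e\big(C - [y]_1,\ [1]_2\big)
$$

The division is exact only if $p(z) = y$. In that case the pairing equation holds, because $p(s) - y = q(s)(s - z)$. The commitment $C$ and the proof $\pi$ are each a single group element, regardless of the polynomial's degree.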

It would be simple to put all the data into a single KZG commitment. However, building that commitment, or reconstructing the data once part of it becomes unavailable, would both demand huge resources. (In fact, the data of a single block already requires multiple KZG commitments.) To reduce the burden on nodes and avoid centralization, Danksharding splits the data across many KZG commitments arranged in a two-dimensional KZG commitment scheme.

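With these problems solved in turn, Danksharding no longer overburdens nodes:

- Thanks to DAS, nodes or light clients only need to randomly download K chunks to verify that all the data is available.
- Erasure coding lets the data be reconstructed even if some chunks are lost.
- KZG commitments guarantee that the erasure coding is correct.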

(Note: specifically, the erasure code used in Danksharding is the Reed-Solomon code. The KZG commitment is a polynomial commitment scheme published by Kate, Zaverucha, and Goldberg. I will not go into them here; readers interested in the underlying algorithms can explore them further. Another way to ensure that erasure coding is correct is the fraud-proof approach adopted by Celestia.)

Separation of block proposers and builders (PBS)

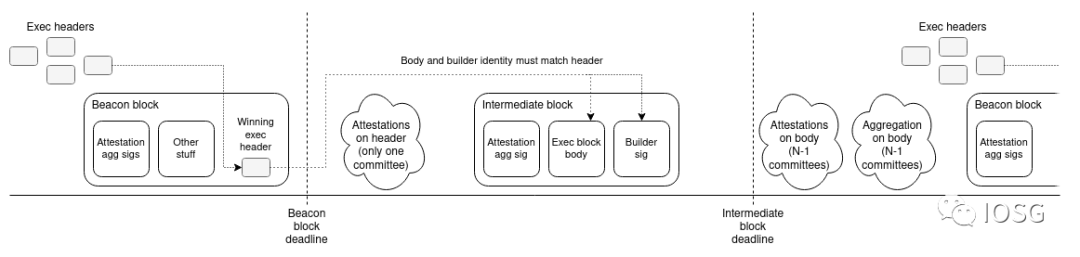

Currently, PoW miners and PoS validators act as both block builders (Builders) and block proposers (Proposers). In PoS, validators can use MEV profits to acquire more validator seats, which gives them more opportunities to extract MEV. In addition, large validator pools are clearly better at capturing MEV than ordinary validators, which leads to serious centralization. PBS therefore proposes separating the Builder and Proposer roles.

PBS works as follows. Builders construct an ordered list of transactions and submit bids to Proposers. The Proposer simply accepts the transaction list with the highest bid, and no one can see the contents of a transaction list until the auction winner is selected.
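
The commit-then-reveal flow can be sketched in Python. This is a hypothetical model, not the two-slot PBS design or any client API. `Bid`, `commit`, `select_winner`, and `reveal_ok` are illustrative names, and a SHA-256 hash stands in for the Builder's commitment to its hidden transaction list.

```python
import hashlib
from dataclasses import dataclass

@dataclass
class Bid:
    builder: str
    value_gwei: int    # payment promised to the Proposer
    payload_hash: str  # commitment to the still-hidden transaction list

def commit(payload: bytes) -> str:
    return hashlib.sha256(payload).hexdigest()

def select_winner(bids: list) -> Bid:
    # The Proposer sees only bid values and commitments, never the transactions.
    return max(bids, key=lambda b: b.value_gwei)

def reveal_ok(winner: Bid, payload: bytes) -> bool:
    # After the winner is chosen, the revealed payload must match the earlier commitment.
    return commit(payload) == winner.payload_hash

payloads = {"A": b"tx1,tx2", "B": b"tx3,tx1,tx2"}
bids = [Bid("A", 120, commit(payloads["A"])), Bid("B", 150, commit(payloads["B"]))]
winner = select_winner(bids)
print(winner.builder, winner.value_gwei)  # B 150
print(reveal_ok(winner, payloads["B"]))   # True
print(reveal_ok(winner, b"tx3"))          # False: a swapped payload is rejected
```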

This separation and auction mechanism creates intense competition ("involution") among Builders. Each Builder has a different ability to capture MEV, so it must weigh its potential MEV profit against its auction bid, which reduces the net MEV income it actually keeps. Moreover, the winning Builder must pay the bid to the Proposer whether or not its block is successfully produced. In this way, Proposers (broadly, the whole validator set, randomly reselected over time) share a portion of MEV revenue, which weakens the centralizing effect of MEV.
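
The squeeze on Builder margins can be illustrated with a toy auction. Assume (hypothetically) an open ascending auction in which each Builder is willing to bid up to its own MEV estimate. The winner then only needs to outbid the runner-up, so most of the MEV flows to the Proposer.

```python
def auction_outcome(mev_estimates: dict, increment: int = 1):
    """Toy open ascending auction; needs at least two Builders.
    Returns (winner, price paid to the Proposer, Builder's net MEV)."""
    ranked = sorted(mev_estimates.items(), key=lambda kv: kv[1], reverse=True)
    (winner, top), (_, runner_up) = ranked[0], ranked[1]
    price = min(top, runner_up + increment)  # just enough to beat the runner-up
    return winner, price, top - price

print(auction_outcome({"A": 100, "B": 140, "C": 150}))  # ('C', 141, 9)
```

With closely matched Builders, the winner's net take (9 here) is small relative to the 150 of MEV it captured, which is the competitive squeeze described above.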

The above describes how PBS helps with MEV, but there is another reason to introduce it. In Danksharding, a Builder has two demanding tasks:

- compute KZG proofs for 32MB of data in about 1 second, which requires a 32-64 core CPU;
- broadcast 64MB of data peer-to-peer within a certain time, which requires 2.5 Gbit/s of bandwidth.

Ordinary validators clearly cannot meet these requirements.

In October last year, Vitalik proposed a two-slot PBS scheme (note: a slot is 12 seconds, the beacon chain's unit of time), but the concrete PBS design is still under discussion.

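However, PBS also introduces a problem. A Builder who always bids highest (even at an economic loss) to win the auction effectively gains the ability to censor transactions, since it can selectively leave certain transactions out of the block. To counter this, a censorship-resistance list (crList) has been proposed: the Proposer publishes a list of transactions that the Builder must include unless the block is already full.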
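
A censorship-resistance list (crList), one proposed countermeasure, can be expressed as a block-validity rule. The Proposer publishes a list of transactions, and the Builder's block must include all of them unless it is full. The sketch below is a simplified, hypothetical version, and real proposals differ in detail. It decides whether a block is "full" with a `min_tx_gas` threshold of 21,000 (the base cost of a plain transfer), which is an assumption.

```python
def satisfies_crlist(block_txs: list, crlist: list, gas_used: int, gas_limit: int,
                     min_tx_gas: int = 21_000) -> bool:
    # Hypothetical rule: every crList transaction is included, or the block has no room left.
    included = set(block_txs)
    missing = [tx for tx in crlist if tx not in included]
    block_full = gas_limit - gas_used < min_tx_gas
    return not missing or block_full

print(satisfies_crlist(["a", "b"], ["a", "c"], 10_000_000, 30_000_000))  # False: "c" censored
print(satisfies_crlist(["a", "b"], ["a", "c"], 29_990_000, 30_000_000))  # True: block is full
print(satisfies_crlist(["a", "c"], ["a", "c"], 10_000_000, 30_000_000))  # True
```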

Summary

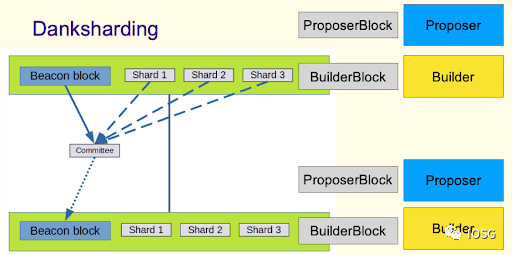

Combining the above Data Availability Sampling (DAS), Proposer-Builder Separation (PBS), and censorship-resistance lists (crList) yields full Danksharding. Notice that the concept of "sharding" has actually been downplayed. Although the name "sharding" is retained, the real focus has shifted to supporting data availability.

So what are the advantages of Danksharding over original sharding?

(Dankrad himself listed 10 advantages of Danksharding; we pick two to explain in detail.)

In the original sharding design, each shard has its own proposer and committee, which vote on transaction validity within that shard. All the votes are then collected by the beacon chain proposer, which is hard to complete within a single slot. In Danksharding, there is only one committee, on the beacon chain (broadly, the validator set, randomly reselected over time), and it verifies both beacon chain blocks and shard data. This simplifies the original 64 groups of proposers and committees into a single group, greatly reducing both theoretical and engineering complexity.

Another advantage of Danksharding is that it makes synchronous calls between the Ethereum main chain and zkRollups possible. As mentioned above, in the original sharding design the beacon chain must collect voting results from all shards, which delays confirmation. In Danksharding, beacon chain blocks and shard data are attested together by the beacon chain's committee, so transactions in a beacon block can access shard data immediately. This opens up more composability. For example, the distributed AMM (dAMM) proposed by StarkWare can perform swaps or share liquidity across L1 and L2, addressing liquidity fragmentation.

After Danksharding is implemented, Ethereum will become Rollup's unified settlement layer and data availability layer.

Closing Thoughts

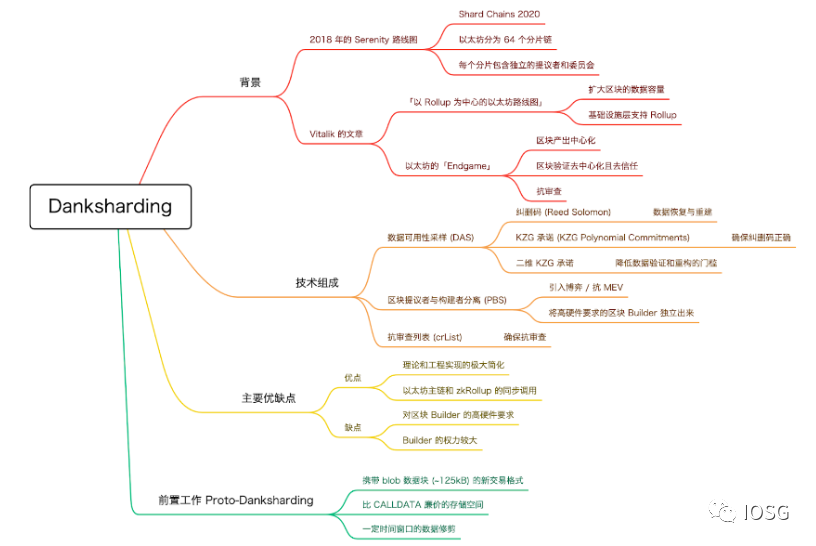

In the figure above, we make a summary of Danksharding.

In summary, the direction of Ethereum's roadmap over the next 2 to 3 years is very clear: it revolves around serving Rollups. Danksharding is expected to be implemented in the next 18-24 months and Proto-Danksharding in 6-9 months, although it is still unknown whether the roadmap will change along the way. At the very least, it is clear that Rollups, as the basis for Ethereum's scaling, hold a dominant position.

Based on Vitalik's outlook, we also offer some predictions and conjectures:

First, a multi-chain ecosystem similar to Cosmos: in the future, multiple Rollups will compete on Ethereum, with Ethereum guaranteeing their security and data availability.

Second, cross-L1/Rollup infrastructure will become an essential need. Cross-domain MEV will produce more complex arbitrage combinations, and designs like the dAMM mentioned above will bring richer composability.

References:

https://consensys.net/blog/blockchain-explained/the-roadmap-to-serenity-2/

https://www.web3.university/article/ethereum-sharding-an-introduction-to-blockchain-sharding

https://ethereum-magicians.org/t/a-rollup-centric-ethereum-roadmap/4698

https://twitter.com/pseudotheos/status/1504457560396468231

https://ethos.dev/beacon-chain/

https://notes.ethereum.org/@fradamt/H1ZqdtrBF

https://cloud.tencent.com/developer/article/1829995

https://medium.com/coinmonks/builder-proposer-separation-for-ethereum-explained-884c8f45f8dd

https://dankradfeist.de/ethereum/2021/10/13/kate-polynomial-commitments-mandarin.html

https://members.delphidigital.io/reports/the-hitchhikers-guide-to-ethereum